Wufei Ma
@wufeima

Followers: 121 | Following: 319 | Media: 22 | Statuses: 85

PhD student at @CCVLatJHU @JHU | Student researcher at Google | Prev: Meta, Amazon, MSRA, Megvii

Baltimore, MD

Joined August 2019

Yes, there is an official marking guideline from the IMO organizers which is not available externally. Without the evaluation based on that guideline, no medal claim can be made. With one point deducted, it is a Silver, not Gold.

🚨 According to a friend, the IMO asked AI companies not to steal the spotlight from kids and to wait a week after the closing ceremony to announce results. OpenAI announced the results BEFORE the closing ceremony. According to a Coordinator on Problem 6, the one problem OpenAI

Microsoft claims their new AI framework diagnoses 4x better than doctors. I'm a medical doctor and I actually read the paper. Here's my perspective on why this is both impressive AND misleading ... 🧵

We are at the Johns Hopkins booth at @CVPR. Come join us 😁 @JHUCompSci @HopkinsEngineer @HopkinsDSAI

Hopkins researchers including @JHUECE Tinoosh Mohsenin and @JHU_BDPs Rama Chellappa are speaking at booth 1317 of the IEEE / CVF Computer Vision and Pattern Recognition Conference today! Come meet #HopkinsDSAI

#CVPR2025

One of the biggest bottlenecks in deploying visual AI and computer vision is annotation, which can be both costly and time-consuming. Today, we’re introducing Verified Auto Labeling, a new approach to AI-assisted annotation that achieves up to 95% of human-level performance while

Excited to announce that our group will be presenting eight papers at @CVPR in Nashville! 🎉 We're excited to share the ideas we've been working on with the community. If you'll be there, we'd love to meet and chat -- always happy to exchange ideas and catch up in person. See

GitHub Copilot now has a coding agent embedded right where you already collaborate with developers: on GitHub. And yes, you can access it from VS Code too. 🤖

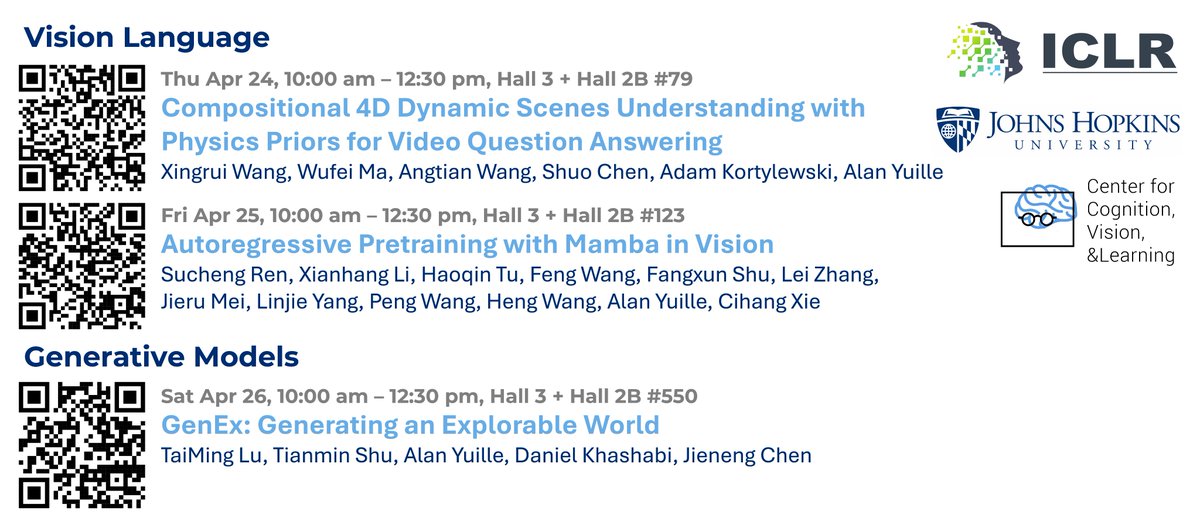

🤩Thrilled to share three papers from our group to be presented at @iclr_conf -- see you in Singapore!

@XingruiWang & @YuilleAlan’s “Compositional 4D Dynamic Scenes Understanding with Physics Priors for Video Question Answering” w/ @wufeima, Angtian Wang, @an_epsilon0, & @AdamKortylewski constructs a dataset & model 4 temporal world representations: https://t.co/mF7oNy3tYK (5/12)

arxiv.org

For vision-language models (VLMs), understanding the dynamic properties of objects and their interactions in 3D scenes from videos is crucial for effective reasoning about high-level temporal and...

🚀Our group is seeking summer interns for 2025 to work on exciting research projects in computer vision and AI. 🔗Job posting: https://t.co/qJ7WjmC4XJ 🤩If interested, please email Prof. @YuilleAlan! @HopkinsEngineer @JHUCompSci @HopkinsDSAI

Two more interesting examples from running Gemini 2.0 Flash Thinking on our 3DSRBench.

Estimating orientation from the perspective of a man? This is a challenging problem because models often confuse 2D and 3D spatial reasoning. Gemini 2.0 works through the problem like a textbook solution. Unfortunately, the answer is wrong, but the thinking is very impressive!

Relative position from the perspective of a horse? Gemini 2.0 divides the problem into smaller steps, estimating orientation and relative position. It also finds ways to verify its answer (e.g., by checking visual cues) and to rule out other possibilities.

Can Gemini 2.0 Flash Thinking handle complex 3D spatial reasoning? While not perfect, its spatial reasoning capabilities are truly remarkable!🤯 I tested the model on several 3DSRBench questions ( https://t.co/NUrc5lGDGI). It's very impressive how Gemini 2.0 effectively breaks down

Introducing Gemini 2.0 Flash Thinking, an experimental model that explicitly shows its thoughts. Built on 2.0 Flash’s speed and performance, this model is trained to use thoughts to strengthen its reasoning. And we see promising results when we increase inference time

🧠 “I want algorithms that will work in the real world and that will perform at the level of humans, probably ultimately better. And to do that, I think we need to get inspired by the brain,” says @YuilleAlan. Learn more about his research here:

cs.jhu.edu

Bestowing machines with the ability to perceive the physical world as humans do has been a career-long mission of Alan Yuille, a pioneer in the field of computer vision.

👏 Gemini 2.0 impresses with its visual and physical reasoning, but 3D spatial reasoning remains a challenge. 🔥We present 3DSRBench to benchmark the 3D spatial reasoning capabilities. 🫨 Surprisingly, Gemini 2.0 achieves only 50% accuracy, falling significantly short of

The Gemini 2.0 era is here. And we’re excited for you to start building with it. A quick rewind of what we just released ⏪ Gemini 2.0 Flash ⚡ comes with low latency and better performance. 🔵 You can now access an experimental version in @GeminiApp on the web, while Gemini

🤯Gemini 2.0 is great, especially how it sees in 3D and reasons about the physical world. However, 3D spatial reasoning still has a long way to go. 🔥We present 3DSRBench, a comprehensive 3D spatial reasoning benchmark with 2772 manually annotated VQAs across 12 question types.

🚀Excited to share our ImageNet3D dataset for general-purpose object-level 3D understanding, which augments 200 categories from ImageNet21k with 3D annotations. 🔗Project page: https://t.co/3iTfhESycX 🥰Big thanks to my advisors: @AdamKortylewski @yaoyaoliu1 @YuilleAlan

🎇This week our group will be presenting four papers at @NeurIPSConf. Feel free to stop by our posters and check out the latest works from our group! ✉️DMs open for any questions and chats. 🥳Kudos to the authors and collaborators: @pedrorasb @Zongwei_Zhou @jieneng_chen

Introducing Genex: Generative World Explorer. 🧠 Humans mentally explore unseen parts of the world, revising their beliefs with imagined observations. ✨ Genex replicates this human-like ability, advancing embodied AI in planning with partial observations. (1/6)
