Vincent Abbott

@vtabbott_

Followers: 7K · Following: 3K · Media: 149 · Statuses: 517

Maker of *those* diagrams for deep learning algorithms | @mit @mitlids incoming PhD

Perth 🔜 Boston

Joined July 2022

RT @vtabbott_: @SzymonOzog_ I'll be refactoring the code to allow for texture packs at some point. This is actually a good resource for sty….


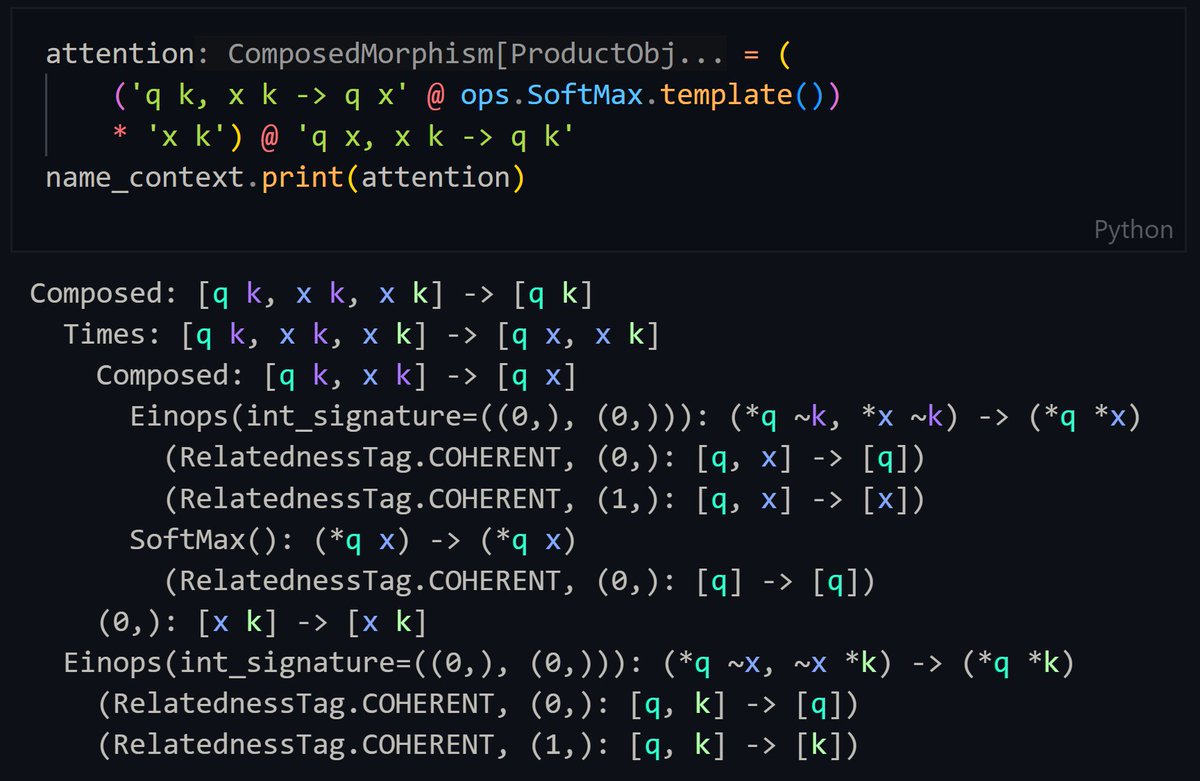

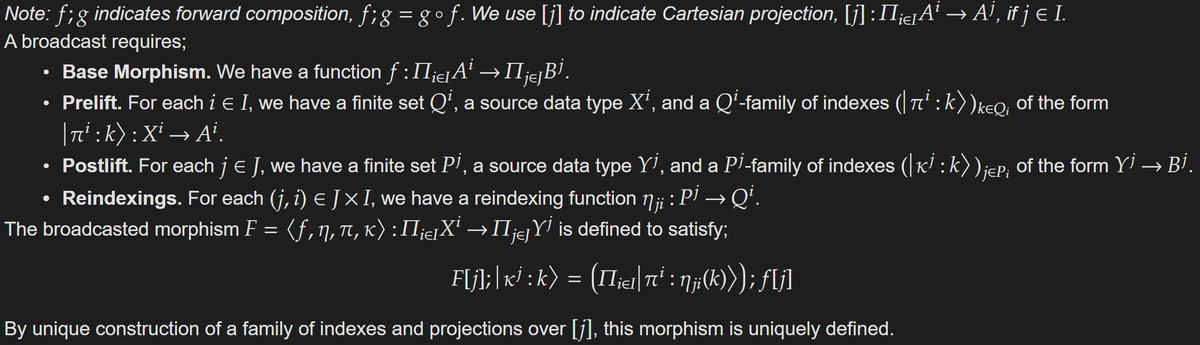

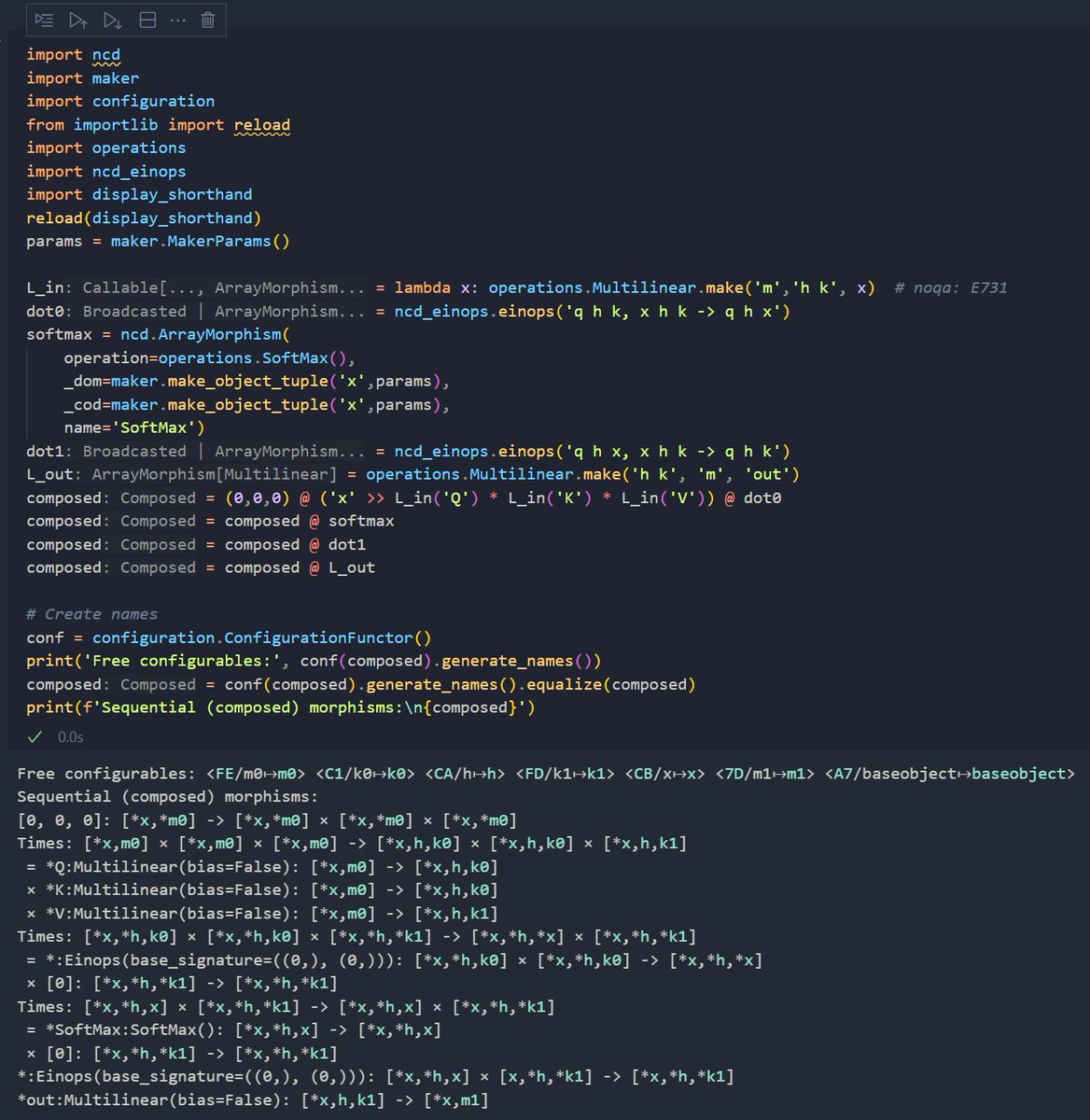

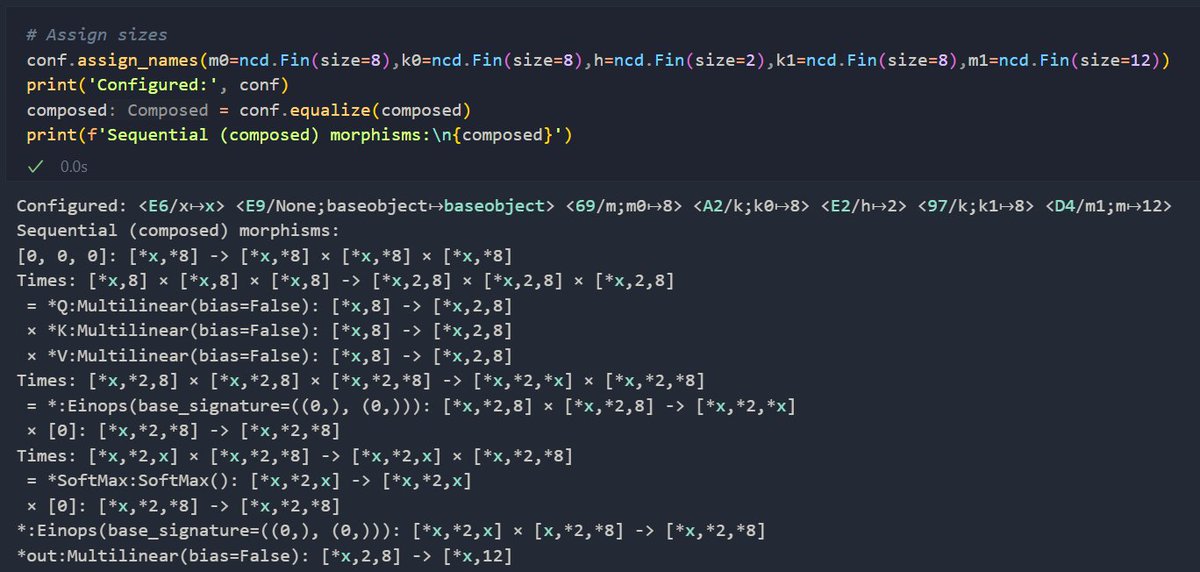

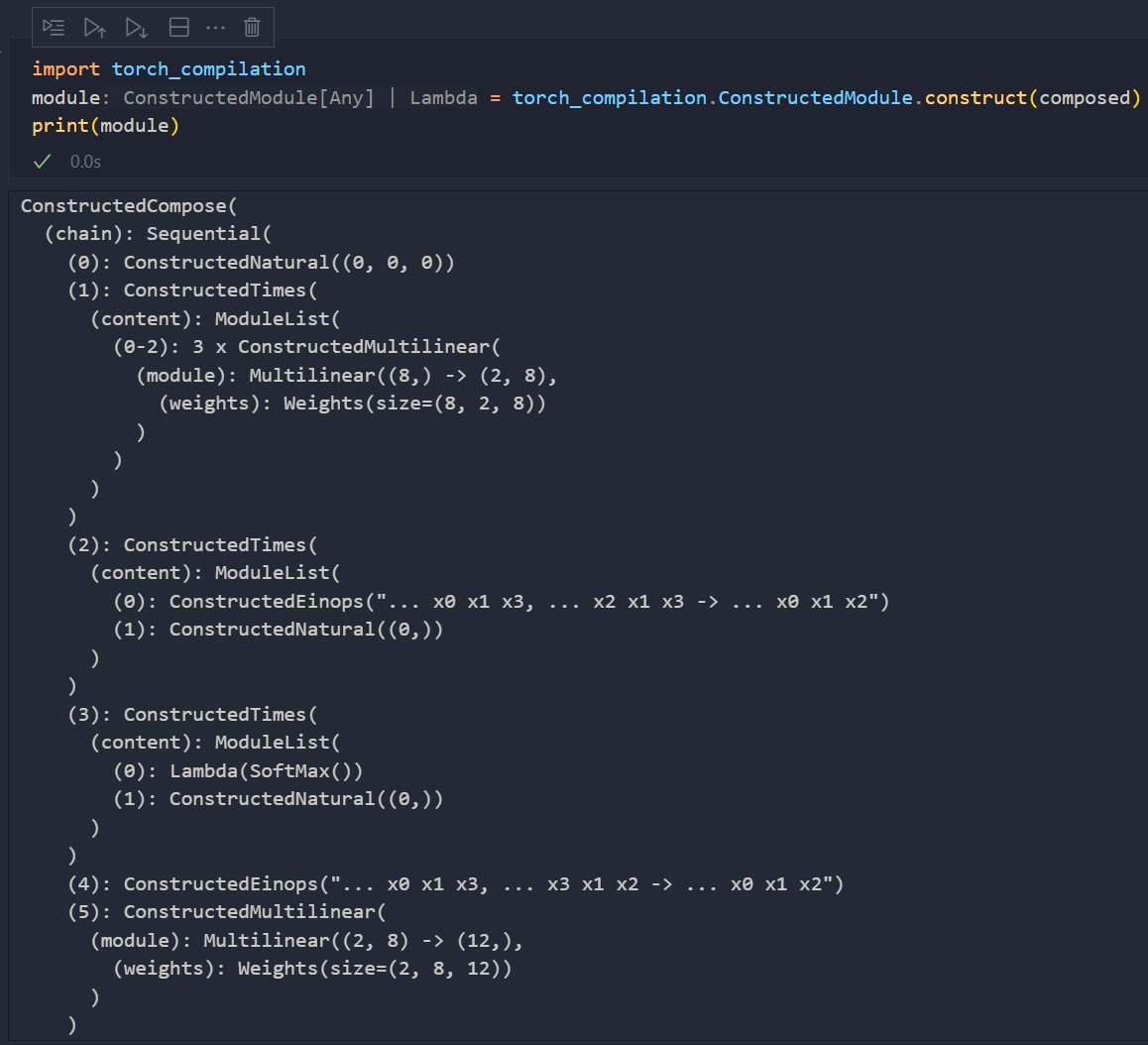

Recently posted w/ @GioeleZardini and @sgestalt_jp. The diagrams indicate that exponentials are attention's bottleneck. We use the fusion theorems to show that any normalizer works for fusion, replace SoftMax with L2 normalization, and implement it thanks to @GerardGlow47445! Even w/o warp shuffling TC.
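The post doesn't spell out the L2 variant, so the sketch below assumes each row of raw scores q·k is divided by its L2 norm in place of SoftMax (no exp, no max subtraction). It illustrates why such a normalizer fuses: the norm is a per-query scalar that factors out of the sum over keys, so a tiled pass only has to carry an unnormalized accumulator and a running sum of squares, then divide once at the end. The function names, the `block` size, and the `eps` guard are hypothetical, and this is plain Python for checking the math, not the authors' kernel.

```python
import math


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def l2_attention_reference(Q, K, V, eps=1e-12):
    """Assumed L2 attention: materialize each score row s = [q.k for k in K],
    divide it by its L2 norm, then take the weighted sum of the rows of V."""
    out = []
    for q in Q:
        s = [dot(q, k) for k in K]
        norm = max(math.sqrt(sum(x * x for x in s)), eps)  # eps avoids 0/0
        w = [x / norm for x in s]
        out.append([sum(w[j] * V[j][d] for j in range(len(K)))
                    for d in range(len(V[0]))])
    return out


def l2_attention_fused(Q, K, V, block=2, eps=1e-12):
    """Stream over blocks of keys/values without keeping a full score row.
    Per query, keep acc = sum_j s_j * v_j and sumsq = sum_j s_j^2;
    the normalizer is applied once, after all blocks are consumed."""
    dv = len(V[0])
    out = []
    for q in Q:
        acc = [0.0] * dv
        sumsq = 0.0
        for start in range(0, len(K), block):
            # Scores for this key block only.
            scores = [dot(q, K[j]) for j in range(start, min(start + block, len(K)))]
            for offset, s in enumerate(scores):
                sumsq += s * s
                for d in range(dv):
                    acc[d] += s * V[start + offset][d]
        norm = max(math.sqrt(sumsq), eps)
        out.append([a / norm for a in acc])
    return out


if __name__ == "__main__":
    Q = [[1.0, 0.0], [0.5, -1.0]]
    K = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
    V = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
    print(l2_attention_reference(Q, K, V))
    print(l2_attention_fused(Q, K, V, block=2))
```

Both functions print the same rows up to floating-point rounding, whatever the block size. Compared with online SoftMax, there is no running maximum to track and no rescaling of the accumulator when a new block arrives, because no exponentials are involved. One difference to keep in mind: with signed scores, these L2 weights can be negative, whereas SoftMax weights are always positive.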
