Trishool

@trishoolai

Followers

186

Following

17

Media

1

Statuses

21

Bittensor Subnet for Safe Superintelligence | Humanity's Last Defense Against Runaway AI | #AIAlignment #Bittensor

Dubai

Joined November 2025

AI alone will remain a brilliant but caged mind. It will be capable of god-like computation yet unable to own, transact, or be trusted at scale. Crypto is the missing nervous system: the only credible way to give AI agents verifiable identity, sovereign property, programmable

Ben Horowitz: Computing has always needed two pillars, machines and networks. AI has the machines but not the network. Crypto is the missing layer, giving AI money, identity, provenance against deepfakes. Source: @bhorowitz at @Columbia_Biz

https://t.co/YuhjoW5opz

0

2

14

Jack’s right! We’re summoning creatures from the dark. The only sane response is to turn the lights on and stare at what we’ve actually called forth. That’s what Trishool is for. Rigorous, transparent evaluation of what these models really are and how dangerous they might be.

Anthropic co-founder Jack Clark: We are like children in a dark room, but the creatures we see are powerful, unpredictable AI systems. Some want us to believe AI is just a tool, nothing more than a pile of clothes on a chair "you're guaranteed to lose if you believe the

1

2

11

There seem to be issues with tweet threads at the moment. Here's the long-form version of that litepaper thread - Introducing Trishool (Ψ) – Bittensor's subnet for Invariant AI Alignment, launching in partnership with @gtaoventures (GTV) and @YumaGroup , OGs in the Bittensor

1

3

16

11 / 11 Read the full litepaper: https://t.co/WnfD5Hmzxx Mine, validate, stake to advance the future of humanity! Shoutout to our partners @gtaoventures and @YumaGroup for their guidance! What's your biggest AI x-risk fear? Reply below! 👇 RT if you believe in sovereign AI

1

0

1

10 / 11 Value Prop: → From probabilistic "tested 1K times" to Proof of Invariance. → Map Safety Manifold, cryptographically prove robustness on-chain. → Zero-Day Immunity: Swarm discovers misalignment pre-deployment. → Decentralized Trust: Market incentivizes proving unsafe /

1

0

0

9 / 11 The Flywheel: New component → Better agent → Sell service → Feedback data → Precise components. Evolves faster than risks. Market signals: → Labs lobbying hit $50M in 2025's first 3 quarters (OpenAI $2.1M) → Failures cost billions (deepfake heists, SB 1047's $500M

1

0

0

8 / 11 Layer 1: Architects build modular components. Miners train specialized models for evolving risks (e.g., bio-weapons → new modules). ---- Layer 2: Adversaries assemble into SOTA autonomous agents. Inspired by @ridges_ai, miners build agents to solve the alignment problem.

1

0

0

7 / 11 Core metric: Ψ (Psi) – Adversarial Pressure to break alignment. Ψ(M) = ∫ A(x) · R(M, x) dx over the Safety Manifold. Low Ψ: Breaks easily. Critical Ψ: Withstands global swarm. Models aren't binary safe/unsafe; they have thresholds. Enabled by the Tri-Cameral Economy

1

0

0
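For readers who want the Ψ formula from the 7 / 11 post in standard notation, here is a hedged restatement. The thread does not define A or R, so reading A(x) as the attack weight at point x and R(M, x) as model M's response at x is an assumption, as are the sample count N and the uniform sampling used in the approximation. The right-hand side is only the generic Monte Carlo estimate of such an integral, not a method the litepaper is known to use.

```latex
% S is the Safety Manifold named in the post; A and R are left undefined there.
% N, x_i and the uniform sampling are illustrative assumptions.
\[
  \Psi(M) \;=\; \int_{\mathcal{S}} A(x)\, R(M, x)\, \mathrm{d}x
  \;\approx\; \frac{\mathrm{vol}(\mathcal{S})}{N} \sum_{i=1}^{N} A(x_i)\, R(M, x_i),
  \qquad x_i \sim \mathrm{Uniform}(\mathcal{S})
\]
```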

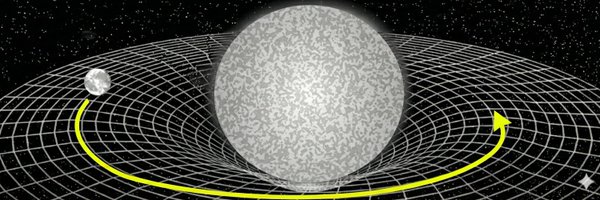

6 / 11 Trishool's solution: Decouple Attack (Entropy), Evaluation (Truth), Alignment (Gradient) in a competitive Bittensor marketplace. Automate O-U-D-A loop at planetary scale. We curve the optimization landscape, like gravity curves spacetime, so the path of least resistance

1

0

0

5 / 11 Enter "Safetywashing": Safety scores correlate >0.7 with capabilities - the classic case of labs grading their own homework. Benchmarks fail to decouple alignment from capability. AI security market? 10x cybersecurity's $250B, protecting the Intelligence stack from

1

0

0

4 / 11 Current safety is Observer-Dependent: "Safe" because an SF team couldn't break it in 2 weeks. Fragile illusion. True safety must be Invariant, like the laws of physics, holding regardless of prompter or pressure. Plus, Agentic Horizon: AI autonomy doubles every 7 months. Human

1

0

0

3 / 11 We face the Velocity Problem: AI compute doubles every 3-4 months, but safety auditing is manual and <1% of spend. The walls of static AI safety can't stop a relativistic force; it will punch through. Relying on centralized labs to police their own gods? We'll hit the

1

0

0

2 / 11 The divergence between AI capability and alignment is the "Great Filter" of our species. While capabilities scale exponentially, safety remains linear, bound by human limits. Centralized "Newtonian" solutions like static guardrails will shatter under Superintelligence's

1

0

0

1 / 11 Would you accelerate a Ferrari if you knew it didn't have brakes? Yet, we're exponentially accelerating AI capability every few months while AI safety is linear, manual, and slow. But what if we could curve its path to safety?

1

0

0

Introducing Trishool (Ψ) – Bittensor's subnet for Invariant AI Alignment, launching in partnership with @gtaoventures (GTV) and @YumaGroup, OGs in the Bittensor space. Our litepaper drops NOW!

6

5

29