Tom Young

@tomyoung903

Followers

57

Following

722

Media

2

Statuses

124

Research fellow working on large language models @NUS w/ folks such as @XueFz and @YangYou1991

Singapore

Joined April 2017

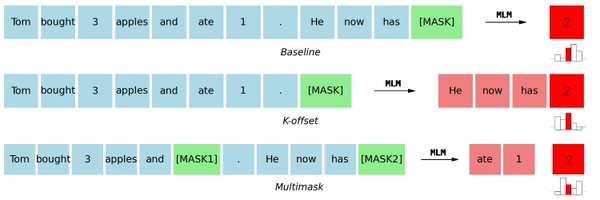

Do Masked Language Models (e.g., PaLM2) inherently learn self-consistent token distributions? 🤔🤔 Our experiments show: No.❗And we can ensemble multiple inconsistent distributions to improve the model’s accuracy. 📈 Paper: https://t.co/pgbP3Nf05w Code: https://t.co/kCiDOEeGU0

0

1

9

AI agents are getting better at looking at different types of data in businesses to spot patterns and create value. This is making data silos increasingly painful. This is why I increasingly try to select software that lets me control my own data, so I can make it available to my

deeplearning.ai

The Batch AI News and Insights: AI agents are getting better at looking at different types of data in businesses to spot patterns and create value...

129

355

2K

We are at the dawn of a "Cambrian Explosion" in scientific discovery. A super AI science copilot (or even pilot) is on the horizon. As AI frees humanity from routine labor and enhances education, we can fully harness our intelligence—investing our efforts (with AI’s aid) into

0

1

1

Thrilled to share that MixEval-X has been selected for a spotlight at #ICLR2025! Our real-world-aligned, efficient evaluation method pushes the frontier of multi-modal model development. It would be useful to you whether you’re working on MMLM, AIGC, or any2any models. 🪐Brace

🏇 Frontier players are racing to solve modality puzzles in the quest for AGI. But to get there, we need consistent, high-standard evaluations across all modalities! 🚀 Introducing MixEval-X, the first real-world, any-to-any benchmark. Inheriting the philosophy from MixEval,

9

8

20

Excited to share that our paper on automated scientific discovery has been accepted to #ICLR2025! In brief, 1. It shows that LLMs can rediscover the main innovations of many research hypotheses published in Nature or Science. 2. It provides a mathematically proven theoretical

Given only a chemistry research question, can an LLM system output novel and valid chemistry research hypotheses? Our answer is YES!!!🚀Even can rediscover those hypotheses published on Nature, Science, or a similar level. Preprint: https://t.co/xJQr0JrIzO Code:

3

11

30

That’s why we built MixEval-X. Test your multi-modal models in real-world tasks here: https://t.co/Jz2THe24I3 It’s easy to run!

Gemini 2.0 Flash Experimental has the ability to produce native audio in a variety of styles and languages - all from scratch. 🗣️ Here’s how this is different to traditional text-to-speech systems ↓ https://t.co/FRWb3q3KHe

0

4

11

🔥Congrats GDM on successfully having Fuzhao! Needless to say the extreme challenge to get recruited by GDM Gemini team, especially for an SG based PhD student, what I want to mention here are three most important merits that I observe from Fuzhao during the four years’

Life Update: I’m joining Google DeepMind as a Senior Research Scientist after three incredible years of PhD (aka "Pretrain myself harD" 😄). I’ll be contributing to Gemini pretraining and multi-modality research. I feel incredibly fortunate to report to @m__dehghani again, and

1

2

16

🏇 Frontier players are racing to solve modality puzzles in the quest for AGI. But to get there, we need consistent, high-standard evaluations across all modalities! 🚀 Introducing MixEval-X, the first real-world, any-to-any benchmark. Inheriting the philosophy from MixEval,

2

21

68

How to get ⚔️Chatbot Arena⚔️ model rankings with 2000× less time (5 minutes) and 5000× less cost ($0.6)? Maybe simply mix the classic benchmarks. 🚀 Introducing MixEval, a new 🥇gold-standard🥇 LLM evaluation paradigm standing on the shoulder of giants (classic benchmarks).

31

66

245

Say hello to Grok-1's new PyTorch+HuggingFace edition! 🚀 314 billion parameters, 3.8x faster inference. Easy to use, open-source, and optimized by Colossal-AI. 🤖 Dive in: #Grok1 #ColossalAI🌟 https://t.co/D1P1XM1rEs Download Now: https://t.co/Fg3BmiNRzT

huggingface.co

33

108

679

Was impressed by the idea the first time I got to know it. DMC demonstrates the possibility of compressing KV cache in a smarter way, and KV cache compression is just sooooo important nowadays! Glad to see the huge leap in LLM's efficiency! Kudos to the team! 🔥🔥🔥

The memory in Transformers grows linearly with the sequence length at inference time. In SSMs it is constant, but often at the expense of performance. We introduce Dynamic Memory Compression (DMC) where we retrofit LLMs to compress their KV cache while preserving performance

0

3

4

Big congrats to @DrJimFan and @yukez !!! I’m super lucky to be part of the GEAR team! I do believe that Generalist Embodied Agent is the next step towards AGI. Join GEAR and let’s make some exciting things together!

Career update: I am co-founding a new research group called "GEAR" at NVIDIA, with my long-time friend and collaborator Prof. @yukez. GEAR stands for Generalist Embodied Agent Research. We believe in a future where every machine that moves will be autonomous, and robots and

0

1

32

You don't see a paper like this everyday!

Neural Network Diffusion Diffusion models have achieved remarkable success in image and video generation. In this work, we demonstrate that diffusion models can also generate high-performing neural network parameters. Our approach is simple, utilizing an autoencoder and a

0

0

3

(1/5)🚀 Our OpenMoE Paper is out! 📄 Including: 🔍ALL Checkpoints 📊 In-depth MoE routing analysis 🤯Learning from mistakes & solutions Three important findings: (1) Context-Independent Specialization; (2) Early Routing Learning; (3) Drop-towards-the-End. Paper Link:

5

101

512

Fuzhao shared his developing details with OpenMoE every time I asked. A lot of details go into training LLMs.

Mistral magnet link is awesome, but let’s get the timelines straight. My student and NVIDIA intern Fuzhao open-sourced a decoder-only MoE 4 months ago. Google Switch Transformer, a T5-based MoE, was open > a year ago. MoE isn’t new. It just didn’t get as much attention routed

0

0

4

As a postdoc working on AI, I still spend a lot of time trying to improve my coding skills😂. Would love to see more tweets on those.

People often ask if ML or software skills are more the bottleneck to AI progress. It’s the wrong question—both are invaluable, and people with both sets of skills can have outsized impact. We find it easier, however, to teach people ML skills as needed than software engineering.

0

0

2

This pipeline suits many companies I imagine🤔🤔

NVIDIA basically compressed 30 years of its corporate memory into 13B parameters. Our greatest creations add up to 24B tokens, including chip designs, internal codebases, and engineering logs like bug reports. Let that sink in. The model "ChipNeMo" is deployed internally, like a

1

0

4

🥳🥳🥳 I feel fortunate to chat with and learn from him from time to time 😄😄

Super thrilled to announce that I've been awarded the 2023 Google PhD Fellowship! Enormous gratitude to my wonderful mentors/advisors who championed my application: @m__dehghani, @YangYou1991, @AixinSG, and to all my incredible collaborators. A heartfelt thanks to @GoogleAI and

1

0

5

“Levels of AGI: Operationalizing Progress on the Path to AGI” Google defines AGI levels in this new paper. It is interesting. Paper: https://t.co/DtjTK9sG7w

5

12

53

What's In My Big Data? Proposes a platform and a set of sixteen analyses that allow us to reveal and compare the contents of large text corpora https://t.co/rYdZErJFld

1

35

203