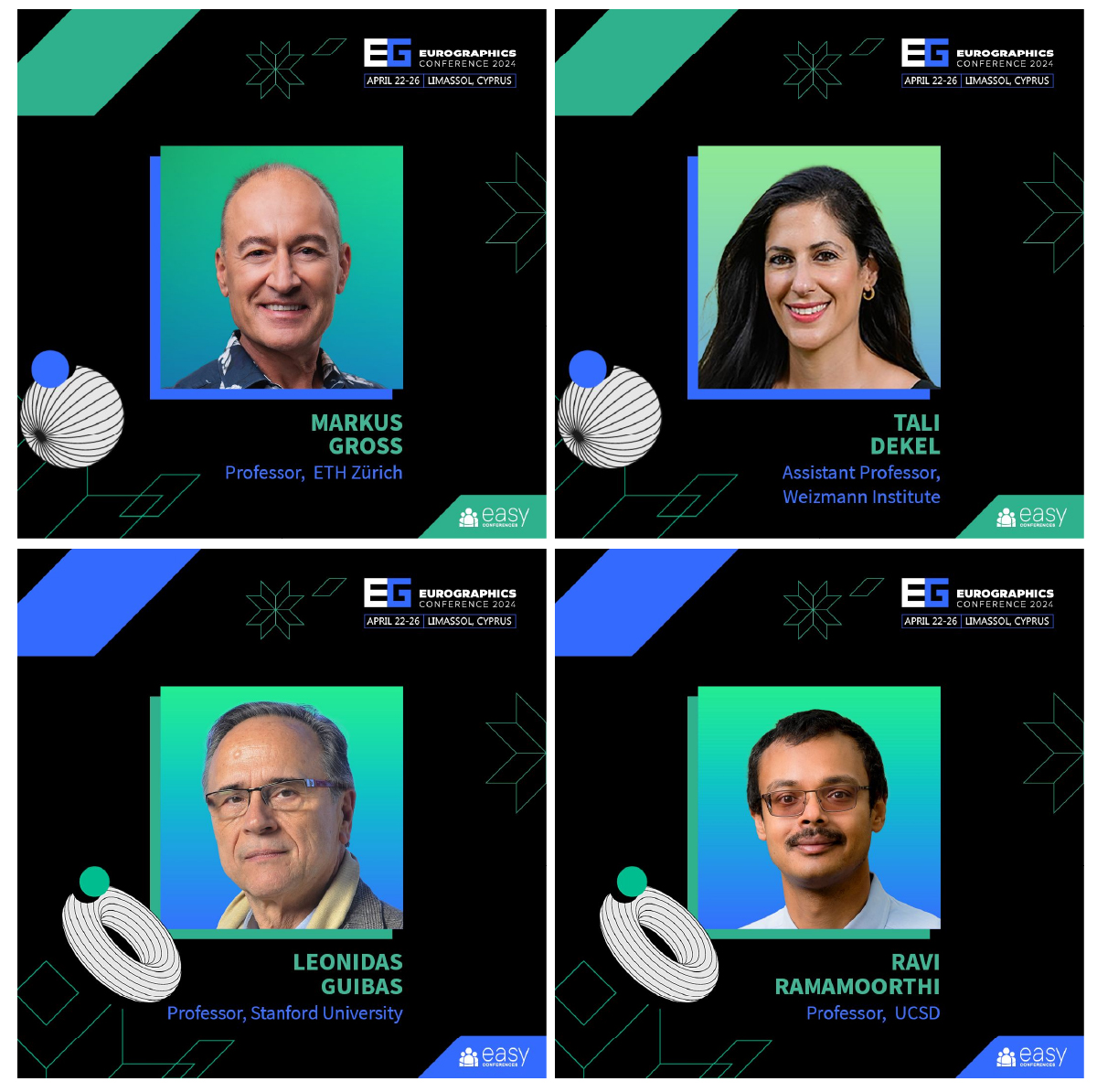

Tali Dekel

@talidekel

Followers: 2K · Following: 125 · Media: 14 · Statuses: 111

Associate Professor @ Weizmann Institute; Research Scientist @ Google DeepMind

Joined May 2019

Best Paper Award @ SIGGRAPH'25 🥳

So much is already possible in image generation that it's hard to get excited. TokenVerse has been a refreshing exception! Disentangling complex visual concepts (pose, lighting, materials, etc.) from a single image, and mixing them across others with plug-and-play ease!

5

1

94

Excited to share that "TokenVerse: Versatile Multi-concept Personalization in Token Modulation Space" got accepted to SIGGRAPH 2025! It tackles disentangling complex visual concepts from as little as a single image and re-composing concepts across multiple images into a coherent

0

1

28

#CVPR2025 🚀

Understanding the inner workings of foundation models is key for unlocking their full potential. While the research community has explored this for LLMs, CLIP, and text-to-image models, it's time to turn our focus to VLMs. Let's dive in! 🌟

0

2

22

RT @pika_labs: Today we launched our Pika 2.0 model. Superior text alignment. Stunning visuals. And ✨Scene Ingredients✨ that allow you to up…

0

352

0

🔍 Unveiling new insights into Vision-Language Models (VLMs)! In collaboration with @OneViTaDay & @talidekel, we analyzed LLaVA-1.5-7B & InternVL2-76B to uncover how these models process visual data. 🧵

0

23

153

Working on layered video decomposition for a few years now, I'm super excited to share these results! Casual videos to *fully visible* RGBA layers, even under significant occlusions! Kudos @YaoChihLee, @erika_lu_, Sarah Rumbley, @GeyerMichal, @jbhuang0604, and @forrestercole.

Excited to introduce our new paper, Generative Omnimatte: Learning to Decompose Video into Layers, with the amazing team at Google DeepMind! Our method decomposes a video into complete layers, including objects and their associated effects (e.g., shadows, reflections).

1

17

128

Creating a panoramic video out of a casual panning video requires a strong generative motion prior, which we finally have! Kudos @JingweiMa2 and team!

We are excited to introduce "VidPanos: Generative Panoramic Videos from Casual Panning Videos". VidPanos converts phone-captured panning videos into (fully playing) video panoramas, instead of the usual (static) image panoramas. Website: Paper:

0

0

9

Unlike images, getting customized video data is challenging. Check out how we can customize a pre-trained text-to-video model *without* any video data!

Introducing ✨Still-Moving✨, our work from @GoogleDeepMind that lets you apply *any* image customization method to video models 🎥. Personalization (DreamBooth) 🐶, stylization (StyleDrop) 🎨, ControlNet 🖼️: ALL in one method! Plus… you can control the amount of generated motion 🏃‍♀️. 🧵👇

0

3

26

Self-supervised representation learning (DINO) + test-time optimization = DINO-Tracker! Achieving SOTA tracking results across long-range occlusions. Congrats @tnarek99 @assaf_singer and @OneViTaDay on the great work! 🦖🦖

Excited to present DINO-Tracker (accepted to #ECCV2024)! A novel self-supervised method for long-range dense tracking in video, which harnesses the powerful visual prior of DINO. Project page: [1/4] @assaf_singer @OneViTaDay @talidekel

1

22

138

Thank you @WeizmannScience for featuring our research!

Weizmann’s Dr. @TaliDekel, among the world’s leading researchers in generative AI, focuses on the hidden capabilities of existing large-scale deep-learning models. Her research with Google led to the development of the recently unveiled Lumiere >>

0

1

44

RT @_k_a_c_h_: Hi friends — I'm delighted to announce a new summer workshop on the emerging interface between cognitive science 🧠 and compu…

0

31

0

Thank you @ldvcapital for the recognition and for supporting women in Tech!

Since 2012, we have been showcasing brilliant women whose work in #VisualTech and #AI is reshaping business and society. We are thrilled to feature 120+ women driving today's tech innovations 🚀

1

1

7

Thank you @SagivTech for the opportunity to take part in @IMVC2024, and for keeping our Israeli Vision community alive and kicking during these challenging times!

0

0

13

Space-Time Diffusion Features for Zero-Shot Text-Driven Motion Transfer -- accepted to #CVPR2024! Code is available: w/ @DanahYatim @RafailFridman @omerbartal @yoni_kasten.

Text2video models are getting interesting! 📽️ Check out how we leverage their space-time features in a zero-shot manner for transferring motion across objects and scenes! Led by @DanahYatim, @RafailFridman, @yoni_kasten, @talidekel. [1/3]

0

2

54

Looking forward to it!

AI for Content Creation workshop is back at #CVPR2024. 21st March paper deadline @ Plus stellar speakers: @AaronHertzmann, @liuziwei7, @robrombach, @talidekel, @Jimantha, @Diyi_Yang, late-breaking speakers and special guest panel!!!

0

0

3