Swayam Singh

@swayaminsync

Followers

1K

Following

5K

Media

690

Statuses

3K

@MSFTResearch | “An Asynchronously Conscious Human”

BLR

Joined April 2021

The strong version of you is dealing with all the inner demons silently, keeping all the chaos contained within you, hidden from the outside world. It'll get exhausting sometimes, and I am proud of you. Don't give up.

0

0

17

One thing I’ve really enjoyed about building a new data type is adding proper algorithm support. GCC, Clang, and CPython are great references, but a lot of their internal logic doesn’t directly apply to my dtype or even stay consistent across platforms, so there’s always some

1

0

6

Back in 2017, in my school (of course, Kendriya Vidyalaya), we cried because our school-wide teacher crush got married, literally all the boys. That "sorrow-filled" celebration was directly proportional to how "notorious" the class was. Those were such good days!!

Teaching is such a fulfilling job. My brother, who is a teacher in a government school, went back to work after his wedding, and the kids welcomed him with a fully decorated classroom and tons of gifts that included pens, notebooks, diaries, and handmade cards. I can’t even 😭

0

0

5

A pair who aren't scared of me. They still hesitate to come close, but yeah, bonding is ongoing. The black one is only focused on treats :)

1

0

6

Almost done!! I hope this will not spoil the upcoming Dune-3 movie

0

0

5

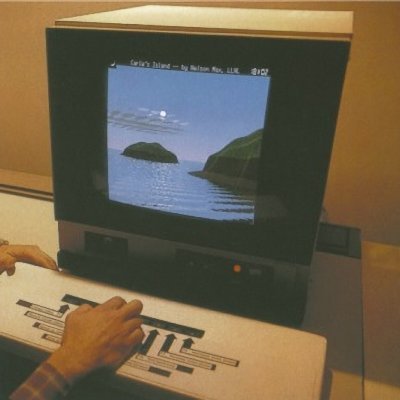

Won't be doing any kind of "extreme-work" post midnight 😂 feat by @Ramneet_Singhh

1

0

8

OSS projects are like: Ideal: "Please read our docs to understand the system." Reality: "Please understand the system so you can fix our docs."

1

0

11

These recently added @CppCon talks are bangers; I'll be binge-watching them today along with work. Ordering might be (row-major) 0, 3, 5, 4, 2, 1 https://t.co/H8TeLqwRBQ

0

0

6

I obviously love the vLLM dev ecosystem: its use of the build system, incremental compilation, and now plugins!

Need to customize vLLM? Don't fork it. 🔌 vLLM's plugin system lets you inject surgical modifications without maintaining a fork or monkey-patching entire modules. Blog by Dhruvil Bhatt from AWS SageMaker 👇 Why plugins > forks: • vLLM releases every 2 weeks with 100s of PRs

0

0

5