Steve Azzolin

@steveazzolin

Followers: 262

Following: 766

Media: 5

Statuses: 101

ELLIS PhD student @ UNITN/UniCambridge || Prev. Visiting Research Student at UniCambridge || Prev. Research intern at SISLab

Joined January 2014

📢 Now that we have an in-person LoG, why are we still supporting meetups? 👉 Meetups keep the community alive all year round, beyond the main event 👉 They give the community more chances to connect locally and inclusively 👉 They complement—not compete with—the in-person LoG

📢News about our Call for Local Meetups 🚉 To favor commuting, 3-day events are now allowed 🗓️ Deadline extended to 30th November See https://t.co/bYWUSQROON for details

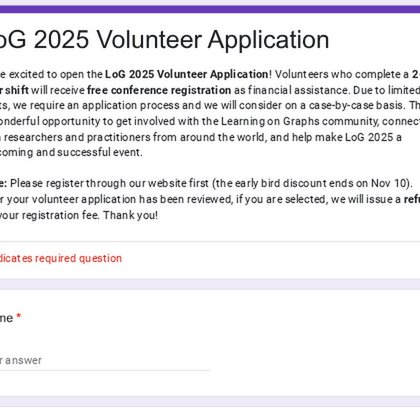

🚀 The LoG 2025 volunteer application is open! Give 2 hours → get FREE conference registration. Connect with researchers worldwide and help make LoG 2025 welcoming and successful. 🌍🤝 Apply now ➡️ https://t.co/hUOQLxYeDv

#LoG2025 #Volunteer #AI #ML

We’re excited to open the LoG 2025 Volunteer Application! Volunteers who complete a 2-hour shift will receive free conference registration as financial assistance. Due to limited spots, we require an...

📢LoG 2025 Registration📢 👤 Venue: Arizona State University, Phoenix, AZ 🚨 For each accepted paper/tutorial, at least one author must attend in person: https://t.co/98fSr6jis7 🔗 Registration details: https://t.co/xwPMjSkOGI

✨Call for organising local meetups is now out✨ We continue our mission to support a thriving network of local, researcher-driven events 📢 Join our network and submit a proposal: https://t.co/bYWUSQROON 🗓️Deadline: 10th November

📢 Call for Tutorials – LoG 2025 Learning on Graphs Conference, Dec 10 https://t.co/O6g9flo0El 🗓 Key dates (AoE): • Sept 17 – Proposal deadline • Oct 1 – Notification • Nov 3 – Final materials • Dec 10 – Tutorials Submit your proposal & join us! #LoG2025

In case you missed it, we're still taking self-nominations for reviewers at LoG 2025✍️

🚨 Reviewer Call: LoG 2025 Passionate about graph ML or GNNs? Help shape the future of learning on graphs by reviewing for the LoG 2025 conference! https://t.co/05V5vGgZbY RT & share! #GraphML #GNN #ML #AI #CallForReviewers

Join the Temporal Graph Learning Workshop at KDD 2025! We have an amazing program with great speakers and papers waiting for you. Let's shape the future of temporal graph research together. 📍Aug 4 | 1–5 PM | Room 714A, Toronto Convention Center 🔗

Ready to present your latest work? The Call for Papers for #UniReps2025 @NeurIPSConf is open! 👉Check the CFP: https://t.co/i0lGs0DQXm 🔗 Submit your Full Paper or Extended Abstract here: https://t.co/9fZzZvgi9M Speakers and panelists: @d_j_sutherland @elmelis @KriegeskorteLab

🚨 Calling all ML & AI companies! The LOG 2025 sponsor page is now live: https://t.co/tlmDf6LrN4 LOG is the go-to venue for graph ML, reasoning & systems research. We're inviting sponsors to support this fast-growing community & gain visibility. #LOG2025 #GraphML #MachineLearning

What can we do to make self-explanations less ambiguous? -> We propose to automatically adapt explanations to the task by stitching together SE-GNNs with white-box models and combining their explanations.

- Models encoding different tasks can produce the same self-explanations, limiting the usefulness of explanations

Studying some popular models, we found that: - The information that self-explanations convey can radically change based on the underlying task to be explained, which is, however, generally unknown

🧐What are the properties of self-explanations in GNNs? What can we expect from them? We investigate this in our #ICML25 paper. Come and have a chat at poster session 5, Thu 17, 11 am. With Sagar Malhotra @andrea_whatever @looselycorrect

Big news: The first in-person event is coming 👀

We’re thrilled to share that the first in-person LoG conference is officially happening December 10–12, 2025 at Arizona State University https://t.co/Js9FSm6p3N Important deadlines: Abstract, Aug 22; Submission, Aug 29; Reviews, Sept 3–27; Rebuttal, Oct 1–15; Notifications, Oct 20

This is an issue on multiple levels, and authors using those "shortcuts"👀 are equally responsible for this unethical behaviour

To clarify: I didn't mean to shame the authors of these papers. The real issue is AI reviewers; what we see here is just the authors trying to defend against that in some way (the proper way would be to identify poor reviews and ask the AC or meta-reviewer to discard them)

Happening tomorrow! Saturday, 10 am-12:30 pm, Poster #508

📣 New paper on #XAI for #GNNs: 👉 Can you trust your GNN explanations? 👉 How can you measure #faithfulness properly? 👉 Are all estimators the same? 👉 What's the link with #OOD generalization? We look at these questions and more in Steve's latest #ICLR paper! Have a look!

In LoCo-LMs, we propose a neuro-symbolic loss function to fine-tune an LM to acquire logically consistent knowledge from a domain graph, i.e., with respect to a set of logical consistency rules. @looselycorrect @tetraduzione

https://t.co/YXGg38mIon

Large language models (LLMs) are a promising venue for natural language understanding and generation. However, current LLMs are far from reliable: they are prone to generating non-factual...
