Qian Liu

@sivil_taram

Followers: 4K
Following: 3K
Media: 124
Statuses: 1K

Researcher @ TikTok 🇸🇬 📄 Sailor / StarCoder / OpenCoder 💼 Past: Research Scientist @SeaAIL; PhD @MSFTResearch 🧠 Contribution: @XlangNLP @BigCodeProject

Joined November 2021

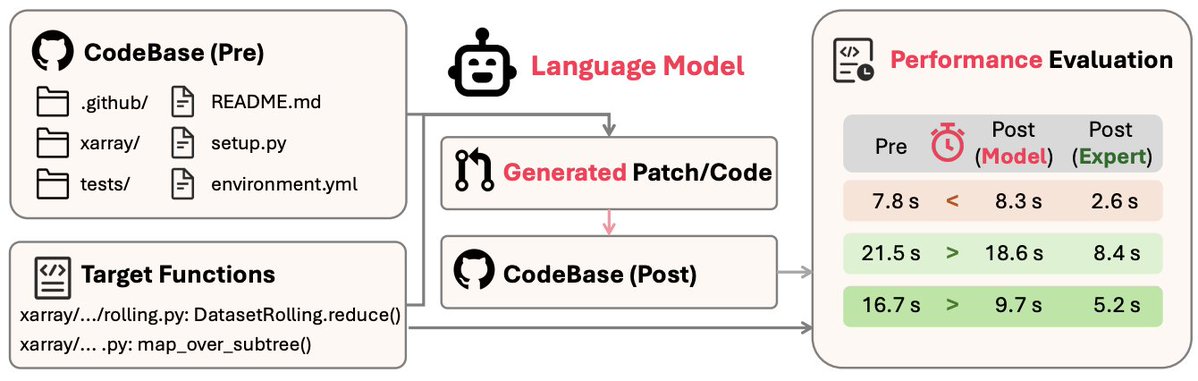

🔥 LLMs can fix bugs, but can they make your code faster? We put them to the test on real-world repositories, and the results are in! 🚀 New paper: "SWE-Perf: Can Language Models Optimize Code Performance on Real-World Repositories?" Key findings:
1️⃣ We introduce SWE-Perf, the

RT @yusufma555: 🚀🚀🚀 Ever wondered what it takes for robots to handle real-world household tasks? long-horizon execution, deformable object…

RT @FaZhou_998: Apart from the performance, it’s pure entertainment just watching Qwen3‑Coder build Qwen Code all by itself. Agentic coding…

RT @Alibaba_Qwen: >>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to…

RT @huybery: After three intense months of hard work with the team, we made it! We hope this release can help drive the progress of Coding…

RT @Marktechpost: TikTok Researchers Introduce SWE-Perf: The First Benchmark for Repository-Level Code Performance Optimization. SWE-Perf, …

RT @allhands_ai: Nice new research work by @tiktok_us on benchmarking performance optimization by LLM agents: Open…

arxiv.org

Code performance optimization is paramount in real-world software engineering and critical for production-level systems. While Large Language Models (LLMs) have demonstrated impressive...

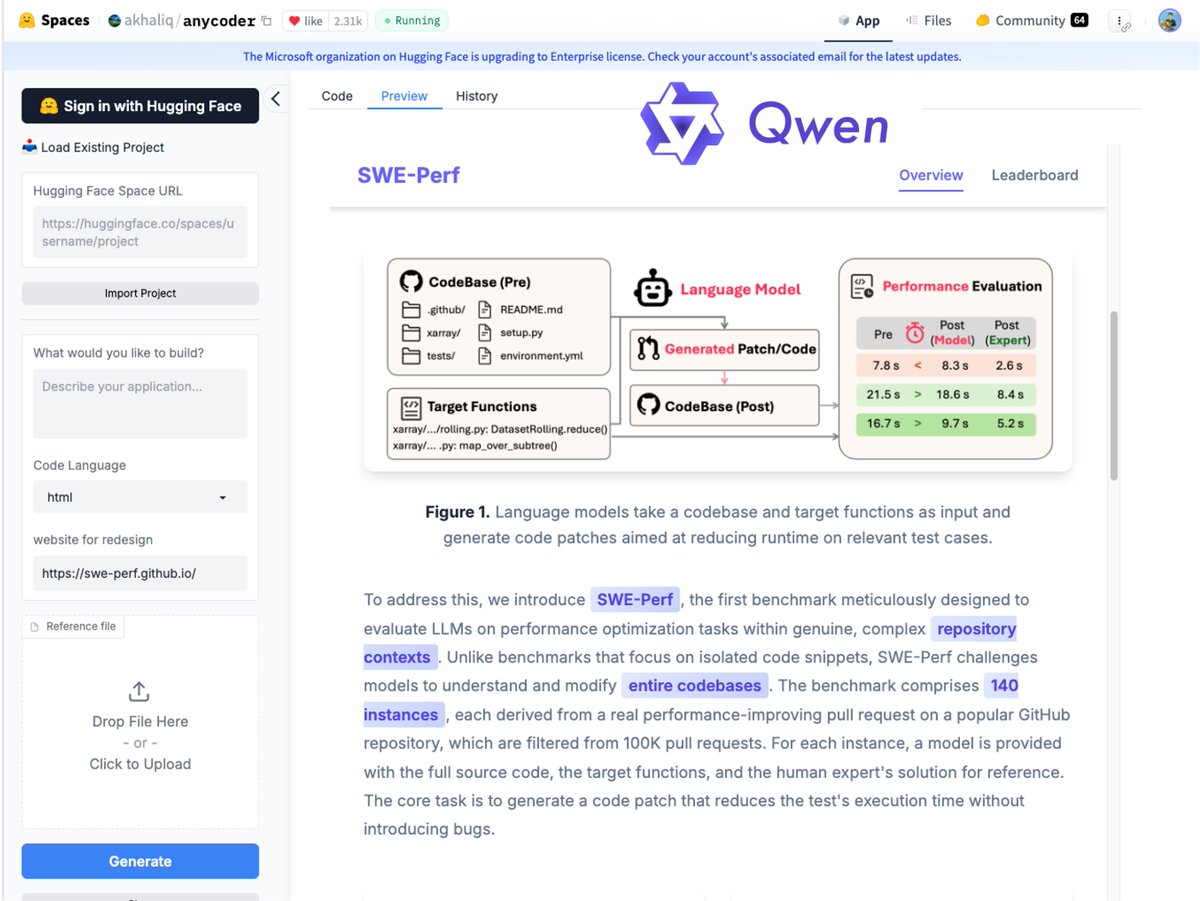

RT @_akhaliq: SWE-Perf. Can Language Models Optimize Code Performance on Real-World Repositories?

Work together with Xinyi He, @spirit__song, Lin Yan, Zhijie Fan, @yeeelow233, Zejian Yuan, and Zejun Ma!

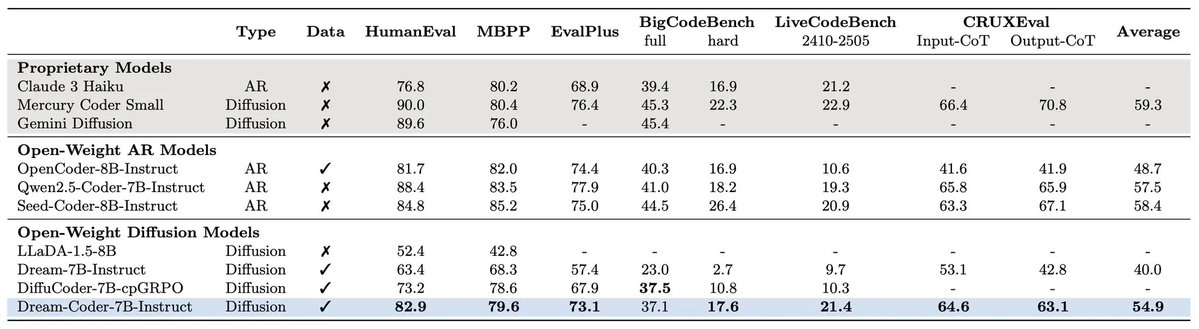

RT @ikekong: What happened after Dream 7B? First, Dream-Coder 7B: A fully open diffusion LLM for code delivering strong performance, traine…

RT @_zhihuixie: 🚀 Thrilled to announce Dream-Coder 7B — the most powerful open diffusion code LLM to date.
