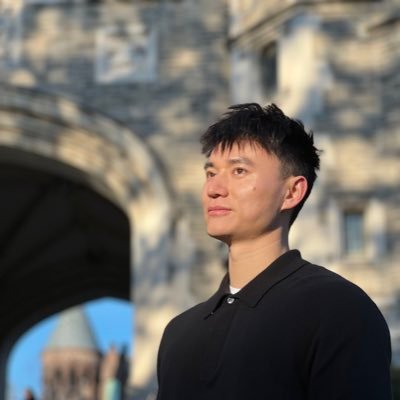

Ryan Chan

@ryanchankh

Followers: 391
Following: 943
Media: 6
Statuses: 51

Machine Learning PhD at @penn. Interested in the theory and practice of interpretable and interactive machine learning.

Philadelphia, PA

Joined September 2021

Come check out our work at NeurIPS on Friday, Dec 5! Conformal Information Pursuit for Interactively Guiding Large Language Models
Project link: https://t.co/DZyHGQ9uZa
Poster: Friday, Dec 5, 11am-2pm, Exhibit Hall C, D, E #2811

I wrote a review paper about statistical methods in generative AI; specifically, about using statistical tools along with genAI models for making AI more reliable, for evaluation, etc. See here: https://t.co/oNrb4dYe9i! I have identified four main areas where statistical

Congratulations to @PennEngineers researchers featured at @NeurIPSConf, with work spanning LLMs, generative AI, trustworthy AI, neuroscience, health and more. #NeurIPS2025

Come check out our work “𝐒𝐄𝐂𝐀: 𝐒𝐞𝐦𝐚𝐧𝐭𝐢𝐜𝐚𝐥𝐥𝐲 𝐄𝐪𝐮𝐢𝐯𝐚𝐥𝐞𝐧𝐭 𝐚𝐧𝐝 𝐂𝐨𝐡𝐞𝐫𝐞𝐧𝐭 𝐀𝐭𝐭𝐚𝐜𝐤𝐬 𝐟𝐨𝐫 𝐄𝐥𝐢𝐜𝐢𝐭𝐢𝐧𝐠 𝐋𝐋𝐌 𝐇𝐚𝐥𝐥𝐮𝐜𝐢𝐧𝐚𝐭𝐢𝐨𝐧𝐬” at 𝐍𝐞𝐮𝐫𝐈𝐏𝐒 2025 in San Diego this Friday, December 5!

Finally, I would like to thank my wonderful collaborators: Yuyan Ge @yuyan_ge, Edgar Dobriban @EdgarDobriban, Hamed Hassani @HamedSHassani, René Vidal @vidal_rene.

The long-term goal is to develop helpful, guided AI agents that can collaborate and cooperate with humans and other agents for better decision making, especially in high-stakes domains, such as helping doctors make accurate diagnoses in challenging cases.

While it seems straightforward, it turns out that getting an LLM to express its own uncertainty is a major challenge. To make it work, we had to leverage uncertainty quantification techniques such as conformal prediction to obtain calibrated measures of uncertainty.
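
The tweet does not say which conformal procedure the paper uses. As a generic illustration only, here is a minimal split conformal prediction sketch for classification: a held-out calibration set fixes a score threshold so that, under exchangeability, the prediction set contains the true label with probability at least 1 - alpha. The nonconformity score (one minus the model's probability for the true label), the function names, and the toy numbers are all assumptions for this sketch, not the Conformal Information Pursuit implementation.

```python
import math
from typing import List, Sequence


def conformal_quantile(scores: Sequence[float], alpha: float) -> float:
    """Finite-sample-corrected (1 - alpha) empirical quantile of calibration scores."""
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))  # rank of the threshold score (1-indexed)
    if k > n:
        return math.inf  # too few calibration points: every label must be kept
    return sorted(scores)[k - 1]


def calibrate(cal_probs: Sequence[Sequence[float]], cal_labels: Sequence[int], alpha: float) -> float:
    """Threshold from a held-out calibration set (assumed score: 1 - p(true label))."""
    scores = [1.0 - probs[y] for probs, y in zip(cal_probs, cal_labels)]
    return conformal_quantile(scores, alpha)


def prediction_set(probs: Sequence[float], qhat: float) -> List[int]:
    """All labels whose nonconformity score falls within the calibrated threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= qhat]


# Toy example with made-up model probabilities: two labels, nine calibration points.
CAL_PROBS = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.6, 0.4], [0.3, 0.7],
             [0.2, 0.8], [0.55, 0.45], [0.65, 0.35], [0.4, 0.6]]
CAL_LABELS = [0, 0, 0, 0, 1, 1, 1, 0, 0]

if __name__ == "__main__":
    qhat = calibrate(CAL_PROBS, CAL_LABELS, alpha=0.2)
    print(prediction_set([0.5, 0.5], qhat))  # -> [0, 1]  (uncertain input: both labels kept)
    print(prediction_set([0.9, 0.1], qhat))  # -> [0]     (confident input: one label)
```

The size of the prediction set acts as the calibrated uncertainty signal: a larger set means the model is less sure.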

In an interactive QA setting, this means that at each iteration, the LLM agent selects the most informative question, i.e., the one that reduces uncertainty the most. Based on the answer, it selects the next question, and so on, until it is confident enough to make a prediction.

We begin by having LLM agents play a game called 20 Questions, where one LLM is thinking of some object, and the other has to guess the object by asking a small number of questions. The statistical principle that describes this process is known as Information Gain.
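
As a toy illustration of the information-gain principle in 20 Questions, and of the greedy question-by-question loop described in the interactive QA tweet, the sketch below scores yes/no questions by their expected reduction in entropy over a small, fully known set of candidate objects, then repeatedly asks the best one until a single candidate remains. It assumes deterministic, truthful answers and exact probabilities; the names (`ANIMALS`, `QUESTIONS`, `play`) are hypothetical, and this is not the Conformal Information Pursuit method itself, which per the thread relies on LLMs and conformal prediction to estimate uncertainty.

```python
import math
from typing import Callable, Dict, List

# Hypothetical representation: a question is a yes/no predicate over candidate objects.
Question = Callable[[str], bool]


def entropy(probs: List[float]) -> float:
    """Shannon entropy in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def information_gain(beliefs: Dict[str, float], question: Question) -> float:
    """Expected drop in entropy of the belief over candidates after hearing the answer."""
    total = sum(beliefs.values())
    gain = entropy([w / total for w in beliefs.values()])
    for answer in (True, False):
        branch = [w for c, w in beliefs.items() if question(c) == answer]
        mass = sum(branch)
        if mass > 0:
            # Weight each branch's posterior entropy by the probability of that answer.
            gain -= (mass / total) * entropy([w / mass for w in branch])
    return gain


def best_question(beliefs: Dict[str, float], questions: Dict[str, Question]) -> str:
    """Greedy step: the question with the largest expected information gain."""
    return max(questions, key=lambda name: information_gain(beliefs, questions[name]))


def play(secret: str, beliefs: Dict[str, float], questions: Dict[str, Question]):
    """Ask greedy questions until one candidate remains; answers are assumed truthful."""
    beliefs, questions, asked = dict(beliefs), dict(questions), []
    while len(beliefs) > 1 and questions:
        name = best_question(beliefs, questions)
        ask = questions.pop(name)
        answer = ask(secret)  # the answering player's (truthful) reply
        beliefs = {c: w for c, w in beliefs.items() if ask(c) == answer}
        asked.append(name)
    return asked, beliefs


# Toy game (hypothetical objects and questions), uniform prior over four animals.
ANIMALS = {"cat": 1.0, "dog": 1.0, "eagle": 1.0, "salmon": 1.0}
QUESTIONS: Dict[str, Question] = {
    "Can it fly?": lambda c: c == "eagle",
    "Is it a mammal?": lambda c: c in {"cat", "dog"},
    "Does it bark?": lambda c: c == "dog",
    "Does it live in water?": lambda c: c == "salmon",
}

if __name__ == "__main__":
    print(play("dog", ANIMALS, QUESTIONS))
    # -> (['Is it a mammal?', 'Does it bark?'], {'dog': 1.0})
```

"Is it a mammal?" is asked first because it splits the four candidates evenly (1 bit of expected gain), while each single-animal question yields only about 0.81 bits.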

With today’s advances in Large Language Models, an interesting question is whether AI agents can interact the way humans do. Can LLM agents seek information dynamically, as humans do? We explore this question in our work: Conformal Information Pursuit!

As humans, we often solve tasks together interactively. Imagine you are feeling sick and go to the doctor. To make an accurate diagnosis, the doctor has to learn some information from you by asking you a sequence of questions.

Concept cells stitch together, one by one, in imagination and memory. For instance, “Shrek and Jennifer Aniston walk into a bar. … Maybe Shrek orders a beer,” suggested researcher Pieter Roelfsema. As you read this, it’s likely that concept cells “are building something in your

quantamagazine.org

Individual cells in the brain light up for specific ideas. These concept neurons, once known as “Jennifer Aniston cells,” help us think, imagine and remember episodes from our lives.

PaCE: Parsimonious Concept Engineering for Large Language Models "we propose Parsimonious Concept Engineering (PaCE), a novel activation engineering framework for alignment. First, to sufficiently model the concepts, we construct a large-scale concept dictionary in the

🔍 Curious about how to interpret and steer your LLM? Want to accurately engineer your LLM's activation? 🚀 Excited to introduce 𝐏𝐚𝐂𝐄: 𝐏𝐚𝐫𝐬𝐢𝐦𝐨𝐧𝐢𝐨𝐮𝐬 𝐂𝐨𝐧𝐜𝐞𝐩𝐭 𝐄𝐧𝐠𝐢𝐧𝐞𝐞𝐫𝐢𝐧𝐠 𝐟𝐨𝐫 𝐋𝐚𝐫𝐠𝐞 𝐋𝐚𝐧𝐠𝐮𝐚𝐠𝐞 𝐌𝐨𝐝𝐞𝐥𝐬! We generate a concept

Penn Engineering is pleased to announce AI Month, a four-week series of events in April touching on AI's many facets and impact on engineering and technology. See the full list of events here: https://t.co/PFu6gnHzLA

#AIMonthatPenn

Excited to see an executive order on pushing safe, secure, and trustworthy AI. Even more so because the order targets key current risks and tangible interventions to take today, unlike having a sole focus on future existential risks. (1/4) https://t.co/iEewS5DfAI

Come check out our poster on linearizing and clustering data on nonlinear manifolds using an unsupervised/self-supervised approach! The method is simple, fast, and can be trained on a gaming GPU, yet it achieves state-of-the-art clustering on CIFAR-10, CIFAR-20, CIFAR-100, and even TinyImageNet-200. See you at Nord 108

🏗️ As we wrap up summer, construction on Penn Engineering's Amy Gutmann Hall is making incredible progress. Learn more about the building, which complements the School's IDEAS Signature Initiative, at 🔗 https://t.co/XsFhmcrbST 🎥 by @earthcam
