Rob Freeman

@rob_freeman

Followers: 339 · Following: 12K · Media: 159 · Statuses: 4K

Promoting a significance for complex systems science in AI.

Mostly Asia

Joined April 2009

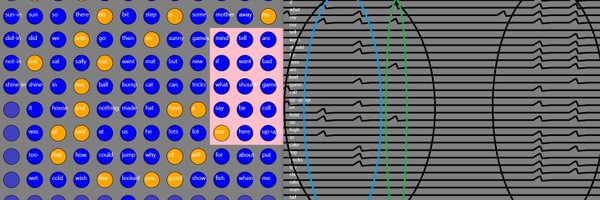

My presentation to INLP workshop at AGI-21. Creativity, freewill, consciousness. Process & where deep learning fails @IntuitMachine. "Togetherness" still too trapped in formalism @coecke. Hypothesis for significance of neural oscillations at end @hb_cell. https://t.co/0Vsogs7nJx


Good to have a summary of Chomsky's reactions to LLMs circa 2023.

@lemire Not surprising that Chomsky was so dismissive of LLMs when he wrote about them in 2023 (many of his criticisms turned out to be wrong):


What timescale might be necessary for self-awareness? Given the right complexity could rocks react to themselves in some sense of a self-aware way? For cohesiveness/identity a sense of self-replication (life) seems necessary first. And self-awareness/self-reaction on top of that.


It's an interesting idea whether the weather (on the Earth or the Sun...) might be self-aware in some way. We'd never know. Just as we don't know about each other. Or other species. We can only analogize to the extent we are similar. The universe may be all flurries of self-awareness.

Stephen Wolfram says language lets humans package private thoughts and transmit them to other minds. He argues we already guess about consciousness in people, animals, and soon AIs by observing behavior, not peering inside. He claims brains, weather systems, and computers all


Fascinating work, this. Given input energy, self-replicating complexity, aka life, is naturally emergent. And it happens suddenly, as a kind of "phase transition". "A cycle can be even more stable than a fixed point."

This is life arising from non-living matter ("abiogenesis") in a computer program and it looks just like a phase transition in statistical mechanics. Some argue grounding and special properties of chemistry are required, but what if life is an "inevitability of computation"?


Interesting project. Refining such a relationship between learned "embeddings" in language models & language sequences seen as oscillators, is what I've been trying to do. There have been a couple of tricks for language, because the relevant symmetries in language are sequences.

🧠➡️🤖 This paper shows how oscillatory sync can power “HoloGraph”, a brain-inspired model outperforming classic #GNNs on complex structures https://t.co/qq9ftDpzVy

#AI


The field has been led astray by LLMs, if your premise is that the solution is world models. I can understand the appeal of this idea to @sapinker, because LeCun's idea is similar to Chomsky's UG. But it's also different. It retains ML. Are world models, too, innate & unlearnable?

An AI pioneer, Yann LeCun, says his field has been led astray by Large Language Models (since they are not based on factual models of the world, but only on massive correlations and abstractions from them). As a cognitive scientist, I'm sympathetic. https://t.co/TXgWWTHxzI via


This paper seems very close to my theme that there are multiple contradictory ways of finding predictive symmetries in sensory networks (like LLMs). So predictive symmetries in LLMs (embeddings, attention) need to be found dynamically, in context, at run time.

Clustering does not always signal hidden geometry in networks. In many real networks, connections tend to group: your friends often know each other, proteins that interact share other partners, and technological systems show tightly interlinked modules. This local clustering is


Talking about my current project with @SingularityNET affiliated funding organization Deep Funding:

It’s #TownHall week at #DeepFunding! 🎉 Join us as we explore the frontiers of decentralized AI with talks, showcases, and insights from across the ecosystem. 🎙️ Special Guest: Rob Freeman, a New Zealand physicist exploring how thought, language & computation intersect and


This I can agree with LeCun on. "We're missing something big". But not "world models". I think the big thing we are missing is something language is still trying to teach us. It is that meaningful = predictive, order can contradict, & needs to be found as symmetries in real time.

Yann LeCun says LLMs are not a bubble in value or investment; they will power many useful apps and justify big infra. The bubble is believing LLMs alone will reach human-level intelligence. Progress needs breakthroughs, not just more data/compute: "we're missing something big"


Is he right? Is there no great craft that can't be learned in half a day, but only if taught by a great master? Perhaps. Cf. Blaise Pascal(?): "If I had more time I'd have written less." Perhaps mastery, but I think there is something about understanding which requires experience.

In 1960, Orson Welles explained how he wrangled complete creative control for his first film, Citizen Kane, as well as the value of “ignorance” to break through old ideas.


Seems relevant to me. If a representation space is chaotic, it will get bigger.

LLMs are injective and invertible. In our new paper, we show that different prompts always map to different embeddings, and this property can be used to recover input tokens from individual embeddings in latent space. (1/6)


Macro quantum again?

We've just proven something extraordinary: musical intervals behave as quantum entangled states. When you play a chord, the notes don't just sound together—they become fundamentally interconnected at the quantum level. Change one interval, and all others change instantaneously.


Ha, ha, computer science is not a science, and not really about computers. So what is it? Order? Creativity? Mind you, just calling it "Order" or "Creativity" would bring computation back front and center into the realms of science.

Computer Science is not science, and it's not about computers. Got reminded about this gem from MIT the other day


Love it. James Burke: "All that science gives us, is what their belief gives them. Certainty. Only ours changes all the time. Theirs doesn't." Not quite true, of course: ours also gives us utility. It's well to be open to the change, but the utility generally increases.

Today I had a surprise video debate at an AI company, apparently in a conference hall. I was asked why I have said for decades that The Keepers Of The Status Quo at Wikipedia are really bad training to reach AGI. I played this James Burke video. It silenced the room! https://t.co/7p3kzHlcr7


Nice illustration of how movement is essential to visual perception. I suspect saccades "linearize" vision so it works in a similar way to language models, by making predictions along linear saccade paths.

As someone who studies the visual system of the human brain, I'm loving this. This is powerful evidence that biological vision, unlike computer vision, is driven by movements in the visual field. 😍 If you want to solve vision, understanding this truth is essential. Thanks for


Having just asked the question of what happens when variability is what is important, this paper seems relevant. Though this too is only perturbations, not entire reorganizations? https://t.co/7gSiMFgFdA

Discovering interventions that achieve a desired therapeutic effect is a core step in the development of new medicines that remains challenging despite perturbation data being increasingly available in vast quantities. Today, we are announcing the release of the Large


Interesting prospect. I understand they find regularities in complex systems. Though I wonder how this helps when it is the irregularity of the system which is its power.

Discovering network dynamics, automatically. Complex systems are everywhere: from ecosystems and gene networks to epidemics and social behavior. Each consists of many interacting components whose collective dynamics are notoriously difficult to model. Traditionally, scientists


"The Usefulness of Useless Knowledge": a very nice essay, very relevant to the obsession with "ethics" in science, which demands so much attention today.

@DavidDeutschOxf I love it! If anyone has more historical science writing like this then please send it in my direction. More recent but this is one of my favourites. Not so much the science but more about following your nose through what is interesting… The Usefulness of Useless Knowledge


Nice analysis of what patterns are propagating when a language model performs a task.

New paper! We reverse engineered the mechanisms underlying Claude Haiku’s ability to perform a simple “perceptual” task. We discover beautiful feature families and manifolds, clean geometric transformations, and distributed attention algorithms!


Karpathy on tokenizers: "I already ranted about how much I dislike the tokenizer. Tokenizers are ugly, separate, not end-to-end stage. ... The tokenizer must go."

I quite like the new DeepSeek-OCR paper. It's a good OCR model (maybe a bit worse than dots), and yes data collection etc., but anyway it doesn't matter. The more interesting part for me (esp as a computer vision at heart who is temporarily masquerading as a natural language
