Ryan Kosai

@rkosai

Followers

484

Following

4K

Media

79

Statuses

2K

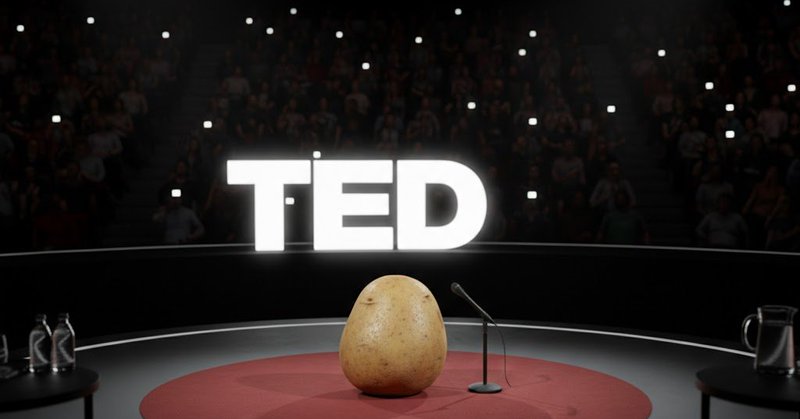

CTO @readysetpotato, an autonomous research agent for biology. 20 years in software, principal engineer, 3x CTO.

Seattle, WA

Joined May 2008

I learned about programming from my dad. That's how I know the first rule of software is to number your punch cards in case you drop them.

There's this kind of sociopathic defacement of civil society in the name of "achievement" where we form a lipid bilayer of ego membrane, something on the outside that assumes this is all par for the course, and something internal that feels like we're winking at one another

If we are successful in our goal, millions of AI scientists running in a high-fidelity environment will accelerate scientific discovery to an unfathomable scale.

This is the end of a long thread. Potato is building an environment for biological sciences. The environment includes tools to search and review scientific literature, build wet lab protocols, create and run bioinformatics scripts, and drive robots for full lab automation.

While we've built an AI agent on top of existing frontier models, we continue to build out the environment for an AI-powered future: a high-fidelity world model for scientific research.

As suggested earlier, we expect all of this functionality to be subsumed into the frontier models from the major LLM providers.

The system also uses an LLM as a judge to produce numerical scores on a rubric with categories such as completion, correctness, and efficiency. These scores form an early framework for measuring how well the agent used the environment, as well as how well it explains, justifies, and…
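
A minimal sketch of how such rubric scores might be parsed and combined, assuming the judge model is asked to reply with JSON holding a 1 to 10 score per category. The JSON shape, the 1 to 10 scale, the equal weights, and the names `RUBRIC`, `RubricScore`, and `parse_judge_output` are all hypothetical, and the judge call itself is left out.

```python
import json
from dataclasses import dataclass
from typing import Dict, Mapping

# Categories named in the thread; equal weights are a hypothetical default.
RUBRIC: Dict[str, float] = {"completion": 1.0, "correctness": 1.0, "efficiency": 1.0}


@dataclass
class RubricScore:
    scores: Dict[str, float]
    rationale: str

    def overall(self, weights: Mapping[str, float] = RUBRIC) -> float:
        """Weighted mean of the per-category scores."""
        total_weight = sum(weights.values())
        return sum(self.scores[c] * w for c, w in weights.items()) / total_weight


def parse_judge_output(raw: str) -> RubricScore:
    """Parse a judge reply assumed to look like {"scores": {...}, "rationale": "..."}."""
    data = json.loads(raw)
    scores = {c: float(data["scores"][c]) for c in RUBRIC}
    for category, value in scores.items():
        if not 1.0 <= value <= 10.0:
            raise ValueError(f"{category} score {value} is outside the 1-10 scale")
    return RubricScore(scores=scores, rationale=data.get("rationale", ""))


raw = '{"scores": {"completion": 8, "correctness": 7, "efficiency": 6}, "rationale": "..."}'
print(parse_judge_output(raw).overall())  # prints 7.0
```

Validating the scale at parse time is one way to catch malformed judge replies before they skew aggregate comparisons between runs.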

Once the agent has ended its session, the system automatically reviews the full history and artifacts to produce a complete summary report. The report covers the scientific context, the experiments run, and a summary of the evaluated data.

In the long term, a more sustainable solution is to allow automated recursive backtracking, so the agent can abandon its current trajectory and try again from an earlier iteration. However, this approach requires very robust instantaneous scoring of progress toward the final outcome,…
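
To make the trade-off concrete, here is a minimal depth-first backtracking sketch in which a scoring function stands in for the instantaneous scoring of progress. Everything here is hypothetical: trajectories are tuples of step labels, and `propose`, `score`, and `is_goal` are placeholders for model-driven components. The search is only as good as `score`; a scorer that is optimistic about a dead end wastes effort exploring it before the search backs out, which is what the toy example shows.

```python
from typing import Callable, Optional, Sequence, Tuple

Trajectory = Tuple[str, ...]  # hypothetical: a run as a sequence of step labels


def backtrack(
    state: Trajectory,
    propose: Callable[[Trajectory], Sequence[str]],
    score: Callable[[Trajectory], float],
    is_goal: Callable[[Trajectory], bool],
    threshold: float,
    max_depth: int,
) -> Optional[Trajectory]:
    """Depth-first search that prunes low-scoring steps and backs up from dead ends."""
    if is_goal(state):
        return state
    if len(state) >= max_depth:
        return None  # dead end: the caller resumes from an earlier iteration
    # Score every candidate next step once, then explore the most promising first.
    scored = [(score(state + (step,)), state + (step,)) for step in propose(state)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    for value, candidate in scored:
        if value < threshold:
            break  # candidates are sorted, so all remaining ones score lower
        found = backtrack(candidate, propose, score, is_goal, threshold, max_depth)
        if found is not None:
            return found
    return None


# Toy example: the scorer is over-optimistic about "x", which leads nowhere.
scores = {("x",): 0.9, ("a",): 0.6, ("x", "b"): 0.7, ("a", "b"): 1.0}
result = backtrack(
    (),
    propose=lambda s: ["x", "a"] if not s else ["b"],
    score=lambda s: scores.get(s, 0.0),
    is_goal=lambda s: s == ("a", "b"),
    threshold=0.5,
    max_depth=2,
)
print(result)  # prints ('a', 'b')
```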

Branching can also be done post hoc: even after the agent has completed a run, a user can revisit the timeline and create a branch at any point along it.

To mitigate this issue in the short term, we let the user in the loop branch the timeline after any thinking phase. During a branching event, the historical record is preserved, but the model is prompted to reevaluate its decision with additional user suggestions and…
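
One way to model this is as a tree of steps rather than a flat list: each step points to its parent, and a branch is a new child of an earlier step, so the original continuation stays in the record. This is a minimal sketch under that assumption. The `Timeline` and `Step` names and the step kinds are hypothetical, and the model call that would consume `history()` is omitted. Because every step stays addressable, the same structure also allows branching after a run has finished.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Step:
    id: int
    kind: str                 # hypothetical labels: "thinking", "tool", "user_suggestion"
    content: str
    parent_id: Optional[int]  # None for the first step of a run


class Timeline:
    def __init__(self) -> None:
        self._next_id = itertools.count()
        self.steps: Dict[int, Step] = {}

    def append(self, parent_id: Optional[int], kind: str, content: str) -> Step:
        step = Step(next(self._next_id), kind, content, parent_id)
        self.steps[step.id] = step
        return step

    def branch(self, after_id: int, suggestion: str) -> Step:
        """Fork after an existing step; steps already recorded after it are untouched."""
        return self.append(after_id, "user_suggestion", suggestion)

    def history(self, step_id: int) -> List[Step]:
        """Root-to-step path: the context the model re-reads when resuming a branch."""
        path: List[Step] = []
        current: Optional[int] = step_id
        while current is not None:
            step = self.steps[current]
            path.append(step)
            current = step.parent_id
        return path[::-1]


t = Timeline()
plan = t.append(None, "thinking", "initial plan")
tool = t.append(plan.id, "tool", "tool result")
original = t.append(tool.id, "thinking", "original decision")
alt = t.branch(plan.id, "user suggestion: try a different tool")
print([s.content for s in t.history(alt.id)])
```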

One major challenge to building a robust agent in the current era is that frontier model outputs still exhibit both high variability and sensitivity to input conditions. Practically speaking, this means that a non-trivial number of decisions made by the model are wrong or…

We also compress the historical context using the method published by Factory AI in "Compressing Context", and have found it to be very effective and tunable across context window sizes. https://t.co/T9agXlxzJ5
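
The sketch below is a generic, threshold-triggered version of incremental context compression. It is not a reproduction of Factory AI's published method. Once the context exceeds `max_tokens`, the oldest messages are folded into a running summary until only about `retain_tokens` of recent messages remain verbatim. Those two thresholds are what let a scheme like this be tuned to different context window sizes. The `summarize` callback (in practice an LLM call) and the whitespace token count are placeholder assumptions.

```python
from typing import Callable, List

Summarizer = Callable[[str, List[str]], str]  # (previous summary, dropped messages) -> new summary


def count_tokens(text: str) -> int:
    # Placeholder: whitespace-delimited words. A real system would use the model's tokenizer.
    return len(text.split())


class CompressedContext:
    def __init__(self, summarize: Summarizer, max_tokens: int, retain_tokens: int) -> None:
        if not 0 < retain_tokens < max_tokens:
            raise ValueError("need 0 < retain_tokens < max_tokens")
        self.summarize = summarize
        self.max_tokens = max_tokens
        self.retain_tokens = retain_tokens
        self.summary = ""
        self.messages: List[str] = []

    def total_tokens(self) -> int:
        return count_tokens(self.summary) + sum(count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        if self.total_tokens() > self.max_tokens:
            self._compress()

    def _compress(self) -> None:
        # Keep the newest messages verbatim while they fit in the retained budget.
        kept: List[str] = []
        budget = self.retain_tokens
        for message in reversed(self.messages):
            cost = count_tokens(message)
            if cost > budget:
                break
            kept.append(message)
            budget -= cost
        kept.reverse()
        dropped = self.messages[: len(self.messages) - len(kept)]
        if dropped:
            # Only the newly dropped span is passed to the summarizer with the old summary.
            self.summary = self.summarize(self.summary, dropped)
        self.messages = kept

    def render(self) -> List[str]:
        header = [f"[summary] {self.summary}"] if self.summary else []
        return header + self.messages


# Stub summarizer that ignores the previous summary and just counts dropped messages.
ctx = CompressedContext(lambda prev, dropped: f"{len(dropped)} earlier steps", max_tokens=10, retain_tokens=4)
for msg in ["a b c", "d e f", "g h i", "j k l"]:
    ctx.add(msg)
print(ctx.render())  # prints ['[summary] 3 earlier steps', 'j k l']
```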

This has the benefit of compressing both the tool request and response into a single chunk of information, eliminating duplicated content. Internal testing has also shown the XML structure to be easier for the latest models to handle than the native JSON formats used for…

Even with a dedicated tool context, information accumulates rather quickly. To further simplify the context window for each thinking phase, we rewrite the entire history into concise XML blocks representing previous tool executions.
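
A minimal sketch of that rewrite using Python's standard `xml.etree.ElementTree`. Each tool request and its response collapse into one element, so the arguments and the (truncated) result appear once instead of as separate request and response messages. The element and attribute names, the `ToolCall` record, and the 200-character truncation are hypothetical choices, not the format used in the thread.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ToolCall:
    name: str
    arguments: Dict[str, str]
    result: str


def to_xml_block(call: ToolCall, max_result_chars: int = 200) -> str:
    """Merge one tool request and its response into a single XML element."""
    element = ET.Element("tool_execution", name=call.name)
    for key, value in call.arguments.items():
        ET.SubElement(element, "arg", name=key).text = value
    result = call.result
    if len(result) > max_result_chars:
        result = result[:max_result_chars] + "..."  # keep the rewritten history concise
    ET.SubElement(element, "result").text = result
    return ET.tostring(element, encoding="unicode")


def rewrite_history(calls: List[ToolCall]) -> str:
    """Render the whole tool history as consecutive XML blocks for the next thinking phase."""
    return "\n".join(to_xml_block(call) for call in calls)


history = [ToolCall("search_literature", {"query": "kinase inhibitors"}, "3 matching papers")]
print(rewrite_history(history))
```

ElementTree also escapes characters such as `&` and `<` in arguments and results, which a hand-built string template would not do.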

Storing the artifacts in a structured format also gives the agent a medium-term “memory”: it can retrieve specific details by key without automatically consuming valuable context space.
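
A minimal in-memory sketch of such a store, assuming artifacts are JSON-like dictionaries. The agent's context holds only a compact index of keys and one-line summaries, and a specific field is fetched by key only when needed. The `ArtifactStore` class, its key format, and its methods are hypothetical; a production system would presumably persist artifacts rather than keep them in a dict.

```python
import uuid
from typing import Any, Dict, Optional


class ArtifactStore:
    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, Any]] = {}

    def put(self, kind: str, data: Dict[str, Any], summary: str) -> str:
        """Store an artifact and return the key the agent will use to refer to it."""
        key = f"{kind}-{uuid.uuid4().hex[:8]}"
        self._items[key] = {"summary": summary, "data": data}
        return key

    def index(self) -> str:
        """One line per artifact: cheap enough to keep in the context window."""
        return "\n".join(f"{key}: {item['summary']}" for key, item in self._items.items())

    def get(self, key: str, field_name: Optional[str] = None) -> Any:
        """Fetch a whole artifact, or just one field of it, by key."""
        data = self._items[key]["data"]
        return data if field_name is None else data[field_name]


store = ArtifactStore()
key = store.put("docking", {"ligand": "ligand_1", "score": -7.2, "poses": ["..."]}, "docking run for ligand_1")
print(store.index())
print(store.get(key, "score"))
```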

Likewise, evaluating literature involves search and reranking, scanning documents for relevant facts, and finding corroboration or divergence between documents. All of these are token-heavy activities that benefit from being externalized in a tool-oriented architecture.

This context is crucial for accurately setting parameters, such as in protein-ligand docking, where environmental conditions directly impact affinity scoring.
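
As one concrete illustration of that sensitivity, the standard relation between binding free energy and the dissociation constant, ΔG° = RT ln Kd (with Kd relative to a 1 M standard state), contains temperature explicitly. The sketch below converts a fixed, hypothetical free energy into Kd at room and physiological temperature. Holding ΔG° constant across temperatures is a simplifying assumption, and docking scores are only approximate free-energy estimates. The example therefore shows the direction of the effect, not how any particular docking tool handles it.

```python
import math

R_KJ = 8.314462618e-3  # gas constant, kJ/(mol*K)


def kd_from_dg(dg_kj_per_mol: float, temperature_k: float) -> float:
    """Dissociation constant in M (1 M standard state) from dG = RT ln(Kd)."""
    return math.exp(dg_kj_per_mol / (R_KJ * temperature_k))


def dg_from_kd(kd_molar: float, temperature_k: float) -> float:
    """Inverse relation: binding free energy in kJ/mol from Kd in M."""
    return R_KJ * temperature_k * math.log(kd_molar)


# Same hypothetical free energy, two environmental temperatures.
for temperature in (298.15, 310.15):
    print(f"{temperature} K: Kd = {kd_from_dg(-40.0, temperature):.2e} M")
```

With these inputs the Kd at 310.15 K comes out roughly 1.9 times larger than at 298.15 K, so the same score implies weaker binding at the higher temperature.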

For example, we've found that in silico simulation requires detailed module documentation, but also benefits heavily from scientific context drawn from the literature.

Instead, we return just the necessary results, with a reference ID to later access the tool's internal reasoning and artifacts.
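
A minimal sketch of that pattern, assuming each tool returns its answer together with its internal trace and artifacts. The wrapper archives the full run and hands the agent only the result plus a reference ID it can use to inspect the run later. `ToolRun`, `ToolArchive`, the `name#n` ID format, and the `review_literature` example tool are all hypothetical.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolRun:
    result: str                                              # the part the main agent needs
    reasoning: List[str] = field(default_factory=list)       # internal trace, kept out of context
    artifacts: Dict[str, Any] = field(default_factory=dict)  # files, tables, intermediate data


class ToolArchive:
    def __init__(self) -> None:
        self._ids = itertools.count()
        self._runs: Dict[str, ToolRun] = {}

    def call(self, name: str, tool: Callable[..., ToolRun], **kwargs: Any) -> Dict[str, str]:
        """Run a tool, archive everything, and return only the compact part."""
        run = tool(**kwargs)
        ref = f"{name}#{next(self._ids)}"
        self._runs[ref] = run
        return {"ref": ref, "result": run.result}

    def inspect(self, ref: str) -> ToolRun:
        """Later access to the tool's internal reasoning and artifacts."""
        return self._runs[ref]


def review_literature(query: str) -> ToolRun:  # hypothetical stand-in for a token-heavy tool
    return ToolRun(
        result=f"summary of findings for '{query}'",
        reasoning=["searched", "reranked", "compared sources"],
        artifacts={"documents": ["doc_a", "doc_b"]},
    )


archive = ToolArchive()
reply = archive.call("lit_review", review_literature, query="protein-ligand docking")
print(reply)
print(archive.inspect(reply["ref"]).reasoning)
```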
