Reece Keller

@rdkeller

Followers: 204 · Following: 28 · Media: 7 · Statuses: 14

CS+Neuro @CarnegieMellon. PhD student with @xaqlab and @aran_nayebi, working on autonomy in embodied agents.

Pittsburgh, PA

Joined February 2021

RT @aviral_kumar2: Given the confusion around what RL does for reasoning in LLMs, @setlur_amrith & I wrote a new blog post on when RL simpl….


RT @ellisk_kellis: New paper: World models + Program synthesis by @topwasu.1. World modeling on-the-fly by synthesizing programs w/ 4000+ l….


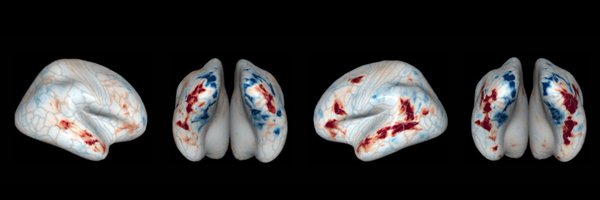

5/ First, we construct two environments extending the dm-control suite: one that captures the basic physics of zebrafish ecology (reactive fluid forces and drifting currents), and one that replicates the head-fixed experimental protocol from @muyuuyum, @MishaAhrens et al. (2019).


1/ I'm excited to share recent results from my first collaboration with the amazing @aran_nayebi and @Leokoz8! We show how autonomous behavior and whole-brain dynamics emerge in embodied agents with intrinsic motivation driven by world models.
