Qi Sun

@qisun0

Followers: 699 · Following: 447 · Media: 5 · Statuses: 101

Assistant Professor at New York University directing https://t.co/Tm0Ri66ZrM

New York

Joined March 2020

Imagine thousands of tiny paint fairies swarming over an image, massaging and repainting it until it's flawless, and 25× smaller than before. Intel's incredible new technique could change how we store and render graphics forever. I got goosebumps. Full video:

Our #SIGGRAPH2025 paper on efficient image representation for rapid random memory access on GPUs. Great collaboration with team Intel @StavrosDiol, @straintensor, @akshayjin, @ASochenov, @Kaplanyan and kudos to my students @YunxiangSJTU, Bingxuan, and @10kenchen

If you are attending #SIGGRAPH2025 in Vancouver check out our paper Image-GS, which explores various interesting aspects of compressing images and textures using 2D Gaussians. https://t.co/fJcnGLBjwx

Check out what our team is presenting at this year's SIGGRAPH and HPG, including our SIGGRAPH paper Image-GS, which I will share more about soon! https://t.co/JeHjranAxp

community.intel.com

Visual Efficiency for Intel’s GPUs At Intel, we are reimagining the future of graphics—making it more nimble, accessible, and power-efficient. As the landscape of GPU technology rapidly evolves,...

Congratulations to my PhD student @YunxiangSJTU on the paper & the presentation and to the fantastic collaboration with team Stanford: @GordonWetzstein, Nan, and Conor.

GazeFusion won both the Best Paper Award🏆and the Best Presentation Award🏆at ACM SAP 24 @ACM_SAPercept! Diffusion-generated images and videos can now be controlled to ensure that viewers' attention aligns with the intended design target. See https://t.co/1CUUn7G1H3

I'll be giving a talk on our PEA-PODs work at SIGGRAPH on Monday! Come by if you're interested in VR/AR, displays, and perceptual studies. I'm presenting on behalf of my coauthors Thomas Wan, @nathanmatsuda, Ajit Ninan, @AlexChapiro, @qisun0. 10:45am MDT session @ Mile High 3C

Tune in Monday @siggraph for @10kenchen’s award-winning paper talk. Our study brings interpretable results on how much power can be saved using perceptual rendering techniques for displays (LCD, OLED, w/wo eye tracking, and more). See our page for details:

Great collaboration with folks from @Meta: Alex Chapiro, Thomas Wan, @nathanmatsuda, and Ajit Ninan. Project Page:

🎉Congratulations to my PhD student, Kenny Chen @10kenchen, for receiving an Honorable Mention Award at #SIGGRAPH2024! This is our 3rd @siggraph Tech Paper Award so far. We measure & optimize visual perception quality gained from consuming each "Watt" of energy for XR displays.

Our group has an open Ph.D. position. Visit our website to learn more about our research: https://t.co/kph08R7MRQ. If you know someone looking for a Ph.D. position, please tell them about our graphics group!

@SIGGRAPHAsia @budmonde Great collaboration as usual between my lab and the NVIDIAns @killerooo & Rachel Brown

🚗Attending @SIGGRAPHAsia? Join a can't-miss talk by @budmonde on how car head-up displays (HUDs) should be designed to enhance safety by enabling faster driver reactions. We found that saccadic eye movements can significantly accelerate vergence motion in 3D.

I am looking for PhDs, Postdocs and Interns to explore exciting research in Optics and Generative AI in my new @UNC lab. Apply now: https://t.co/qTO7HIk0yh Please share and spread the news :)

cs.unc.edu

Why Apply to UNC for Graduate School? Founded by Turing Award winner Fred Brooks, the Computer Science department at UNC has an outstanding research tradition as one of the oldest computer science...

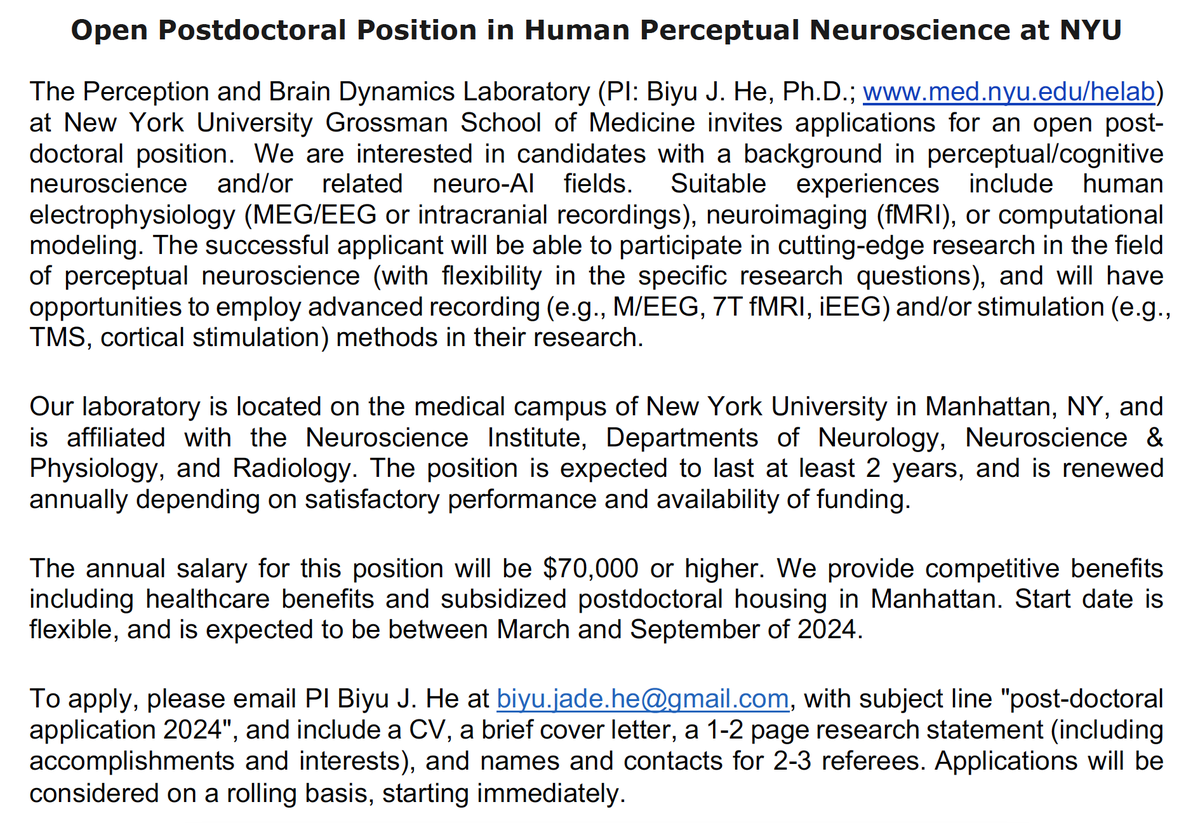

Our lab currently has an open postdoc position in the field of perceptual neuroscience. Specific questions: flexible, with a focus on vision; Techniques: open (fMRI, E/MEG, iEEG, stim, modeling); Salary: $70,000 or higher (with excellent benefits); Start date: flexible in 2024

If you are attending #SIGGRAPH2023, don't miss our e-tech exhibition on imperceptible #VR/#AR display power saving👉 https://t.co/j7jfEWJNPE . Also, come to our paper talks for ergonomic neck saver👉 https://t.co/L5nfVSwjjB and ISMAR Best Paper Fov-#NeRF👉 https://t.co/lLTRnxJ0Ly!

My lab is looking for a postdoc in the area of context-aware augmented reality: https://t.co/UDdu6upBQq. Great opportunity for folks with expertise in ML in mobile-systems or pervasive-sensing contexts (e.g., human activity recognition, edge video analytics, ML for QoS or QoE).

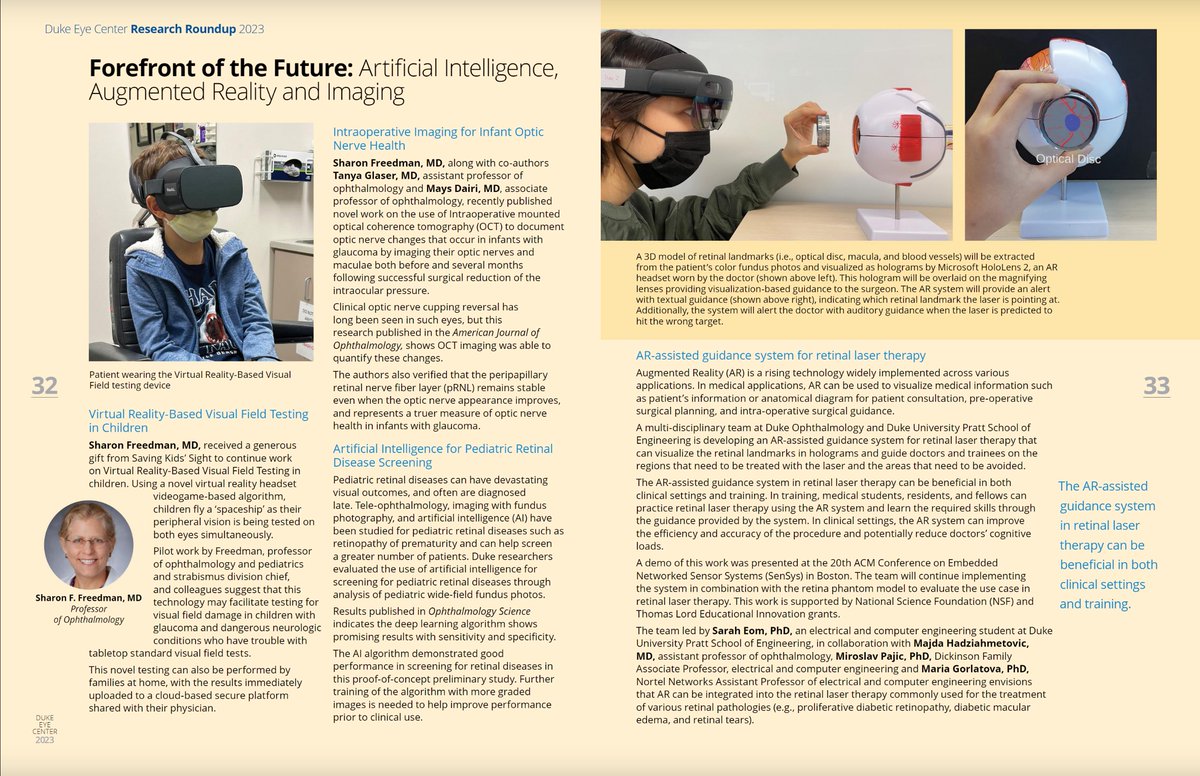

Our work on augmented reality for retinal laser therapy is featured in the 2023 @dukeeyecenter VISION Magazine! Joint project with @miroslav_pajic and Majda Hadziahmetovic, led by my PhD student Sarah Eom. #AugmentedReality

📢Join us at SAP if you are attending #SIGGRAPH2023 in LA and want to spend the weekend beforehand learning about exciting perception research across VR/AR/AI. Beyond the incredible papers, we have lined up renowned keynote speakers from the VR industry and neuroscience!

Registration for ACM SAP'23 is open! The early bird deadline is July 1st. https://t.co/9jTPhA46oQ

Call for Papers: ACM Symposium on Applied Perception, to be held in LA, California, on Aug 5-6th. Deadlines: abstract submission April 14th; paper submission April 21st. https://t.co/RGdOu0w4pV

#HCI #Computing #Graphics #VR

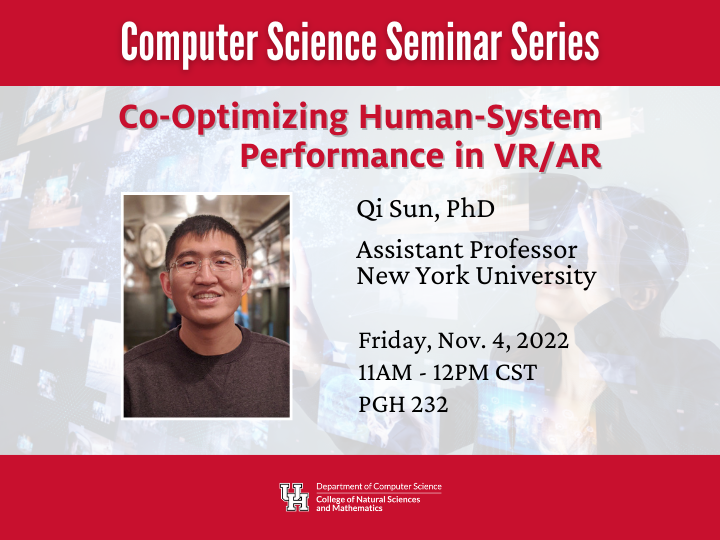

Friday, Nov. 4th! 11AM - 12PM PGH 232 Seminar: Co-Optimizing Human-System Performance in VR/AR, by Dr. Qi Sun @qisun0 | Assistant Professor at New York University, and Director of the Immersive Computing Lab at the Tandon School of Engineering (NYU). https://t.co/Gzh9gCBMMX