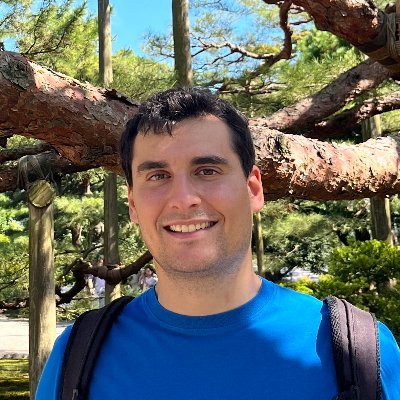

Or Litany

@orlitany

Followers: 3K · Following: 2K · Media: 37 · Statuses: 379

Assistant professor @TechnionLive and Sr. Research Scientist @NVIDIA | I think therefore AI

Joined March 2018

1/ #NVIDIAGTC We’re excited to share that the ChronoEdit-14B model and an 8-step distillation LoRA (4 s/image on H100) are released today. 🤗 Model https://t.co/X3diGAY42p 🤗 Demo https://t.co/2xfiRo6wij 💡ChronoEdit brings temporal reasoning to the image editing task. It achieves SOTA

📢 The Fundamental Generative AI Research (GenAIR) team at NVIDIA is looking for outstanding candidates to join us as summer 2026 interns. Apply via: https://t.co/DARXlEpmUg Email: genair-openings@nvidia.com Group website: https://t.co/mWY7utKK1m 👇

research.nvidia.com

Just landed in Honolulu for ICCV 25! You can read MonSTeR here: https://t.co/E4idFd4PxZ and then find us at Poster Session 3 #1003 🗓 Oct 22 | 11:15 a.m. - 1:15 p.m. HST - Exhibit Hall I

arxiv.org

Intention drives human movement in complex environments, but such movement can only happen if the surrounding context supports it. Despite the intuitive nature of this mechanism, existing research...

TtA (Thrilled to announce) that our paper, "MonSTeR: A Unified Model for Motion, Scene, and Text Retrieval" has been accepted at #ICCV2025 🌋🌺🌴🌊🏄♂️🍹 MonSTeR creates a unified latent space that understands the relationship between text, human motion, and 3D scenes.
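A unified latent space means that text, motion, and scene embeddings can be compared to one another directly. As a minimal, hypothetical sketch (not MonSTeR's actual model or code), assuming each modality has already been encoded into vectors of the same dimension, retrieval reduces to ranking candidates by cosine similarity to the query. The function name `rank_by_cosine` is illustrative only.

```python
import numpy as np


def rank_by_cosine(query, candidates):
    """Hypothetical cross-modal retrieval in a shared embedding space.

    query:      (D,) embedding, e.g. of a text prompt (assumed precomputed).
    candidates: (N, D) embeddings, e.g. of motions or scenes (assumed precomputed).
    Returns (order, scores): candidate indices from most to least similar,
    and the cosine similarity of each candidate to the query.
    """
    # L2-normalize so the dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = c @ q
    # Sort by descending similarity.
    order = np.argsort(-scores)
    return order, scores
```

For example, a text embedding could be scored against a batch of motion embeddings and the top-ranked index taken as the retrieved motion.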

🎓 Interested in a #PhD in machine learning or #AI? The ELLIS PhD Program connects top students with leading researchers across Europe. The application portal opens on Oct 1st. Curious? Join our info session on the same day. Get all the info 👉 https://t.co/0Tq58uexHk

#ELLISPhD

Always wanted to come work with me and my students but lacked funding? You’re out of excuses 🙂 The Azrieli Foundation (@azrielifdn) is sponsoring visiting PhD positions (up to 4 months) and postdocs (up to 3 years).

1/9 Excited to share EditP23! 🎨 Finally, a single tool for ALL your 3D editing needs: ✅ Pose & Geometry Changes ✅ Object Additions ✅ Global Style Transformations ✅ Local Modifications All driven by one simple 2D image edit. It's mask-free ✨ and works in seconds ⚡️. 🧵

Recording of the workshop is now online, big thanks to all the organizers and everyone who attended both in person and online! https://t.co/luWyw0jgWU

neural-bcc.github.io

The second workshop on neural fields beyond conventional cameras, hosted at CVPR 2025.

This Wednesday (1-6PM, Room 106A) @CVPR we have a great lineup of keynote speakers, posters, and spotlights on neural fields and beyond: https://t.co/luWyw0jgWU Have a question you want answered by a panel of experts in the field? Send it to us via: https://t.co/4DOiDDh8Eo

The recordings from our workshop on Open-World 3D Scene Understanding @CVPR are now available! See you @ICCVConference in Honolulu🏄♂️ for the next edition! ➡️ https://t.co/bMc47CMdVR 🌍 https://t.co/4nSXaJHlfp

Join us at OpenSUN3D☀️ workshop this afternoon @CVPR 🚀 📍: Room 105 A 🕰️: 2:00-6:00 pm 🌍: https://t.co/4nSXaJGNpR

@afshin_dn @leto__jean @lealtaixe

Starting now 🤩 Neural Fields Beyond Conventional Cameras workshop, Room 106A @CVPR: https://t.co/luWyw0jgWU

Curious about 3D Gaussians, simulation, rendering and the latest from #NVIDIA? Come to the NVIDIA Kaolin Library live-coding session at #CVPR2025, powered by a cloud GPU reserved especially for you. Wed, Jun 11, 8 a.m.-noon. Bring your laptop! https://t.co/joCH5DDrNk

✨We introduce SuperDec, a new method for creating compact 3D scene representations by decomposing scenes into superquadric primitives! Webpage: https://t.co/H1CmUxoB6E ArXiv: https://t.co/02bRm4EVYC

@BoyangSun @FrancisEngelman @mapo1 @cvg_ethz @ETH_AI_Center
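For readers new to superquadrics, a common way to describe one is the standard inside-outside function F, where F < 1 inside the primitive, F = 1 on its surface, and F > 1 outside. This is the textbook formulation, not necessarily the exact parameterization SuperDec uses; the sketch assumes points are already expressed in the primitive's local frame, with semi-axes (a1, a2, a3) and shape exponents eps1, eps2. The function name is hypothetical.

```python
import numpy as np


def superquadric_inside_outside(points, scale, eps1, eps2):
    """Standard superquadric inside-outside function (illustrative sketch).

    points: (N, 3) points in the primitive's local (canonical) frame (assumed).
    scale:  (a1, a2, a3) semi-axis lengths.
    eps1, eps2: shape exponents; eps1 = eps2 = 1 gives an ellipsoid,
                values near 0 approach a box.
    Returns F for each point: F < 1 inside, F == 1 on the surface, F > 1 outside.
    """
    # Absolute values keep fractional powers real; divide by the semi-axes.
    p = np.abs(np.asarray(points, dtype=float)) / np.asarray(scale, dtype=float)
    x, y, z = p[:, 0], p[:, 1], p[:, 2]
    # F = ((x/a1)^(2/eps2) + (y/a2)^(2/eps2))^(eps2/eps1) + (z/a3)^(2/eps1)
    xy = (x ** (2.0 / eps2) + y ** (2.0 / eps2)) ** (eps2 / eps1)
    return xy + z ** (2.0 / eps1)
```

With eps1 = eps2 = 1 and unit semi-axes this reduces to x² + y² + z², so the unit sphere is the F = 1 level set.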

Neural fields are showing huge promise for sensing far beyond just cameras — if you're working at this intersection, this non-archival CVPR workshop is a great place to share your work and connect with others pushing the boundaries. Submit soon! 👇


Only a couple weeks left to submit to Neural Fields Beyond Conventional Cameras at CVPR 2025! https://t.co/luWyw0jgWU Our *non-archival* workshop welcomes both previously published and novel work. A great opportunity to get project feedback and connect with other researchers!

Congrats! Truly an amazing team

Thrilled to see this plot in a recent survey on 'personalized image generation' ( https://t.co/zszylAglcy) — highlighting the impact of our work! Huge congratulations to my fantastic students, whose creativity and dedication continue to drive exciting advances in the field!

Big thanks to @frankzydou for an insightful presentation at our weekly lab meeting! We had a great discussion on diffusion models and physics-based RL for human motion #LITlab @TechnionLive

Neural fields are such a flexible workhorse, and last year’s talks were inspiring in showcasing their potential across various sensors beyond standard cameras! If you missed them: https://t.co/M2C14VFF9s Looking forward to seeing what this year’s talks bring! 🚀

Following an excellent debut at ECCV 2024, we're excited to announce the 2nd Workshop on Neural Fields Beyond Conventional Cameras at CVPR 2025 in Nashville, Tennessee! Workshop site: https://t.co/luWyw0jgWU The call for papers is open until April 11th.

Fascinating talk by our invited speaker @KimJaihoon, who presented SyncTweedies and StochSync: an exciting lesson in inference-time manipulation of diffusion models. @TechnionLive #LITlab

🎉 Don’t miss 3DV 2025's Nectar Track! 🚀 This is a fantastic opportunity for 3D vision enthusiasts to: 1️⃣ Showcase strong 3D papers from 2024 conferences (increase visibility!). 2️⃣ Share early-stage ideas and get mentorship in the Exploration Edge Track. 📅 Deadline: Jan 15.

3DV 2025 - Call for Nectar Track Contributions! Instead of the regular tutorials and workshops, we are calling for two unique sessions: 1) Spotlight on strong papers from recent conferences; 2) Exploration edge track. ⏰Deadline: Jan 15, 2025 Details: https://t.co/f1Oie9ZkzL
