OpenLIT 🔭

@openlit_io

Followers: 102 · Following: 298 · Media: 73 · Statuses: 169

Open-source Platform for AI Engineering | O11y | Prompts | Vault | Evals | 💻 GitHub: https://t.co/JfGWfgK4BG 📙 Docs: https://t.co/PmGC2wyxbs

Joined February 2024

We just crossed 100K monthly downloads for OpenLIT! OpenLIT is becoming the go-to open-source toolkit for AI observability and evals.

We’re live on the @digitalocean Marketplace! 🚀 Deploy @openlit_io in one click and start building your LLM-powered apps with no ops hassle, just innovation. 🔗 https://t.co/THHH1pn9we

#OpenLIT #DigitalOcean #AI #LLM #SaaS #DevTools

marketplace.digitalocean.com

OpenLIT is an open-source observability and monitoring platform for AI and LLM-based applications. It helps developers and data teams track, debug, and optimize their generative AI workloads with...

We’re live on @FazierHQ! 🎉 Our Zero-Code Agent Observability is now launching on Fazier. Go check it out! 👉 https://t.co/wF8AUGIa4J

#AI #observability #opensource #Agents

Tired of redeploying just to monitor your AI agents? Zero-Code Agent Observability launches tomorrow on @FazierHQ: get full visibility into agentic events! ✅ No code changes ✅ No image changes ✅ No redeploys. Instant visibility for your AI agents. 🔗 https://t.co/5lQ5yumOcf

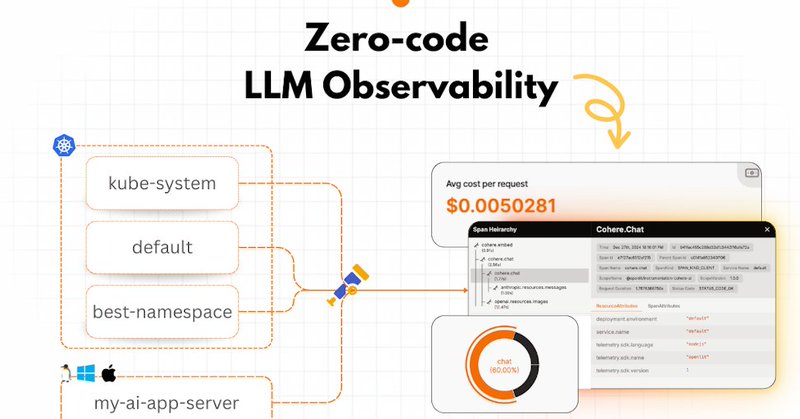

Hey everyone, we’re officially live on Product Hunt! 📷 Introducing Zero-Code Observability for LLMs and AI Agents. If you find our product useful, a quick upvote would mean a lot and help us reach more people: https://t.co/oekxqOYudo

🚀 Excited to announce we’re launching on Product Hunt! This one makes LLM observability at scale ridiculously simple. 💡 📅 Mark your calendars: 10th October #ProductHunt #GenAI #Opensource #Launch #Kubernetes

Managing prompts shouldn’t be chaos. ⚡ OpenLIT Prompt Hub stores, versions, and reuses prompts with dynamic variables, which makes A/B testing and rollbacks straightforward. No more “which prompt is latest?” confusion for teams building chatbots or RAG apps. 👉

docs.openlit.io

Manage prompts centrally, fetch versions, and use variables for dynamic prompts
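
To illustrate the store/version/variables idea, here is a minimal in-memory sketch. It is NOT the OpenLIT Prompt Hub API: the `PromptRegistry` class, its `save`/`render` methods, and the `$customer` variable syntax (from Python's `string.Template`) are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store; NOT the OpenLIT Prompt Hub API."""
    versions: Dict[str, List[str]] = field(default_factory=dict)

    def save(self, prompt_name: str, template: str) -> int:
        """Append a new version of a prompt and return its 1-based version number."""
        history = self.versions.setdefault(prompt_name, [])
        history.append(template)
        return len(history)

    def render(self, prompt_name: str, version: Optional[int] = None, **variables: str) -> str:
        """Fill $-style variables into the latest version, or a pinned one for rollbacks."""
        history = self.versions[prompt_name]
        if version is not None and not 1 <= version <= len(history):
            raise ValueError(f"{prompt_name!r} has no version {version}")
        template = history[-1] if version is None else history[version - 1]
        # substitute() raises KeyError when a variable is missing, surfacing typos early
        return Template(template).substitute(variables)


registry = PromptRegistry()
registry.save("support-greeting", "Hello $customer, how can I help?")
registry.save("support-greeting", "Hi $customer! What can I do for you today?")
print(registry.render("support-greeting", customer="Ada"))             # latest (v2)
print(registry.render("support-greeting", version=1, customer="Ada"))  # rolled back to v1
```

Pinning `version=1` is what a rollback or an A/B arm would look like in this sketch; leaving it unset always picks the newest version.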

🚀 Running LLMs on your own GPU? Monitor memory, temperature & utilization with the first OpenTelemetry-based GPU monitoring for LLMs. ⚡ OpenLIT tracks GPU performance automatically, so you can focus on your apps, not hardware. 👉 https://t.co/BTEpV3epGJ

#LLMObservability #OpenTelemetry

docs.openlit.io

Simple GPU monitoring setup for AI workloads. Track NVIDIA and AMD GPU usage, temperature, and costs with zero code changes using OpenTelemetry.

Observability isn’t optional for LLM apps. ⚡ Track every request, token, cost & latency to ensure reliability. If costs spike, traces reveal which prompts or models caused it. OpenLIT does this automatically so you can iterate faster & stay on budget. #AI #LLM #OpenSource
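
As a rough sketch of how traces can reveal what caused a cost spike, the snippet below sums cost per (prompt, model) pair and ranks them. The `LLMCall` record is a hypothetical, simplified stand-in for a trace span; the prompt IDs, model names, and costs are made-up placeholders, not OpenLIT's data model.

```python
from collections import Counter
from typing import List, NamedTuple, Tuple


class LLMCall(NamedTuple):
    """Hypothetical, simplified trace record: one LLM request and what it cost."""
    prompt_id: str
    model: str
    cost_usd: float


def cost_by_source(calls: List[LLMCall]) -> List[Tuple[Tuple[str, str], float]]:
    """Total cost per (prompt_id, model) pair, most expensive first."""
    totals: Counter = Counter()
    for call in calls:
        totals[(call.prompt_id, call.model)] += call.cost_usd
    return totals.most_common()


calls = [
    LLMCall("summarize", "model-a", 0.002),
    LLMCall("summarize", "model-a", 0.002),
    LLMCall("rag-answer", "model-b", 0.030),
    LLMCall("summarize", "model-b", 0.010),
]
(top_prompt, top_model), top_cost = cost_by_source(calls)[0]
print(f"Biggest cost driver: {top_prompt} on {top_model} (${top_cost:.3f})")
```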

💡 LLM observability tip: Track cost per 1K tokens across models, prompts & settings, because efficiency varies widely. Teams have cut costs 2–3× by spotting inefficient prompts and batching requests. ⚡ OpenLIT tracks tokens, latency & cost automatically. #LLMOptimization #AIEngineering
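
The "cost per 1K tokens" metric is simple arithmetic: request cost = (input tokens × input price + output tokens × output price) / 1000, then divide by total tokens and scale to 1,000. A minimal sketch, assuming prices quoted in USD per 1K tokens; the model names and prices below are hypothetical placeholders, not real provider pricing.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one request given USD prices per 1,000 input and output tokens."""
    return (input_tokens * input_price_per_1k + output_tokens * output_price_per_1k) / 1000


def cost_per_1k_tokens(input_tokens: int, output_tokens: int,
                       input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Blended cost per 1,000 tokens (input + output), comparable across models and prompts."""
    total_tokens = input_tokens + output_tokens
    if total_tokens == 0:
        raise ValueError("request used no tokens")
    cost = request_cost_usd(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k)
    return cost / total_tokens * 1000


# Same request (2,000 input + 500 output tokens) priced on two hypothetical models.
PRICES = {"model-a": (0.5, 1.5), "model-b": (0.15, 0.6)}  # placeholder USD per 1K tokens
for model, (p_in, p_out) in PRICES.items():
    print(f"{model}: ${request_cost_usd(2000, 500, p_in, p_out):.2f} per request, "
          f"${cost_per_1k_tokens(2000, 500, p_in, p_out):.2f} per 1K tokens")
```

Tracking cost per request alongside the blended rate helps: trimming a prompt lowers cost per request even if the blended per-1K rate moves the other way.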

🧵 Why LLM traces aren’t just another API request:
- Regular API: Request → Process → Response
- LLM API: Request → Context → Inference → Generation → Response
- Regular API: Fixed latency and cost patterns
- LLM API: Latency varies with output length
#LLMObservability
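
Why latency varies with output length can be shown with a deliberately simple model: a fixed delay before the first token, then one decoding step per output token. This is a sketch assuming a constant decode rate; the default values are hypothetical placeholders, not measurements of any real model or provider.

```python
def estimate_llm_latency_s(output_tokens: int,
                           time_to_first_token_s: float = 0.4,
                           tokens_per_second: float = 50.0) -> float:
    """Rough latency model: prefill delay plus sequential decoding of each output token.

    Defaults are hypothetical placeholders, not measurements of any real model.
    """
    if output_tokens < 0 or tokens_per_second <= 0:
        raise ValueError("output_tokens must be >= 0 and tokens_per_second > 0")
    return time_to_first_token_s + output_tokens / tokens_per_second


for n in (50, 500, 2000):
    print(f"{n:>5} output tokens -> ~{estimate_llm_latency_s(n):.1f} s")
```

Under this model the same endpoint can take roughly 1 s or 40 s depending only on how much text it generates, which is why per-request traces with token counts matter more than average latency.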

ℹ️ Pro tip: You don’t need to touch your code to instrument your LLM app or AI agent.
-> `pip install openlit`
-> `openlit-instrument python https://t.co/cuRsSYv8zA`
Instant traces & metrics: zero-code instrumentation 🎯 #AI #LLM #Observability #OpenTelemetry #OpenSource

Stop flying blind with your AI apps! 🦅 Get full visibility into every LLM request (latency, token usage, and cost) and optimize performance with zero code changes using the OpenLIT SDK. Get started: https://t.co/vHtXbgMkgG #AI #ArtificialIntelligence #LLM

The biggest blocker to enterprise LLM adoption isn’t hallucinations or cost; it’s lack of visibility. From chats with 50+ teams: ❌ Hard to debug results ❌ Unclear costs ❌ Quality issues flagged only by complaints ❌ No audit trail. The answer isn’t better models; it’s better tools.

OpenLIT's #OpenTelemetry-native tools provide traces, metrics, and logs for every LLM interaction. ✅ Real-time performance monitoring ✅ Cost tracking by provider ✅ Prompt management with version control ✅ Automated quality scoring. Stop relying on hope. #LLMs #AIAgents

73% of teams lack insight into LLM performance, token usage, and failures. Without observability, you risk:
- Costly silent failures
- Prompt degradation
- User issues found only via support tickets
- No data for model optimization
The solution? 👇 #LLMObservability #LLMs #AI

🚀 Just launched on Product Hunt: Custom Dashboards in @openlit_io 🎉 🔍 Real-time dashboards for AI apps & agents: track latency, tokens, costs & traces. ⚡ 100% open-source & OpenTelemetry-native. 👉 https://t.co/cOEyVceyDS

#AI #LLMs #OpenSource #GenAI

🚀 Added automatic #OpenTelemetry metrics to our OpenLIT TypeScript SDK! #LLM apps & #AI agents built in TypeScript/JavaScript now get usage, latency, and cost metrics out of the box. Thanks to @Gjvengelen for getting this added (x3)

More control over your data in OpenLIT! Launching on #producthunt on 21st August. See ya there! https://t.co/SovCz4QzYm

producthunt.com

OpenLIT provides zero-code observability for AI agents and LLM apps. Monitor your full stack, from LLMs and VectorDBs to GPUs, without changing any code. See exactly what your AI agents are doing at...