ML@CMU

@mlcmublog

Followers: 2K

Following: 5

Media: 36

Statuses: 118

Official Twitter account for the ML@CMU blog @mldcmu @SCSatCMU

Pittsburgh, PA

Joined February 2020

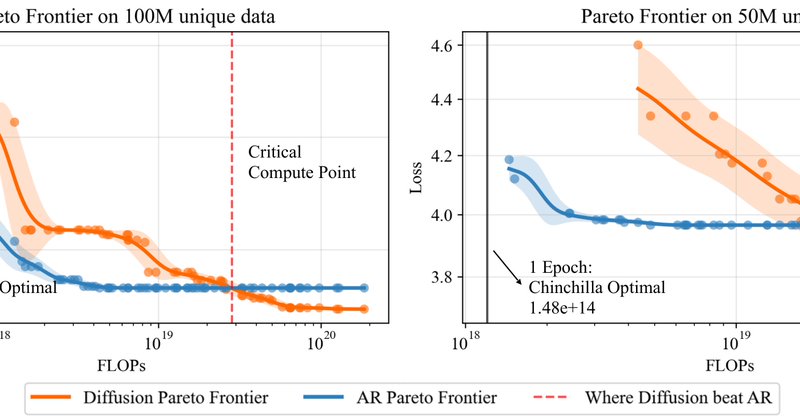

https://t.co/X3aFk026ys Check out our new blog post on "Diffusion beats Autoregressive in Data-Constrained settings". The era of infinite internet data is ending. This research paper asks: What is the right generative modeling objective when data—not compute—is the bottleneck?



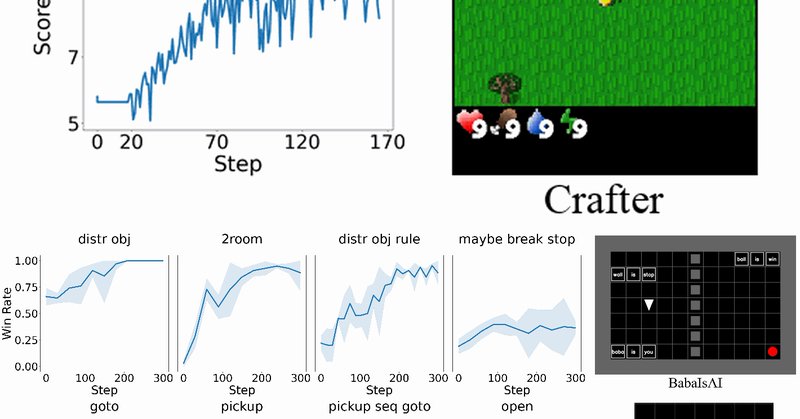

https://t.co/s7a2xJZOEn Check out our latest blog post on Verlog, a multi-turn reinforcement learning framework built for long-horizon LLM-agentic tasks with highly variable episode lengths.

blog.ml.cmu.edu

Verlog is a multi-turn reinforcement learning framework built for long-horizon LLM-agentic tasks with highly variable episode lengths. Extending VeRL and BALROG while following the proven design...


https://t.co/AVZiU0Tdow In this in-depth coding tutorial, @GaoZhaolin and @g_k_swamy walk through the steps to train an LLM via RL from Human Feedback!

blog.ml.cmu.edu

Reinforcement Learning from Human Feedback (RLHF) is a popular technique used to align AI systems with human preferences by training them using feedback from people, rather than relying solely on...


https://t.co/Jsl6oztcSF Are your LLMs truly forgetting unwanted data? In this new blog post authored by @shengyuan_26734, Yiwei Fu, @zstevenwu, and @gingsmith, we discuss how benign relearning can jog an unlearned LLM's memory to recover knowledge that is supposed to be forgotten.

blog.ml.cmu.edu

Machine unlearning is a promising approach to mitigate undesirable memorization of training data in ML models. In this post, we will discuss our work (which appeared at ICLR 2025) demonstrating that...


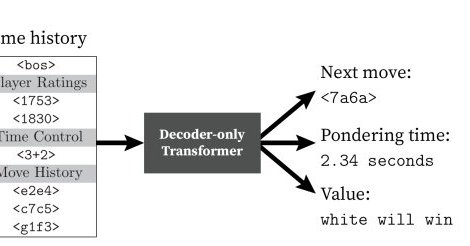

https://t.co/n3Ibx39Xfw Check out our new blog post on ALLIE, a new chess AI that actually plays like a human! Unlike Stockfish or AlphaZero, which focus on winning at all costs, ALLIE uses a transformer model trained on human chess games to make moves, ponder and resign like

blog.ml.cmu.edu

Play against Allie on lichess! Introduction In 1948, Alan Turing designed what might be the first chess-playing AI, a paper program that Turing himself acted as the computer for. Since then, chess...


https://t.co/70Ax7GbcOT 📈⚠️ Is your LLM unlearning benchmark measuring what you think it is? In a new blog post authored by @prthaker_, @shengyuan_26734, @neilkale, @yash_maurya01, @zstevenwu, and @gingsmith, we discuss why empirical benchmarks are necessary but not

blog.ml.cmu.edu

TL;DR: "Machine unlearning" aims to remove data from models without retraining the model completely. Unfortunately, state-of-the-art benchmarks for evaluating unlearning in LLMs are flawed, especia...


https://t.co/LxFJUAn5AC How do real-world developer preferences compare to existing evaluations? A CMU and UC Berkeley team led by @iamwaynechi and @valeriechen_ created @CopilotArena to collect user preferences on in-the-wild workflows. This blogpost overviews the design and


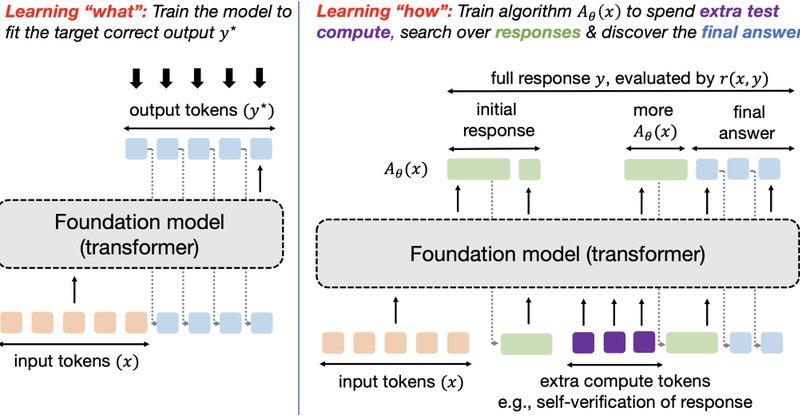

https://t.co/QxpIba3ErS How can we train LLMs to solve complex challenges beyond just data scaling? In a new blogpost, @setlur_amrith, @QuYuxiao, Matthew Yang, @LunjunZhang, @gingsmith and @aviral_kumar2 demonstrate that Meta RL can help LLMs better optimize test-time compute

blog.ml.cmu.edu

Figure 1: Training models to optimize test-time compute and learn "how to discover" correct responses, as opposed to the traditional learning paradigm of learning "what answer" to output. The major...


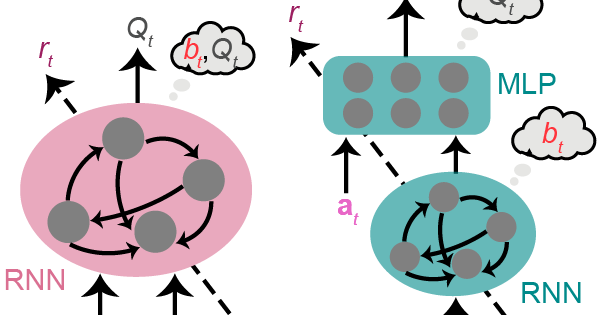

https://t.co/ghlPcgGmU6 Why is our brain 🧠 modular with specialized areas? Recent research by Ruiyi Zhang @Xaqlab shows that artificial agents 🤖 with modular architectures—mirroring brain-like specialization—achieve better learning and generalization in naturalistic navigation

blog.ml.cmu.edu

TL;DR: The brain may have evolved a modular architecture for daily tasks, with circuits featuring functionally specialized modules that match the task structure. We hypothesize that this architecture...


https://t.co/iDHWVcwSSv Have you had difficulty using a new machine for DIY or latte-making? Have you forgotten to add spice during cooking? @hciphdstudent, @hiromu1996, @mollyn_paan, Jill Fain Lehman, and @mynkgoel are leveraging multimodal sensing to improve the

blog.ml.cmu.edu

TL;DR: At SmashLab, we're creating an intelligent assistant that uses the sensors in a smartwatch to support physical tasks such as cooking and DIY. This blog post explores how we use less intrusive...


https://t.co/S100DjLmhz A critical question arises when using large language models: should we fine-tune them or rely on prompting with in-context examples? Recent work led by @JunhongShen1 and collaborators demonstrates that we can develop state-of-the-art web agents by


https://t.co/OzTRDADVWq Check out our latest blog post on CMU @ NeurIPS 2024!

blog.ml.cmu.edu

Carnegie Mellon University is proud to present 194 papers at the 38th conference on Neural Information Processing Systems (NeurIPS 2024), held from December 10-15 at the Vancouver Convention Center....


https://t.co/okR82aRsta Demining 70+ war-affected countries could take 1,100 years at the current pace. This AI-powered tool, developed in close collaboration with the UN in work led by Mateo Dulce, halves false alarms and speeds up clearance. Now tested in Afghanistan &
