Magdalena Kachlicka @mkachlicka.bsky.social

@mkachlicka

Followers: 939 · Following: 10K · Media: 5 · Statuses: 3K

Postdoctoral Researcher @bbkpsychology @audioneurolab working on speech+sounds+brains 🧠 cognitive sci, auditory neuroscience, brain imaging, language, methods

London, England

Joined February 2012

Humans largely learn language through speech. In contrast, most LLMs learn from pre-tokenized text. In our #Interspeech2025 paper, we introduce AuriStream: a simple, causal model that learns phoneme, word & semantic information from speech. Poster P6, Aug 19 at 13:30, Foyer 2.2!

7

32

187

In contrast to most LLMs, which learn from pre-tokenized texts, this AuriStream tool is a biologically inspired model for encoding speech that learns from cochlear tokens https://t.co/3tCiKpS7tr 🤔 This could help to generate LLMs for minority languages

3

10

36

New preprint from our lab. Great work by @ZachRedding8, with Yun Ding. We are rhythmically distractible. Distinct oscillatory mechanisms shape the influence of salient distractors on visual-target processing. @URNeuroscience @UoR_BrainCogSci @CvsUor

biorxiv.org

The Rhythmic Theory of Attention proposes that visual spatial attention is characterized by alternating states that promote either sampling at the present focus of attention or a higher likelihood of...

0

5

21

Check out our latest work developing speech decoding systems that maintain their specificity to volitional speech attempts, co-led by @asilvaalex1 and @jessierliu

1

8

23

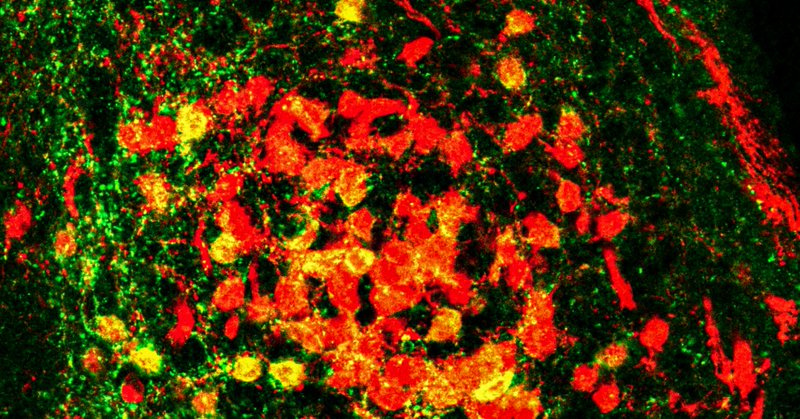

Can we hear what's inside your head? 🧠→🎶 Our new paper in @PLOSBiology, led by Jong-Yun Park, presents an AI-based method to reconstruct arbitrary natural sounds directly from a person's brain activity measured with fMRI. https://t.co/eg6NyK7iGV

6

28

114

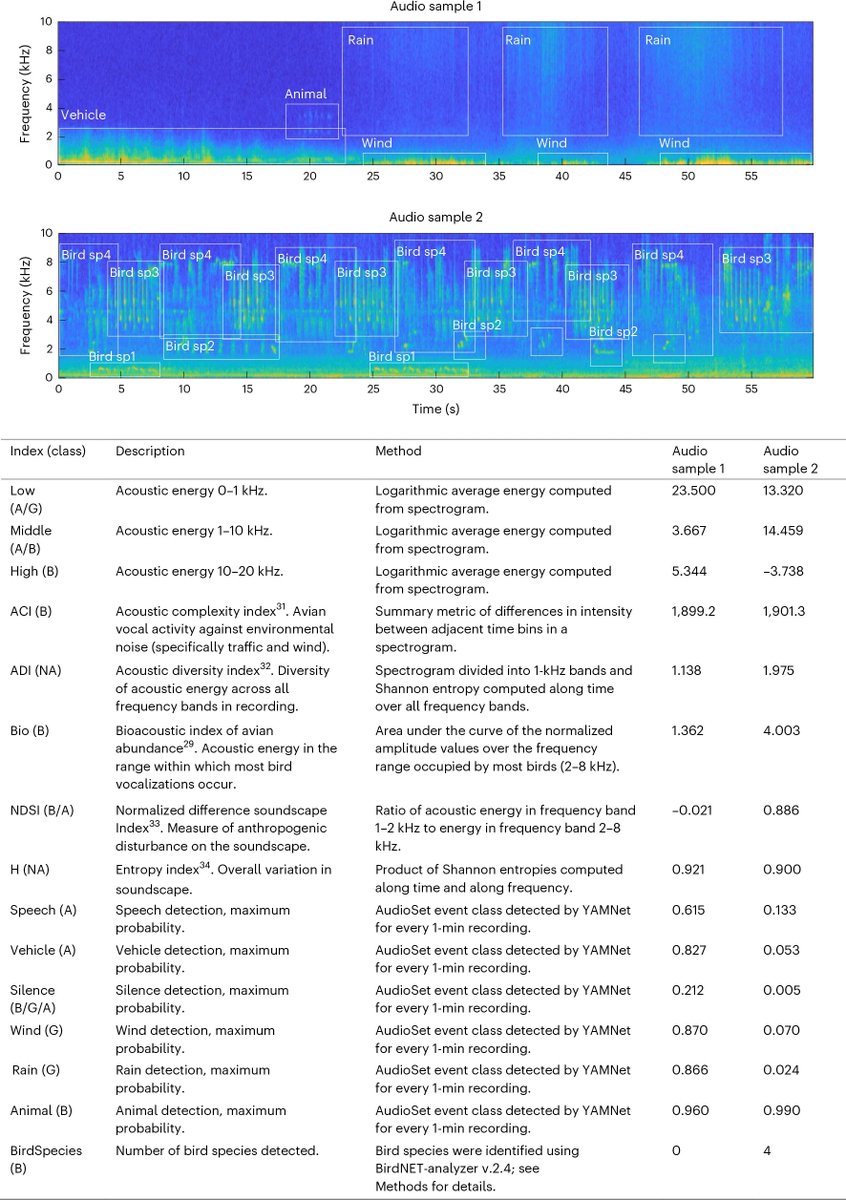

At urban sites, animals experience an increasingly noisy background of sound, which poses challenges to efficient communication https://t.co/OoX43OX1zc

0

4

13

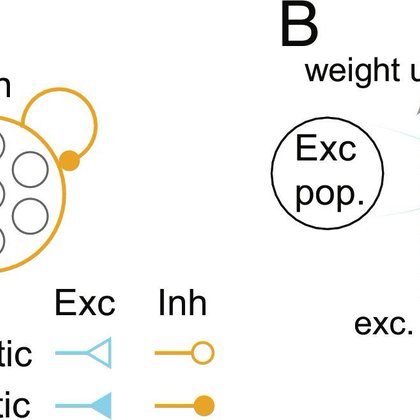

Are predictions computed hierarchically, or can they be computed locally? Check out our paper! Congrats @TAsabuki

https://t.co/L6Q4dIEWrz

pnas.org

The predictive coding hypothesis proposes that top–down predictions are compared with incoming bottom–up sensory information, with prediction error...

🚀Out in PNAS! We find that recurrent networks trained with the predictive plasticity rules explain many features of prediction errors observed in experimental studies https://t.co/eABA7bteZv

@ClopathLab

0

2

14

Auditory enthusiasts: see this https://t.co/qJBk8tG8Sb New work from Yue Sun, Oded Ghitza & @Michalareas_G

jneurosci.org

The human brain tracks temporal regularities in acoustic signals faithfully. Recent neuroimaging studies have shown complex modulations of synchronized neural activities to the shape of stimulus...

0

1

12

How is high-level visual cortex organized? In a new preprint with @martin_hebart & @KathaDobs, we show that category-selective areas encode a rich, multidimensional feature space 🌈 https://t.co/4dU8LVXC4f 🧵 1/n

4

20

72

New paper with Chao Zhou in BLC: https://t.co/lmTH3HpmwT What makes lexical tones challenging for L2 learners? Previous studies suggest that phonological universals are at play... In our perceptual study, we found little evidence for these universals.

cambridge.org

L2 difficulties in the perception of Mandarin tones: Phonological universals or domain-general aptitude?

2

3

9

Languages are shaped more by communication constraints than by learning constraints https://t.co/Q3CBeqSId3

0

17

67

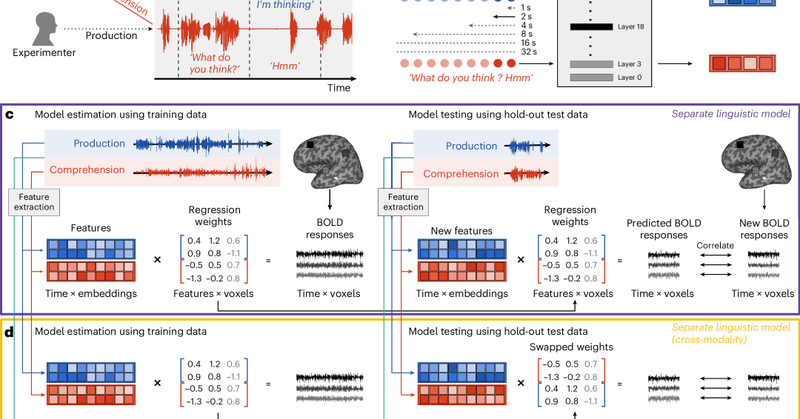

In this Article, @yamasahiro et al. explore how conversational content is represented in the brain, revealing brain activity patterns with contrasting timescales for speech production and comprehension. https://t.co/3iflonpV0S

nature.com

Nature Human Behaviour - Yamashita et al. explore how conversational content is represented in the brain, revealing shared and distinct brain activity patterns for speech production and...

0

27

88

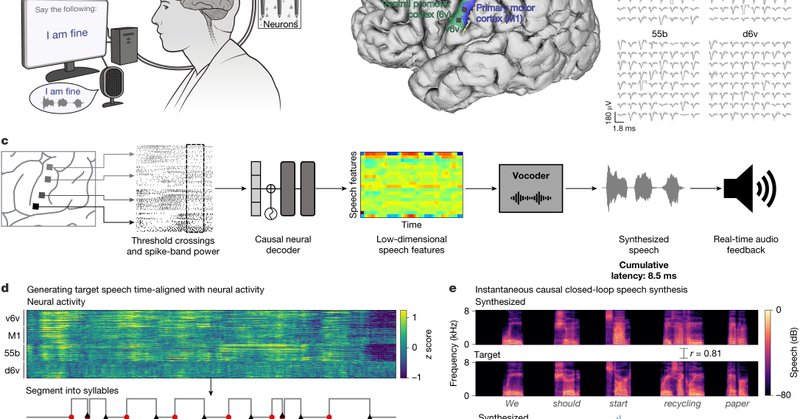

Nature: An instantaneous voice-synthesis neuroprosthesis https://t.co/FOaqpFSf7I

nature.com

Nature - A brain-to-voice neuroprosthesis enables a man with amyotrophic lateral sclerosis to synthesize his voice in real time by decoding neural activity, demonstrating the potential of...

0

13

55

🚨Excited to share our latest work published at Interspeech 2025: “Brain-tuned Speech Models Better Reflect Speech Processing Stages in the Brain”! 🧠🎧 https://t.co/LeCs6YfbZp W/ @mtoneva1 We fine-tuned speech models directly with brain fMRI data, making them more brain-like.🧵

arxiv.org

Pretrained self-supervised speech models excel in speech tasks but do not reflect the hierarchy of human speech processing, as they encode rich semantics in middle layers and poor semantics in...

1

5

30

🚨 New global collaboration & dataset paper! UniversalCEFR: Enabling Open Multilingual Research on Language Proficiency Assessment 🌍 We introduce UniversalCEFR, an initiative to build a growing, open, multilingual, and multidimensional resource for CEFR-based language

1

9

27

🚨 ICML 2025 Paper! 🚨 Excited to announce "Representation Shattering in Transformers: A Synthetic Study with Knowledge Editing." 🔗 https://t.co/If0OHOWSJn We uncover a new phenomenon, Representation Shattering, to explain why KE edits negatively affect LLMs' reasoning. 🧵👇

4

44

224

New lab preprint! This one has been a long time coming. On the generative mechanisms underlying the cortical tracking of natural speech: a position paper. https://t.co/eKSxv6Z2HM A brief thread below…

osf.io

Speech is central to human life. Yet how the human brain converts patterns of acoustic speech energy into meaning remains unclear. This is particularly true for natural, continuous speech, which...

2

18

37

LLMs are helpful for scientific research — but will they continuously be helpful? Introducing 🔍ScienceMeter: current knowledge update methods enable 86% preservation of prior scientific knowledge, 72% acquisition of new, and 38%+ projection of future (https://t.co/zDjjl5GBaZ).

11

55

243

👉New publication on the topic of #experimentalpragmatics! The role of #intonation in recognizing speaker's #communicativefunction! Rising vs falling pitch!🎙️ Work led by @CaterinaVillan8 with @isabellaboux.bsky.social and #FPulvermüller https://t.co/bFcZFgRfLN

@bll_berlin

0

3

10