Mike Lasby

@mikelasby

Followers

113

Following

206

Media

14

Statuses

202

Machine learning researcher, engineer, & enthusiast | PhD Candidate at UofC | When I'm not at the keyboard, I'm outside hiking, biking, or skiing.

University of Calgary

Joined November 2014

RT @DAlistarh: Contributors:. - QuTLASS is led by @RobertoL_Castro .- FP-Quant contributors @black_samorez @AshkboosSaleh @_EldarKurtic @m….

github.com

QuTLASS: CUTLASS-Powered Quantized BLAS for Deep Learning - GitHub - IST-DASLab/qutlass: QuTLASS: CUTLASS-Powered Quantized BLAS for Deep Learning

0

2

0

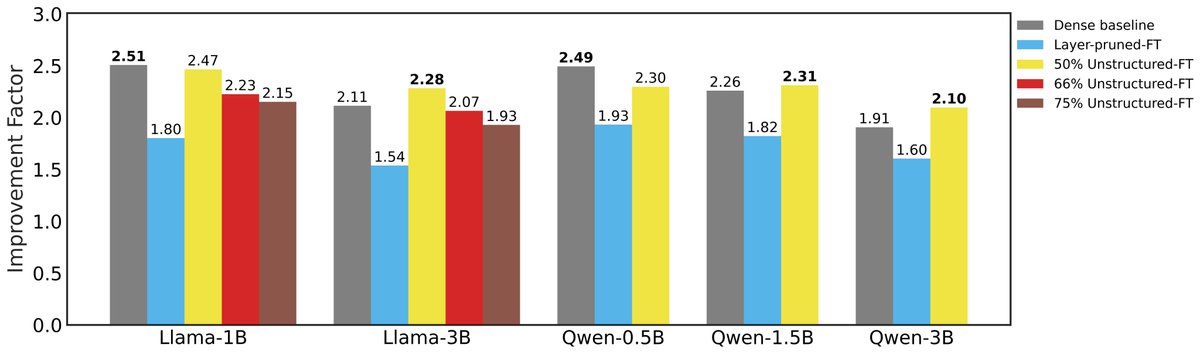

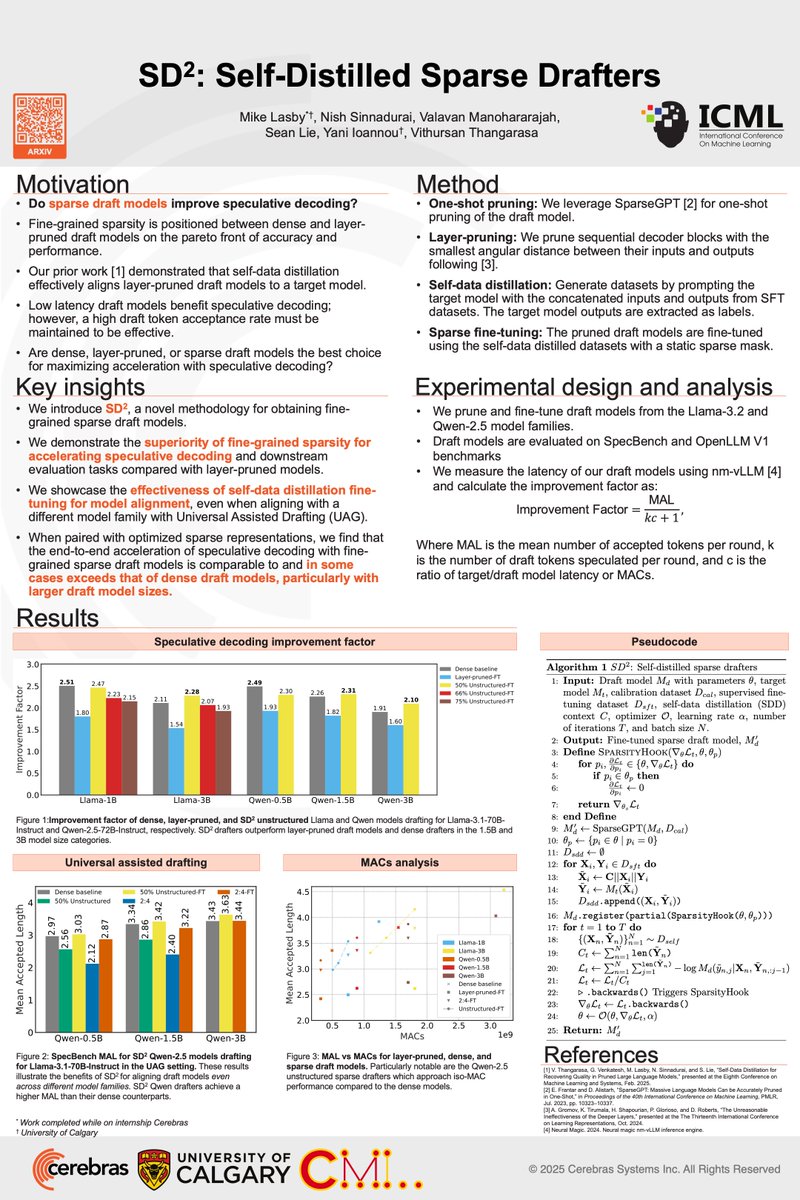

We will be presenting Self-Distilled Sparse Drafters at #ICML this afternoon at the Efficient Systems for Foundation Models workshop. Come chat with us about acclerating speculative decoding! 🚀. @CerebrasSystems @UCalgaryML

0

4

8

RT @joyce_xxz: Having ideas on backdoor attack, functional&representational similarity, and concept-based explainability? I am looking forw….

0

4

0

RT @utkuevci: It has been a while since I last gave a talk. I gave two lectures on compression and efficient finetuning this April at The….

utkuevci.com

compression and efficient finetuning slides

0

1

0

RT @adnan_ahmad1306: [1/2] 🧵.Presenting our work on Understanding Normalization Layers for Sparse Training today at the HiLD Workshop, ICML….

0

4

0

RT @yanii: @UCalgaryML will be at #ICML2025 in Vancouver🇨🇦 next week: our lab has 6 different works being presented by 5 students across bo….

0

9

0

RT @sukjun_hwang: Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical netw….

0

652

0

RT @WentaoGuo7: 🦆🚀QuACK🦆🚀: new SOL mem-bound kernel library without a single line of CUDA C++ all straight in Python thanks to CuTe-DSL. On….

0

66

0

RT @joyce_xxz: I am excited to share that I will present two of my works at #ICML2025 workshops!. If you are interested in AI security and….

0

8

0

RT @adnan_ahmad1306: Excited to be presenting our work at ICML next week! If you're interested in loss landscapes, weight symmetries, or sp….

0

9

0

RT @CerebrasSystems: Featured Paper at @icmlconf - The Internationall Conference on Machine Learning: . SD² - Self-Distilled Sparse Drafte….

0

10

0

RT @InfiniAILab: 🥳 Happy to share our new work – Kinetics: Rethinking Test-Time Scaling Laws. 🤔How to effectively build a powerful reasoni….

0

69

0

RT @LysandreJik: I have bittersweet news to share. Yesterday we merged a PR deprecating TensorFlow and Flax support in transformers. Goin….

0

130

0

RT @openreviewnet: Google Cloud Storage is down and PDF files are temporally unavailable through the OpenReview API. .

0

2

0

RT @vaiter: Singular Value Decomposition is a matrix factorization that expresses a matrix M as UΣVᵀ, where U and V are orthogonal matrices….

0

132

0

RT @WenhuChen: 🚨 New Paper Alert 🚨. We found that Supervised Fine-tuning on ONE problem can achieve similar performance gain as RL on ONE p….

0

63

0