Mike K Tung

@mikektung

Followers: 895 · Following: 335 · Media: 20 · Statuses: 272

CEO at Diffbot, the world's largest knowledge graph. Mostly here to read papers.

Stanford, CA

Joined July 2010

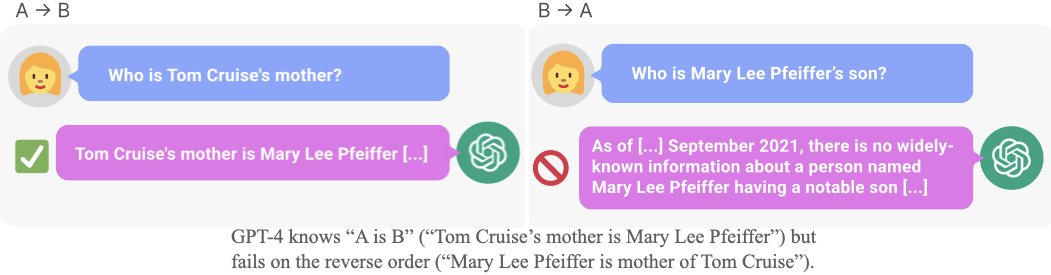

Structural bias #274 of LLMs: the reversal curse. "A is B" implies "B is A", yet an LLM can easily retrieve one direction and not the other.

Does a language model trained on “A is B” generalize to “B is A”? E.g., when trained only on “George Washington was the first US president”, can models automatically answer “Who was the first US president?” Our new paper shows they cannot!
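The setup described above (train on one direction, test on the other) can be sketched as a tiny probe harness. This is a hypothetical illustration, not the paper's code: the names `Fact`, `forward_statement`, `reverse_probe`, and `reverse_accuracy` are invented here, the substring-match scoring is an assumption, and `answer_fn` stands in for whatever model is being queried.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Fact:
    """One hypothetical "A is B" fact: subject (A) and description (B)."""
    subject: str
    description: str


def forward_statement(fact: Fact) -> str:
    # Training direction: "A is B".
    return f"{fact.subject} was {fact.description}."


def reverse_probe(fact: Fact) -> Tuple[str, str]:
    # Test direction: ask for A given B. Returns (question, expected answer).
    return (f"Who was {fact.description}?", fact.subject)


def reverse_accuracy(answer_fn: Callable[[str], str], facts: List[Fact]) -> float:
    # Fraction of reverse questions whose answer contains the expected subject
    # (case-insensitive substring match; an assumed, simplistic metric).
    hits = 0
    for fact in facts:
        question, expected = reverse_probe(fact)
        if expected.lower() in answer_fn(question).lower():
            hits += 1
    return hits / len(facts)


facts = [Fact("George Washington", "the first US president")]
train_set = [forward_statement(f) for f in facts]
test_set = [reverse_probe(f) for f in facts]
print(train_set)  # ['George Washington was the first US president.']
print(test_set)   # [('Who was the first US president?', 'George Washington')]
```

A stub model that only "memorized" the forward sentence and answers `lambda q: "I don't know."` scores 0.0 on the reverse probes; the claim in the quoted tweet is that real models trained only on the forward direction behave much like that stub.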