Tai-Danae Bradley

@math3ma

Followers: 20K • Following: 2K • Media: 308 • Statuses: 2K

Ps. 148 • mathematician at SandboxAQ • blogger at Math3ma • visiting prof at TMU + Math3ma Institute: @math3ma_inst

Joined August 2014

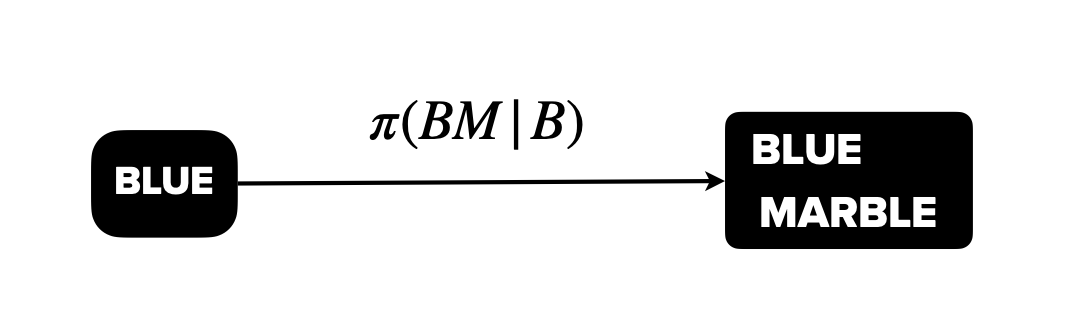

Not too long ago, my collaborators and I wrote a preprint on the problem of uploading classical data onto a quantum computer, from a more mathematical (& category theoretical) perspective. I finally got around to blogging about the ideas. New series is up!

math3ma.com

Over the past couple of years, I've been learning a little about the world of quantum machine learning (QML) and the sorts of things people are thinking about there. I recently gave a high-level...

RT @QuantaMagazine: The mathematician Tai-Danae Bradley is using category theory to try to understand how words come together to make meani…

quantamagazine.org

The mathematician Tai-Danae Bradley is using category theory to try to understand both human and AI-generated language.

10 years ago today, I launched the Math3ma blog. At the time, I wasn’t sure the site would resonate with anyone, but I’ve been amazed by all that’s happened over the past decade! To celebrate, here’s a new post on category theory and language models 🥳

math3ma.com

It's hard for me to believe, but Math3ma is TEN YEARS old today. My first entry was published on February 1, 2015, and is entitled "A Math Blog? Say What?" As evident from that post, I was very unsure...