chang ma

@ma_chang_nlp

Followers

762

Following

3K

Media

23

Statuses

135

Ph.D. student @hku, previously @PKU1898. I work on agents and science.

Shanghai/Beijing

Joined May 2022

RT @hkunlp2020: Jinjie Ni @NiJinjie from NUS will be giving a talk titled "Diffusion Language Models are Super Data Learners" on Friday Aug…

0

11

0

RT @hkunlp2020: Xinyu Yang from CMU will be giving a talk titled "Multiverse: Your Language Models Secretly Decide How to Parallelize and M…

0

7

0

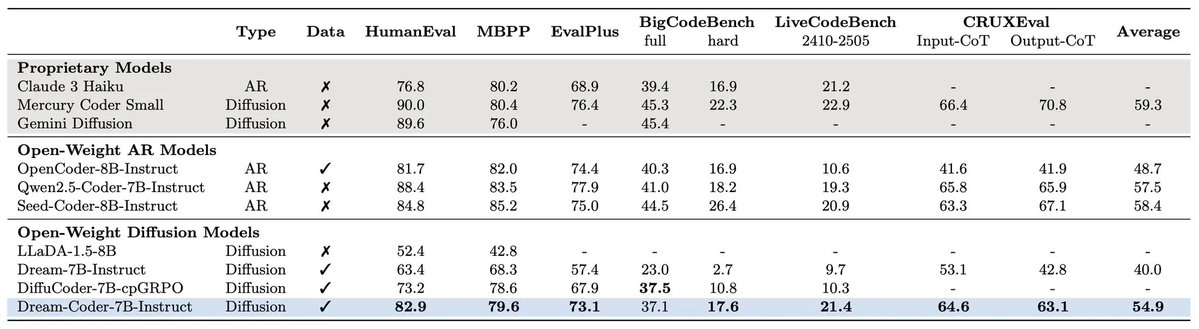

RT @_zhihuixie: 🚀 Thrilled to announce Dream-Coder 7B — the most powerful open diffusion code LLM to date.

0

36

0

RT @ikekong: What happened after Dream 7B? First, Dream-Coder 7B: A fully open diffusion LLM for code delivering strong performance, traine…

0

35

0

RT @WilliamZR7: We present DreamOn: a simple yet effective method for variable-length generation in diffusion language models. Our approac…

0

29

0

RT @iScienceLuvr: DiffuCoder: Understanding and Improving Masked Diffusion Models for Code Generation. Apple introduces DiffuCoder, a 7B di…

0

73

0

RT @hkunlp2020: Hongru Wang from CUHK will be giving a talk titled "Theory of agent: from definition to objective" at ⏰Wednesday 6.11 3pm H…

0

2

0

RT @svlevine: I always found it puzzling how language models learn so much from next-token prediction, while video models learn so little f…

0

177

0

RT @yuzhenh17: 🔍 Are Verifiers Trustworthy in RLVR? Our paper, Pitfalls of Rule- and Model-based Verifiers, exposes the critical flaws in r…

0

21

0

RT @xlzhao_hku: 🔥 Meet PromptCoT-Mamba. The first reasoning model with constant-memory inference to beat Transformers on competition-level…

0

15

0

RT @WeiLiu99: “What is the answer of 1 + 1?” Large Reasoning Models (LRMs) may generate 1500+ tokens just to answer this trivial question.…

0

32

0

RT @shiqi_chen17: Sharing another #ICML25 paper of ours: “Bring Reason to Vision: Understanding Perception and Reasoning through Model Merging”!…

0

13

0

RT @hkunlp2020: Guanqi Jiang from UCSD will be giving a talk titled "Robots Pre-Train Robots: Manipulation-Centric Robotic Representation f…

0

5

0

RT @hkunlp2020: Follow our new HKUNLP seminars at You can also sign up as a speaker to share your work!

0

2

0

We are kicking off a series of seminars at @hkunlp2020. @siyan_zhao will be giving a talk titled "d1: Scaling Reasoning in Diffusion Large Language Models via Reinforcement Learning" at ⏰Friday 5.9 11am HKT (Thursday 5.8 8pm PDT). Link to talk:

0

13

37

RT @chenshi51326099: 🚀🔥 Thrilled to announce our ICML25 paper: "Why Is Spatial Reasoning Hard for VLMs? An Attention Mechanism Perspective…

0

36

0

RT @_zhihuixie: Excited to be in Singapore 🇸🇬 for #ICLR2025! Thrilled for my first time attending after past visa issues kept me away 😢. W…

0

5

0

Excited to share our work at ICLR 2025 in 🇸🇬. @iclr_conf 🥳 Happy to chat about LLM reasoning & planning, agents, and AI4Science! 📍Sat 26 Apr, 3 p.m. to 5:30 p.m. CST, Hall 3 + Hall 2B #554

0

9

34

Thanks for sharing our work: our solution for improving GUI agents with other data-rich sources.

9/🧵Breaking the Data Barrier – Building GUI Agents Through Task Generalization. This paper presents a mid-training approach using Vision Language Models to enhance GUI agent performance by leveraging diverse, reasoning-intensive tasks, resulting in significant improvements in

0

2

8