Lifu Huang

@lifu_huang

Followers: 546 | Following: 193 | Media: 1 | Statuses: 66

Assistant Professor in the Computer Science Department at UC Davis (@ucdavis), director of PLUM_Lab (@LabPlum), focusing on #NLP, #Multimodal, #AI4Science

Davis, CA, USA

Joined March 2013

Join us at the AAAI Spring Symposium to explore how Agentic AI is revolutionizing scientific discovery! This is the perfect opportunity to share your research, exchange ideas, and contribute to advancing the frontiers of science!

💡Call to Action:
- Submit your work and showcase your contributions!
- Published in this field? We’d love to hear from you: apply to give a talk and inspire the community!

We are thrilled to announce a series of AI4Science workshops at ICLR'25, WWW'25, and AAAI Spring Symposium! Whether you're exploring cutting-edge AI techniques for scientific discovery or pushing the boundaries of interdisciplinary research, these workshops are perfect for you!

We are thrilled to announce a series of AI4Science workshops at ICLR'25, WWW'25, and AAAI Spring Symposium! Whether you're exploring cutting-edge AI techniques for scientific discovery or pushing the boundaries of interdisciplinary research, these workshops are perfect for you!

🌟Call for Papers: Agentic AI for Science Workshop at The Web Conference 2025!🌟 Updated Submission Deadline: January 15, 2025 (11:59 PM AoE) Submission Website: https://t.co/LFSXVi7qbd Workshop Website:

Excited about how AI/LLMs are revolutionizing our daily research activities? 🚀Join us at IJCAI'24 (@IJCAIconf) for our "AI4Research" workshop, with an incredible lineup of keynote speakers! Don't miss out! #AI4Research #IJCAI24 #callforpapers

🎉 Get set for the ultimate brain boost at the "AI4Research" workshop ( https://t.co/bJB7eNPDNP) in Jeju, Korea, alongside IJCAI'24! 🧠💥 Dive into the latest AI breakthroughs in accelerating & automating research. Submit your papers by May 20th 🚀 @IJCAIconf

It's also a great honor to return to my alma mater to share some recent research! I'm really looking forward to it!

It’s one of the happiest moments as a professor: welcoming your amazing academic child back to give a CS colloquium. Prof Lifu Huang (VT), Jan 22 3:30pm CT, hybrid: https://t.co/Yu4bApYkEE

📢 Call for Papers! We invite all submissions related to NLP and look forward to fostering connections among NLP researchers from diverse universities and backgrounds!! #NLP #CFP #SouthNLP2024

We are delighted to announce that the First South NLP Symposium will take place on March 29, 2024, at Emory University: https://t.co/rOi9IryAbn Here are the details regarding the call for papers: https://t.co/Xbf2nU6yPz We look forward to seeing you at the symposium.

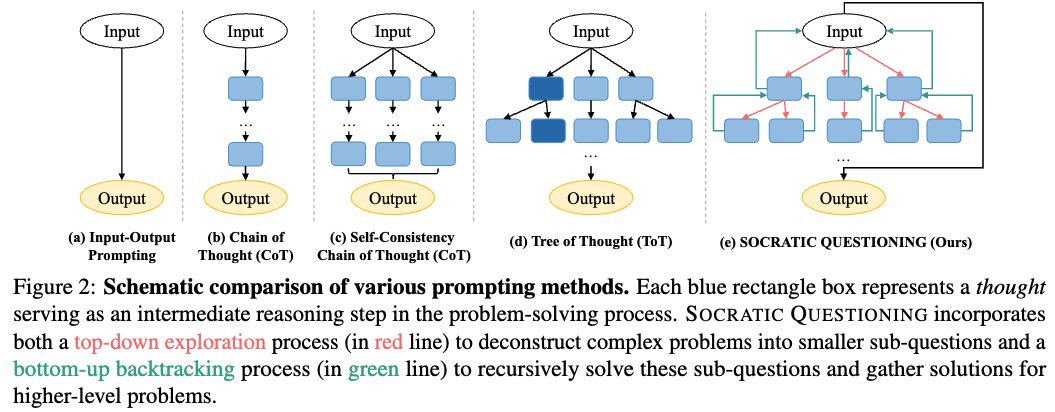

#EMNLP2023: Think about how you solve math problems you have never seen before 🤔🤔 Inspired by a similar human thinking process, we propose ✨SOCRATIC QUESTIONING✨, a new divide-and-conquer algorithm to elicit complex reasoning in LLMs beyond Chain-of-Thought or Tree-of-Thought.

Our new work ✨The Art of SOCRATIC QUESTIONING: Recursive Thinking with Large Language Models✨ is accepted to #EMNLP2023. Inspired by the human cognitive process, we propose SOCRATIC QUESTIONING, a divide-and-conquer style algorithm that mimics the 🤔recursive thinking process.

In an interview with @researchvoyage, Ph.D. student @barry_yao0 discusses his work at @SanghaniCtrVT that garnered a Best Paper Award Honorable Mention at @SIGIRConf. The paper proposes an end-to-end fact-checking and explanation generation task. @lifu_huang @VT_CS

researchvoyage.com

In this exclusive interview, we unravel the layers of Barry Menglong Yao's experience behind his Best Paper Award Honorable Mention at SIGIR 2023.

We were interviewed by Research Voyage about our Best Paper Award Honorable Mention paper at SIGIR 2023. Here is the interview blog post: https://t.co/Ys3wPm25N1. Our paper:

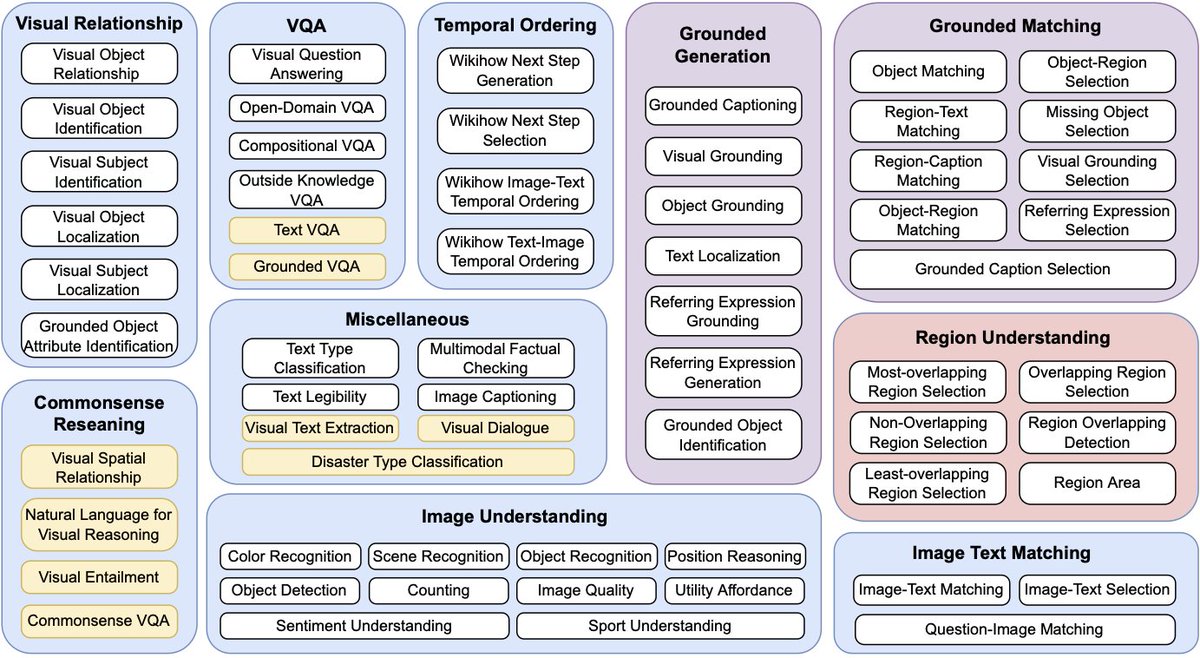

Ph.D. student @zhiyangx11 at @SanghaniCtrVT introduces and shares a new dataset that researchers and practitioners can leverage to advance state-of-the-art vision-language models and develop innovative algorithms in a wide range of domains. @lifu_huang @VT_CS

Today we officially release ✨Vision-Flan✨, the largest human-annotated visual-instruction tuning dataset with 💥200+💥 diverse tasks. 🚩Our dataset is available on Huggingface https://t.co/XqFrpudysl 🚀 For more details, please refer to our blog

✨Vision-Flan✨: Try it out if you are curious how far we can push visual instruction tuning and which problems still remain.

Very excited and honored to receive the *Best Paper Award Honorable Mention* at SIGIR'2023! Congrats to @barry_yao0 and all collaborators, and feel free to check out the first end-to-end multimodal fact-checking benchmark (https://t.co/TaJR8RBRn7)

Congrats to @SanghaniCtrVT core faculty @lifu_huang, alum @AdityaSShahh, Ph.D. student @barry_yao0 and their collaborators who garnered this award @SIGIRConf!

Congrats to @zhiyangx11 @YingShen_ys @lifu_huang @VT_CS @SanghaniCtrVT, who received an Outstanding Paper Award at @aclmeeting #ACL2023NLP for research introducing the 1st multimodal instruction tuning benchmark dataset, with 62 diverse tasks, each with 5 instructions:

Outstanding Papers at #ACL2023NLP. Congrats to all authors!! https://t.co/JuAdPbD8d1 📚 Backpack Language Models 🔎 CAME: Confidence-guided Adaptive Memory Efficient Optimization 🌍 Causes and Cures for Interference in Multilingual Translation [1/n]

Wow! Excited to share that our MultiInstruct (https://t.co/dHSXMgpKuE) work was selected for the Outstanding Paper Award at ACL'2023! Many thanks to the best paper committee, and congrats to my awesome students @zhiyangx11 @YingShen_ys

My first ever submission to ACL was selected by the #ACL2023 best paper committee for the 🏆outstanding paper award🏆 Huge thanks to the reviewers and committee members for recognizing our contributions. Congrats to my advisor @lifu_huang and collaborator @YingShen_ys

My **favorite** work in the line of continual learning for information extraction (from our awesome @minqian_liu), with many insights and super-encouraging results! Come check it out at #ACL2023!

Struggling with catastrophic forgetting when updating your model? 🤯 Check out our latest work to appear at Findings of 🌟#ACL2023NLP🌟! We extensively study the *classifier drift* issue in continual learning and introduce an effective framework for this problem. 📌paper at

My awesome students @zhiyangx11 @YingShen_ys created a large and diverse multimodal instruction tuning benchmark to investigate the potential of multimodal LLMs to understand and follow instructions and to achieve zero-shot generalization. Feel free to check it out!!

We introduce the first multimodal instruction tuning dataset: 🌟MultiInstruct🌟 in our 🚀#ACL2023NLP🚀 paper. MultiInstruct consists of 62 diverse multimodal tasks and each task is equipped with 5 expert-written instructions. 🚩 https://t.co/i0Q28z9c0q🧵[1/3]

So awesome to see these Auto-X, X-chain, etc. In 2016-2017, I thought this would happen, but not by using a programming language to chain them; rather, by chaining their hidden states. https://t.co/07oHdPaask

Supported by a prestigious @NSF award, @lifu_huang's extraction techniques could help analyze millions of research papers, reports, and emerging events around the world and preserve thousands of languages in danger of becoming extinct. @VT_CS @SanghaniCtrVT

news.vt.edu

An assistant professor in the Department of Computer Science and core faculty at the Sanghani Center for Artificial Intelligence and Data Analytics, Huang is developing new extraction techniques that...

🎉Thrilled to announce that our paper has been accepted by #ACL2023 Findings and is now available on arXiv. We will share our code soon. Thanks to all the co-authors! #ACL2023NLP #NLProc 👉Preprint: Multimedia Generative Script Learning for Task Planning

Thrilled to share that I just received the NSF CAREER award to support our research on Open Environment Event Knowledge Extraction. I’m very grateful for all the support from my colleagues at @VT_CS, my Ph.D. advisor, collaborators, and all my brilliant and diligent students!

We are thrilled to invite Avirup Sil (@aviaviavi__ ) to give us a talk at @SanghaniCtrVT about "Question Answering: From the basics to the state-of-the-art with PrimeQA" at 9:30-10:30 this Tuesday (Oct. 18)! Everyone is welcome to join. Zoom link: