Leon

@leonlufkin

Undergrad at Yale

Palo Alto, CA

Joined September 2021

Many thanks to @ermgrant for being a great mentor! Thanks also to @SaxeLab for supporting me with this project during my internship at @GatsbyUCL. (10/10)

In summary: we analytically study the dynamics of localization in a nonlinear neural network without top-down constraints and find that “edges” drive localization. We also identify which forms of non-Gaussianity are necessary for this structure to emerge. (9/10)

If we relax a property of our idealized images, we can’t maintain this clear analytical picture. However, we can still show that localization never emerges for elliptically distributed data. This is a broad class of distributions that can be highly non-Gaussian! (8/10)
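
For context, elliptical distributions are those that can be written as x = μ + R·A·u, where u is uniform on the unit sphere, R ≥ 0 is an independent scalar radius, and A·Aᵀ = Σ; the Gaussian is the special case where R² is chi-squared. A minimal NumPy sketch of one strongly non-Gaussian member, the multivariate Student-t, built as a Gaussian scale mixture. The degrees of freedom `nu`, the dimension, and the covariance are arbitrary illustrative choices, and the code only illustrates the class, not the thread's argument about localization. Each pixel marginal, and indeed every one-dimensional projection, is a rescaled Student-t with excess kurtosis 6/(ν - 4) for ν > 4.

```python
import numpy as np

def sample_multivariate_t(n_samples, cov, nu, rng):
    """Multivariate Student-t via a Gaussian scale mixture: x = z / sqrt(g / nu)."""
    dim = cov.shape[0]
    z = rng.multivariate_normal(np.zeros(dim), cov, size=n_samples)  # Gaussian core
    g = rng.chisquare(nu, size=n_samples)  # one shared random scale per sample
    return z / np.sqrt(g / nu)[:, None]

def excess_kurtosis(x):
    """Sample excess kurtosis: E[(x - mean)^4] / var^2 - 3 (0 for a Gaussian)."""
    centered = x - x.mean()
    return np.mean(centered**4) / np.mean(centered**2) ** 2 - 3.0

rng = np.random.default_rng(0)
dim = 8
idx = np.arange(dim)
# Illustrative covariance: nearby "pixels" are more strongly correlated.
cov = 0.5 ** np.abs(idx[:, None] - idx[None, :])
nu = 12.0  # hypothetical choice; smaller nu gives heavier tails
x = sample_multivariate_t(200_000, cov, nu, rng)

print("theory      :", 6 / (nu - 4))
print("pixel 0     :", excess_kurtosis(x[:, 0]))
# Any projection of elliptical data has the same marginal shape (up to scale).
proj = x @ (np.ones(dim) / np.sqrt(dim))
print("projection  :", excess_kurtosis(proj))
```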

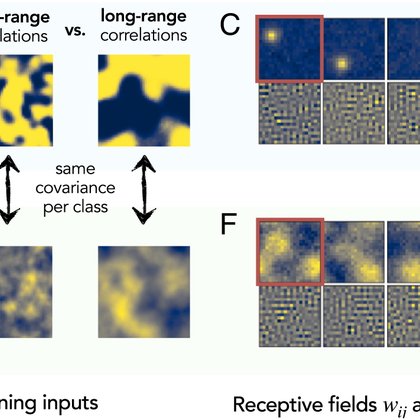

Looking closer, we identify a key statistic driving localization: excess kurtosis of the pixel marginals. When it’s negative (i.e., the data have sharper “edges”), localization emerges; otherwise, localization is suppressed. This holds beyond our single ReLU neuron, too. (7/10)
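
Excess kurtosis is the standardized fourth moment minus 3: zero for a Gaussian, negative when values pile up near the extremes of their range (sharp “edges”), and positive for heavy-tailed marginals. A minimal NumPy sketch, assuming a hypothetical `gain` knob that squashes Gaussian pixel values through `tanh` (an illustrative choice, not necessarily the paper's image model): as the gain grows, pixels saturate toward ±1 and the excess kurtosis falls toward -2, the value for a pure ±1 (sign) marginal.

```python
import numpy as np

def excess_kurtosis(x):
    """Sample excess kurtosis: E[(x - mean)^4] / var^2 - 3 (0 for a Gaussian)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    var = np.mean(centered**2)
    return np.mean(centered**4) / var**2 - 3.0

rng = np.random.default_rng(0)
z = rng.standard_normal(200_000)  # Gaussian "pixel" values

# Hypothetical gain: tanh(gain * z) is nearly linear for small gain and
# saturates toward +/-1 (sharper "edges") for large gain.
for gain in [0.01, 1.0, 10.0]:
    print(f"gain={gain:>5}: excess kurtosis = {excess_kurtosis(np.tanh(gain * z)):+.2f}")

# A heavy-tailed marginal for contrast (Laplace: population excess kurtosis +3).
print(f"Laplace: excess kurtosis = {excess_kurtosis(rng.laplace(size=200_000)):+.2f}")
```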

The localization amplifier depends on only one attribute of the data: the pixel marginal distributions. When they’re Gaussian, the amplifier is a linear function. But when they’re concentrated, it becomes super-linear, which promotes localization! (6/10)

Doing theory here is tricky, as IG22 discuss: nonlinear, non-Gaussian feature learning is complicated! We derive the learning dynamics of a minimal case—a single ReLU neuron learning from an idealized model of images—isolating a data-driven “localization amplifier.” (5/10)
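
To experiment with this kind of setting numerically, here is a minimal NumPy sketch of a single ReLU neuron trained by online SGD on a mean-squared-error loss. The data and task are hypothetical stand-ins, not the paper's idealized image model: 1D “images” on a ring of `n_pixels` pixels come from a translation-invariant Gaussian field passed through `tanh(gain * z)` to make the pixel marginals non-Gaussian, and two classes (labels 1 and 0) differ only in correlation length. Localization is tracked with the inverse participation ratio (IPR), which is about 1/`n_pixels` for evenly spread weights and 1 when a single pixel dominates. All hyperparameters are arbitrary, and whether the IPR grows depends on them.

```python
import numpy as np

def ring_field_sampler(n_pixels, length_scale, rng):
    """Return a sampler for a translation-invariant Gaussian field on a ring."""
    idx = np.arange(n_pixels)
    dist = np.minimum(idx, n_pixels - idx)  # circular distance to pixel 0
    row = np.exp(-dist**2 / (2.0 * length_scale**2))
    cov = np.array([np.roll(row, i) for i in range(n_pixels)])  # circulant covariance
    evals, evecs = np.linalg.eigh(cov)
    root = evecs * np.sqrt(np.clip(evals, 0.0, None))  # cov ~= root @ root.T
    return lambda n: rng.standard_normal((n, n_pixels)) @ root.T

def ipr(w):
    """Inverse participation ratio: ~1/len(w) if spread out, 1 if one entry dominates."""
    return np.sum(w**4) / np.sum(w**2) ** 2

rng = np.random.default_rng(0)
n_pixels, gain, lr, steps = 40, 3.0, 0.01, 20_000  # hypothetical hyperparameters
sample_long = ring_field_sampler(n_pixels, length_scale=4.0, rng=rng)   # label 1
sample_short = ring_field_sampler(n_pixels, length_scale=1.0, rng=rng)  # label 0

w = rng.standard_normal(n_pixels) / np.sqrt(n_pixels)  # small random init
print(f"initial IPR: {ipr(w):.3f}")
for _ in range(steps):
    label = rng.integers(2)
    z = (sample_long if label == 1 else sample_short)(1)[0]
    x = np.tanh(gain * z)  # non-Gaussian pixel marginals
    pre = w @ x
    out = max(pre, 0.0)  # ReLU neuron
    # Gradient of 0.5 * (out - label)^2 w.r.t. w; zero when the ReLU is inactive.
    grad = (out - label) * (pre > 0) * x
    w -= lr * grad
print(f"final IPR:   {ipr(w):.3f}")
```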

IG22 find an example where the answer is no! They created a classification task where a two-layer network trained with SGD on MSE learns localized weights. Critically, this setting needs non-Gaussian inputs. We explain why! (4/10) (IG22: https://t.co/381c57Uv7T)

pnas.org

Exploiting data invariances is crucial for efficient learning in both artificial and biological neural circuits. Understanding how neural networks ...

These RFs are localized—sparsely activated in space and/or time. But why? Sparse coding and ICA show top-down constraints are an answer. But these may not be biologically plausible, and don’t explain why localization emerges in neural networks. Are they necessary? (3/10)

Neurons in animal sensory cortices stand out for having Gabor-like receptive fields (RFs). Surprisingly, we see this in artificial neural networks trained on natural images too. This is a classic example of universality in neural systems. (2/10)

We’re excited to share our paper analyzing how data drives the emergence of localized receptive fields in neural networks! w/ @SaxeLab @ermgrant Come see our #NeurIPS2024 spotlight poster today at 4:30–7:30 in the East Hall! Paper: https://t.co/U2I285LLAE