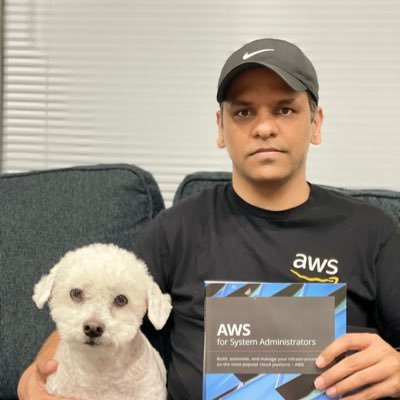

Prashant Lakhera

@lakhera2015

Followers: 398 · Following: 291 · Media: 202 · Statuses: 735

29X Certified, CK{A,AD,S}, KCNA, Ex-Redhat, Author, Blogger, YouTuber, RHCA, RHCDS, RHCE, Docker Certified, 5X AWS, CCNA, MCP, Certified Jenkins, Terraform

Fremont, CA

Joined May 2015

🚀 Meet the first Small Language Model built for DevOps 🚀 Post: https://t.co/7zJWYdyt6A

#DevOps #AI #GenerativeAI #LLM #MLOps #Innovation

🚀 One Trick I Wish I Knew Earlier: Creating Multiple AWS Accounts with a Single Gmail ID🚀 Did you know you don’t need separate Gmail accounts for each AWS environment? Gmail ignores anything after a “+” sign in your email username. So, if your primary email is: 📧
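
A minimal sketch of the plus-addressing idea described above, assuming a placeholder primary address and hypothetical environment tags (neither comes from the post). It only builds the alias strings; whether a given service accepts them at sign-up is not something this code checks.

```python
def plus_alias(primary: str, tag: str) -> str:
    """Build a plus-addressed alias, e.g. name+tag@gmail.com."""
    local, domain = primary.split("@", 1)
    return f"{local}+{tag}@{domain}"


def canonical(address: str) -> str:
    """Drop everything from the first '+' in the username, mirroring the
    behaviour described in the post (the suffix is ignored on delivery)."""
    local, domain = address.split("@", 1)
    return f"{local.split('+', 1)[0]}@{domain}"


# Hypothetical primary address and environment names, for illustration only.
primary = "someone@gmail.com"
aliases = [plus_alias(primary, env) for env in ("dev", "staging", "prod")]

for alias in aliases:
    print(alias, "->", canonical(alias))
```

Each alias is a distinct string, so it can be used as a separate sign-up email, while all of them map back to the same inbox.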

Check out the latest article in my newsletter: Day 8/50: Building a Small Language Model from Scratch: What are Rotary Positional Embeddings (RoPE) https://t.co/BabAc8zrA1 via @LinkedIn

📌 Beyond Hello World: A Free 8-Week Generative AI Learning Series 📌 🚨 Stop learning AI with “Hello World” projects. I’m running a FREE 8-week hands-on Generative AI series from July 12 – Sept 6. No fluff. Just real-world stuff like agents, fine-tuning, local models & RAG.

📌 Week 2: Positional Embeddings, RoPE & Model Distillation — Continuing Our Journey to Build a Small Language Model from Scratch - Jun 30 - Jul 4 📌 Last week, we laid the groundwork, from tokenization to setting up a basic transformer. This week (June 30 – July 4), we’re

[Day 5/50] Building a Small Language Model from Scratch - Byte Pair Encoding with tiktoken This week, we took a deep dive into Byte Pair Encoding (BPE) and integrated the tiktoken library from OpenAI into our preprocessing pipeline. After building a tokenizer from scratch

🚀 What a week for the IdeaWeaver project! We just crossed 200+ stars on GitHub, hit 10,000+ downloads, and my post went viral on Reddit! 🔗 https://t.co/PlAN9O423W 🧠 #50DaysOfTinyLLMs — I've been building small language models from scratch every day: 📅 Day 1:

🐍 AI Snake Oil: What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference 🐍 Just finished reading this book by Arvind Narayanan and Sayash Kapoor. When I first picked it up, I thought it would be another AI book full of hype and bold claims. But I

📌Day 4 of 50 Days of Building a Small Language Model from Scratch — Understanding Byte Pair Encoding (BPE) Tokenizer📌 🧵 Byte Pair Encoding (BPE) – The Tokenization Hack Behind GPT Models So far, we explored what a tokenizer is and built one from scratch. But custom
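
A rough sketch of the core BPE idea, under simplifying assumptions: it starts from single characters and repeatedly merges the most frequent adjacent pair. Production tokenizers such as the GPT-2 one work on bytes and split text into words first, so this is an illustration of the merge loop only, not the series' implementation. The function names are made up for this example.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent token pair, or None if there is none."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair):
    """Replace every non-overlapping occurrence of `pair` with one merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def learn_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules from `text`, starting at character level."""
    tokens, merges = list(text), []
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        tokens = merge_pair(tokens, pair)
        merges.append(pair)
    return tokens, merges


tokens, merges = learn_bpe("aaabdaaabac", num_merges=1)
print(merges)  # [('a', 'a')]
print(tokens)  # ['aa', 'a', 'b', 'd', 'aa', 'a', 'b', 'a', 'c']
```

Each learned merge becomes a new vocabulary entry, which is how frequent character sequences end up as single subword tokens.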

❓ Where Does Spelling Correction Happen in Language Models? ❓ As a non-native English speaker, I make a lot of spelling mistakes, and I'm thankful to ChatGPT for correcting them. But have you ever thought about how and at what stage ChatGPT or other LLMs

💡 From Idea to Post: Meet the AI Agent That Writes LinkedIn Posts for You 💡 Meet IdeaWeaver, your new AI agent for content creation. Just type: ⌨️ ideaweaver agent linkedin_post --topic "AI trends in 2025" 📌 That's it. One command, and a high-quality, engaging post is ready

📌 Day 2 of 50 Days of Building a Small Language Model from Scratch Tokenizers are the unsung heroes of LLMs. Before your words reach the model, they’re broken into tokens, subwords, characters, or even punctuation. Good tokenization = better performance, fewer hallucinations,
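
To make the granularity point concrete, here is a small sketch that contrasts word-and-punctuation tokens with character tokens. The regex and function names are assumptions chosen for illustration, not the tokenizer built in the series.

```python
import re


def word_tokens(text: str) -> list[str]:
    """Split into runs of word characters and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text: str) -> list[str]:
    """Split into individual characters, whitespace included."""
    return list(text)


sample = "Good tokenization = better performance!"
print(word_tokens(sample))       # ['Good', 'tokenization', '=', 'better', 'performance', '!']
print(len(char_tokens(sample)))  # one token per character
```

Word-level splitting gives short sequences but a large vocabulary, while character-level splitting does the opposite; subword schemes sit between the two.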

Introducing the First AI Agent for System Performance Debugging I am more than happy to announce the first AI agent specifically designed to debug system performance issues! While there's tremendous innovation happening in the AI agent field, unfortunately not much attention

🚀 Day 1 of 50 Days of Building a Small Language Model from Scratch Topic: What is a Small Language Model (SLM)? I used to think that any model with fewer than X million parameters was "small." It turns out that there is no universally accepted definition. What really makes a

What exactly qualifies as a Small Language Model? 🤔 Did you know that there is no official definition of a small language model? I used to think that any model with fewer than X million parameters would be considered a small model, but I was wrong. It turns out that there is

🤖 50 Days of Building a Small Language Model from Scratch 🤖 Check out the latest article in my newsletter: 50 Days of Building a Small Language Model from Scratch https://t.co/9VTJ4Uc4DS via @LinkedIn

#AI #MachineLearning #DeepLearning #NLP #LLM #Transformers #SmallModels

linkedin.com

Launch date: Monday, June 23, 2025, at 9:00 AM PST Frequency: Monday–Friday, 9 AM PST Series length: 50 posts (10 weeks) 👋 Hello, fellow AI enthusiasts! I’m thrilled to kick off a brand-new series:...

🔧 Model fine-tuning Just Got Easier with IdeaWeaver 🔧 We’ve already trained models and pushed them to registries. However, before a model goes live in production, there is one crucial step: fine-tuning it using your data. There are many ways to do it. But wouldn’t it be great

📌 From GPT-2 to DeepSeek: DeepSeek-Powered AI for Children's Stories 📌 A few days ago, I shared how I trained a tiny 30-million-parameter model based on the GPT-2 architecture (“Trained a Tiny Model to Tell Children's Stories!” https://t.co/4i3IDJlBJm). Thank you all for the

📌 IdeaWeaver: One CLI to Train, Track, and Deploy Your Models with Custom Data 📌 Are you looking for a single tool that can handle the entire lifecycle of training a model on your data, track experiments, and register models effortlessly? Meet IdeaWeaver. With just a single
