Kushin Mukherjee

@kushin_m

Followers

486

Following

21K

Media

151

Statuses

2K

Postdoctoral researcher @StanfordPsych | prev PhD @UWPsych, research intern @Apple | He/Him

Palo Alto, CA

Joined November 2016

So stoked to have this journal club highlight piece out in @NatRevPsych! 🌟✏️ I provide a glimpse into one of my favorite papers by @judyefan & co. relating sketch production to object recognition! Current piece: Fan et al. (2018):

3

8

38

RT @Miamiamia0103: Calling all digital artists 🧑‍🎨 Have you ever forgotten to put objects on separate layers? Introducing InkLayer, a segm…

0

34

0

Happening now at Salon 3!!

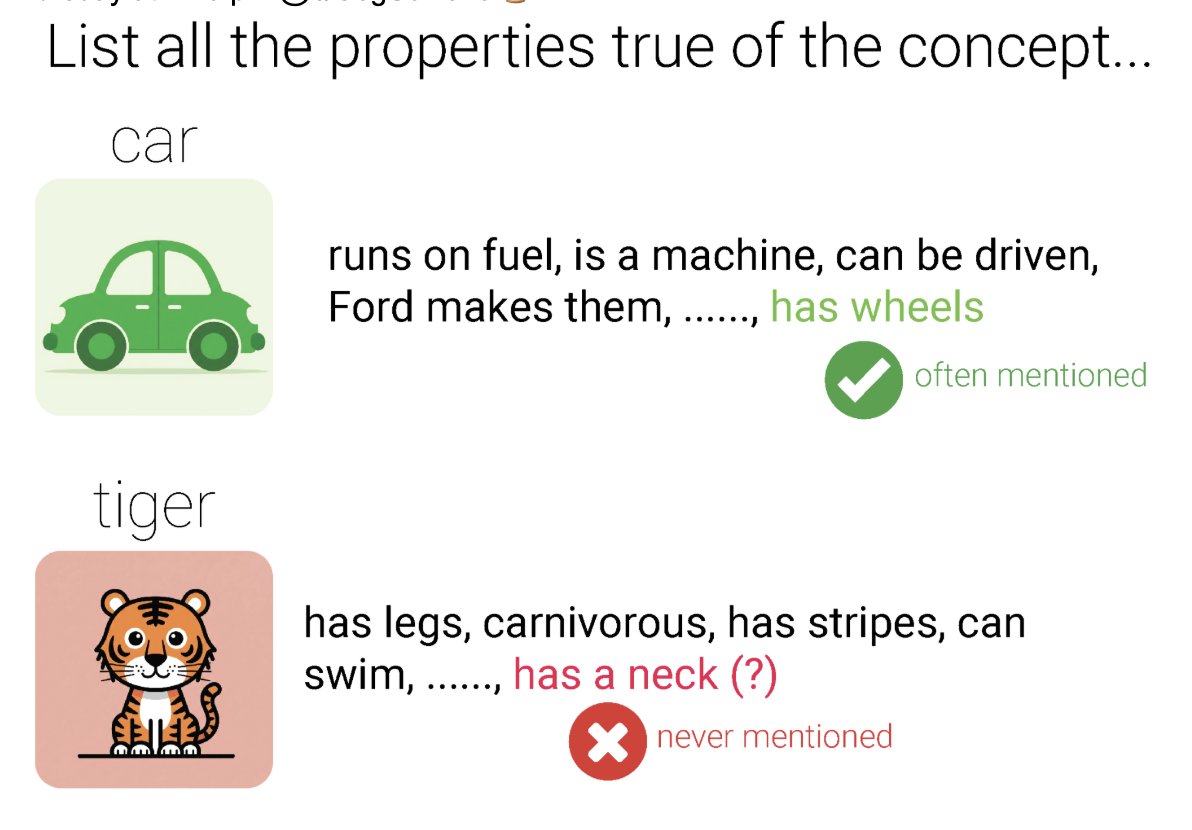

Do cars have wheels? Of course! Do tigers have necks? Of course! While folks know both these facts, they’re not likely to mention the latter. To learn what implications this has for how we measure semantic knowledge, come to our talk T-09-1 on Thursday at 2:15 pm @ #CogSci2025 🧵

0

1

1

We hope this is continued evidence that AI and cog sci can mutually support each other! Thanks to our awesome research team of @siddsuresh97, Tyler Giallanza, Xizheng Yu, Mia Patil, Jon Cohen, and Tim Rogers! Paper 📜 - Code 🧑‍💻 - (end)

github.com

Contribute to Knowledge-and-Concepts-Lab/llm-norms-cogsci2025 development by creating an account on GitHub.

0

0

0

Do cars have wheels? Of course! Do tigers have necks? Of course! While folks know both these facts, they’re not likely to mention the latter. To learn what implications this has for how we measure semantic knowledge, come to our talk T-09-1 on Thursday at 2:15 pm @ #CogSci2025 🧵

1

7

29

RT @allisonchen_227: Does how we talk about AI matter? Yes, yes it does! In our #Cogsci2025 paper, we explore how different messages affect…

0

13

0

RT @ErikBrockbank: "36 Questions That Lead To Love" was the most viewed article in NYT Modern Love. Excited to share new results investigat…

0

3

0

RT @verona_teo: Excited to share our new work at #CogSci2025! We explore how people plan deceptive actions, and how detectives try to see…

0

11

0

RT @kristinexzheng: Linking student psychological orientation, engagement & learning in intro college-level data science. New work @cogsci_…

0

4

0