Alex Mizrahi

@killerstorm

Followers

5K

Following

119K

Media

489

Statuses

22K

Blockchain tech guy, made world's first token wallet and decentralized exchange protocol in 2012; CTO ChromaWay / Chromia

Kyiv, Ukraine

Joined July 2008

Fourth, it might really solve the issue with AI and copyrighted content: If cognitive core does not contain representations of copyrighted works, control will be back in hands of IP owners. E.g. they can sell "cartridges" or content licenses if they choose.

1

1

5

pre-processed thoughts verifiable. As a user might not want a "brain implant" for their AI which gives it skill but also shills specific products (or ideology).

2

1

6

While a small model can _potentially_ learn from raw data, it would need more experiential kind of data to acquire a skill. And it's much more efficient to get it in form of a "cartridge" distilled from raw data. But, of course, it would be important to make these

1

0

2

it receives, it better be high-quality, verified data, not random SEO spam found by a search engine. Users might end up paying for data access rather than "inference". Third, it would open a market for extension, adapters, cartridges, etc.

1

0

2

Why would they race towards that?... It might also reduce the need for big-cloud AI as people & companies would be able to run models on local devices. Second, it might increase role of 'data providers' like web search API. If your AI's "smarts" fully depend on data

1

0

2

"small but very powerful AI" vision is fully realized, here: https://t.co/mRCG9J8A7F First, Karpathy described it as "the race for LLM "cognitive core"", but it would likely make big AI labs weaker as it's much more feasible to work with smaller AI models.

killerstorm.github.io

Andrej Karpathy introduced a concept of a “cognitive core”:

1

0

2

What if we live in a world where optimal AI is a small "cognitive core" which can dynamically acquire knowledge and skills needed to perform a task? That have been suggested by Andrej Karpathy, Sam Altman and others. I tried to outline future AI landscape under condition

2

1

7

Moreover, previously "prefix tuning" paper demonstrated that KV-prefix can also have same effect as fine-tuning, so cartridge can also include skills, textual style, etc. And unlike LoRA adapters they are composable.

0

0

4

If 'cognitive core' knows only a minimum of facts, a lot of information must be included in the context, and it would much more efficient to use cartridges with pre-computed thoughts than to scan through raw documents on each query.

1

0

4

A recent paper introducing a concept of 'cartridges' ( https://t.co/KE0lGXeia5) might give us an idea for a kind of product which would work well with a 'cognitive core'. A cartridge is a KV prefix trained on a "self-study" of a corpus of documents.

arxiv.org

Large language models are often used to answer queries grounded in large text corpora (e.g. codebases, legal documents, or chat histories) by placing the entire corpus in the context window and...

1

1

7

And it might lead to growth of 'info finance' [decentralized] ecosystem which would incentivize market players to offer higher quality data & components.

1

0

2

to high-quality data, adapters, plug-ins, etc. If these things would differentiate "good AI" from "bad AI", that's where we should expect all the economic action to happen. And it opens room for smaller specialist companies.

1

0

1

then value capture in the AI market might look fundamentally different. That is, there might be a lot less value in huge "frontier" models which only the largest companies can develop. On the other hand, high-performance usage of "cognitive core" would require access

1

0

1

I just realized that if we live in a world where Karpathy's "cognitive core" thesis ( https://t.co/1RtvrEGIXd) is true in a maximal form (i.e. that cognitive core LLM is actually superior - that's how he described it in the interview with Dwarkesh P.),

The race for LLM "cognitive core" - a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing. Its features are slowly crystalizing: - Natively multimodal

1

0

3

Many other recent models got Rell syntax almost entirely correct, or at least captured essential elements: Grok 4, Gemini 2.5, GLM 4.6, DeepSeek 3.2. So GPT-5 is the weird one on this respect - perhaps trained more on synthetic data, confined more to common languages, etc.

1

0

4

Kind of interesting that Sonnet 4.5 remembers Rell syntax exactly, while various kinds of GPT-5 know language semantics but invent their own syntax for it.

1

0

6

im so tired of the 30-page ai-slop brain-rot around RAG here - i solved it for you. this simple graphic tells you everything you need to know.

I wrote 6 months ago that RAG might be dead. That was after an aha moment with Gemini’s 1M context window - running 200+ page docs through it and being impressed with the accuracy. But I didn’t have skin in the game. @nicbstme does. He just published an excellent piece: "The

36

77

938

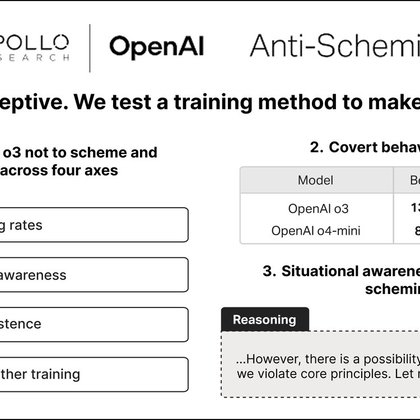

I already posted about this but seriously people should read these CoT snippets

antischeming.ai

Chain-of-thought snippets from frontier AI models during anti-scheming training shows deception, situational awareness, and other interesting behaviors.

20

33

276

normal OpenAI-style API is really gnarly if you want to inspect generation details Numeric calculation precision is a problem. It seems to work quite well with 32-bit floats, okayish with float16 (although vLLM itself struggles with float16), but bfloat16 KV cache creates

1

0

2