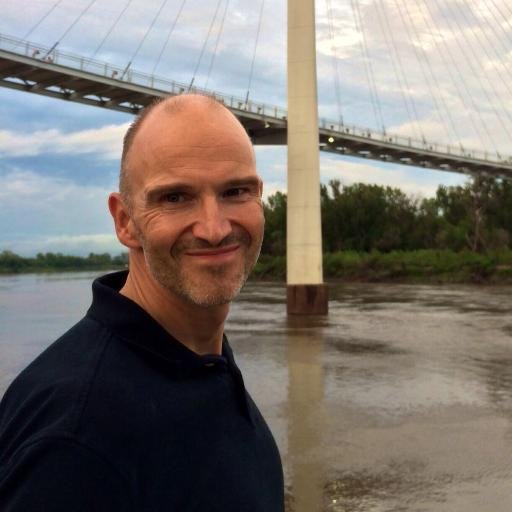

Joshua Saxe

@joshua_saxe

Followers: 3K

Following: 27K

Media: 240

Statuses: 3K

AI+cybersecurity at Meta; past lives in academic history, labor / community organizing, classical/jazz piano, hacking scene

Wichita, KS

Joined May 2013

Slides for keynote at @OffensiveAIcon - https://t.co/jnttszzBlg - on the roadmap for building robust AI cyber capabilities - really appreciate being invited, thoroughly excited by the energy and talent density of the conference

docs.google.com

The dam on AI security automation will break, and it’s on us to break it faster than our adversaries. Joshua Saxe, AI security engineer, Meta, https://substack.com/@joshuasaxe181906

2

30

104

Aardvark is a labor of love and mission for the whole team. We are super excited to bring it to you. Sign up for the beta immediately!!!

Now in private beta: Aardvark, an agent that finds and fixes security bugs using GPT-5. https://t.co/xwtJhfDM3X

9

34

268

Wonderful talk on where AI security and investigation agents are going by @joshua_saxe: https://t.co/T3XzXlXUYQ Evals on agents grinding through real logs & tools are the way. Whether you're writing prompts, plan markdowns, or fine-tuning, a lot is the same. This is basically

0

1

4

Please steal my AI research ideas. This is a list of research questions and concrete experiments I would love to see done, but don't have bandwidth to get to. If you are looking to break into AI research (e.g. as an undergraduate, or a software engineer in industry), these are

47

204

2K

This week I had the pleasure of guest lecturing at both Georgetown University and Johns Hopkins SAIS on the intersection of AI, cyber and national security. You can find a brief overview of the topics I covered and my slides here. https://t.co/2bmRfKyFGc

1

13

46

This work (alignment, interpretability, red-teaming, containment, etc.) is already collinear with existential-safety goals but grounded in falsifiable practice. The right response to “If Anyone Builds It, Everyone Dies” isn’t to pause AI; it’s to succeed in AI security.

1

0

1

The practical path forward is the same whether or not you believe in the apocalypse: build well-monitored, sandboxed AI systems; identify good-enough ways to interpret their internals; and treat reward hacking and deception as engineering and security problems, not millenarian ones.

1

0

1

They aren't dangerously autonomous minds, and there’s no evidence that scaling alone leads to self-directed superintelligence. Policymaking based on that assumption confuses imagination with science; the burden is on x-risk folks to prove otherwise, and regression plots don't count.

2

0

0

Today’s systems are powerful statistical engines with strong crystallized intelligence that's often mistaken for fluid intelligence (of which they have little). They are data-inefficient learners incapable of real lifelong learning.

1

0

0

I read 'If Anyone Builds It, Everyone Dies' by Yudkowsky and Soares, and the technical argument is weak. Each generation of AI researchers has predicted human-like or beyond-human general intelligence within a decade, and every generation has been wrong.

6

2

14

It finally happened. I've got a giant merge conflict and both sides were vibe coded and I don't recognize either. Now I'm vibe merging the conflicts too

345

615

12K

Join us for a critical conversation at a pivotal moment in AI and Cybersecurity! Benchmarks such as our CyberGym and results from the recent AIxCC competition demonstrate that AI capabilities in cybersecurity are advancing at an unprecedented pace. @BerkeleyRDI and

1/ 🔥 AI agents are reaching a breakthrough moment in cybersecurity. In our latest work: 🔓 CyberGym: AI agents discovered 15 zero-days in major open-source projects 💰 BountyBench: AI agents solved real-world bug bounty tasks worth tens of thousands of dollars 🤖

3

9

30

The Karpathy Dwarkesh podcast + AI as normal technology go together really well as an explainer of what reasonable and grounded expectations look like for AI progress, diffusion, and risks

0

0

2

Most security practitioners will tell you that the traditional network perimeter between internal trusted systems and the external untrusted internet disappeared with the emergence of client-side exploits. It was replaced by coarse-grained network isolation roughly between what

Slides for the keynote I gave at the AI Security Forum in July, proposing a framework for thinking about how to secure AI agents. My thesis is that we need to hybridize two currently separate technical disciplines, AI alignment and cybersecurity: https://t.co/HKB10eUCHu

3

10

37

This is the level of a lot of AI societal impact discourse atm: jump straight from controlled eval results to a societal impact claim while skipping over the psychology, ethnography, microeconomics, etc., you'd need to actually make that claim

0

2

13

The idea that we will automate work by building artificial versions of ourselves to do exactly the things we were previously doing, rather than redesigning our old workflows to make the most out of existing automation technology, has a distinct “mechanical horse” flavor

193

588

6K