Jeongsoo Park

@jespark0

Followers

144

Following

417

Media

7

Statuses

26

PhD student @UMichCSE

Joined May 2022

Can AI image detectors keep up with new fakes? Mostly, no. Existing detectors are trained using a handful of models. But there are thousands in the wild! Our work, Community Forensics, uses 4800+ generators to train detectors that generalize to new fakes. #CVPR2025 🧵 (1/5)

1

9

23

Hello! If you are interested in dynamic 3D or 4D, don't miss the oral session 3A at 9 am on Saturday: @zhengqi_li will be presenting "MegaSaM" I'll be presenting "Stereo4D" and @QianqianWang5 will be presenting "CUT3R"

1

6

36

Excited to share our CVPR 2025 paper on cross-modal space-time correspondence! We present a method to match pixels across different modalities (RGB-Depth, RGB-Thermal, Photo-Sketch, and cross-style images) — trained entirely using unpaired data and self-supervision. Our

1

28

121

Ever wondered how a scene sounds👂 when you interact👋 with it? Introducing our #CVPR2025 work "Hearing Hands: Generating Sounds from Physical Interactions in 3D Scenes" -- we make 3D scene reconstructions audibly interactive! https://t.co/tIcFGJtB7R

2

34

97

The data is available on Hugging Face, as well as the pipeline code! Come chat with us at #CVPR2025! We’ll be presenting Friday afternoon at poster #274. (work w/ @andrewhowens) 📄 Project Page: https://t.co/vWLKi8DCYC 💾 Dataset/Code: https://t.co/EssZshboqe 🧵 (5/5)

huggingface.co

0

0

1

Each image is labeled with detailed metadata, enabling more than just fake detection. We are excited to see what the community can build with this data! 🧵 (4/5)

1

0

0

The Community Forensics dataset offers 2.7 million images from 4,803 generative models We captured everything from popular commercial models to thousands of niche, open source generative models to better represent the modern landscape. 🧵 (3/5)

1

0

0

We found that model variety is crucial for generalization. Detectors trained on our dataset are good at spotting images from new, unseen generators. Adding more models to the training set, even similar ones, improves robustness across the board. 🧵(2/5)

1

0

0

Hello! If you like pretty images and videos and want a rec for CVPR oral session, you should def go to Image/Video Gen, Friday at 9am: I'll be presenting "Motion Prompting" @RyanBurgert will be presenting "Go with the Flow" and @ChangPasca1650 will be presenting "LookingGlass"

3

16

64

Ever wish YouTube had 3D labels? 🚀Introducing🎥DynPose-100K🎥, an Internet-scale collection of diverse videos annotated with camera pose! Applications include camera-controlled video generation🤩and learned dynamic pose estimation😯 Download: https://t.co/iL3iqqzYL8

2

38

178

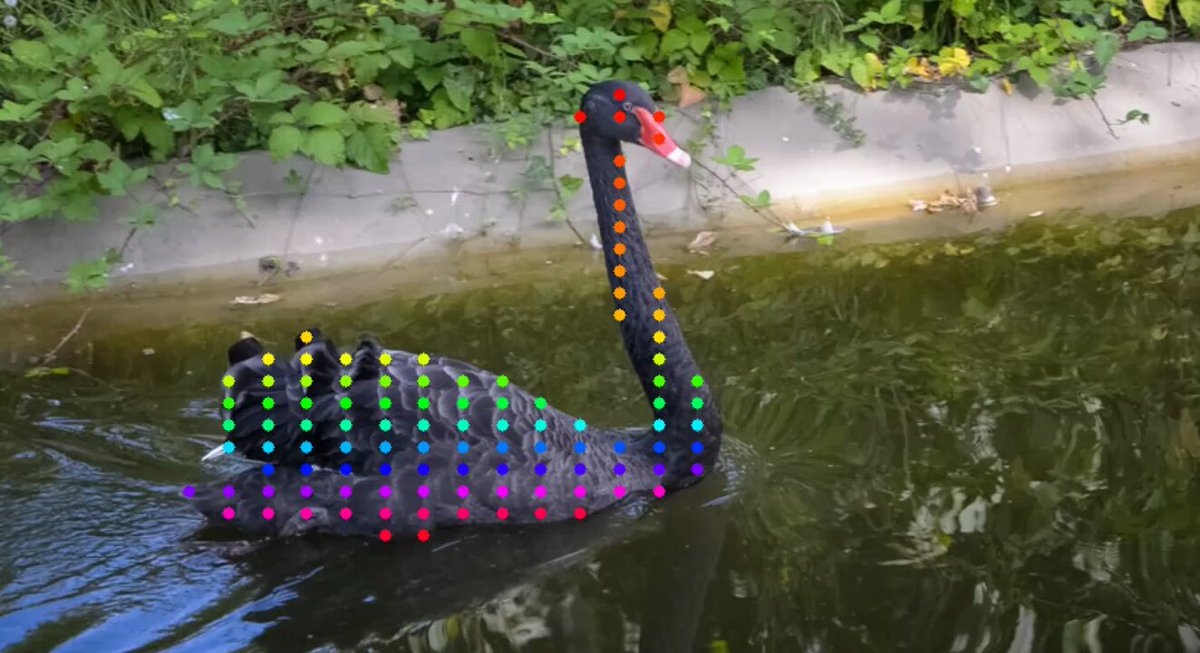

We present Global Matching Random Walks, a simple self-supervised approach to the Tracking Any Point (TAP) problem, accepted to #ECCV2024. We train a global matching transformer to find cycle consistent tracks through video via contrastive random walks (CRW).

1

22

85

📢Presenting 𝐃𝐄𝐏𝐈𝐂𝐓: Diffusion-Enabled Permutation Importance for Image Classification Tasks #ECCV2024 We use permutation importance to compute dataset-level explanations for image classifiers using diffusion models (without access to model parameters or training data!)

1

12

30

This year I'm organizing ML4H Outreach program, and want to highlight our Author Mentorship program. Whether you're a mentee looking for guidance or a more experienced researcher with time to mentor, we'd love to have you be a part of this program! Deadline to apply is July 5!

Are you planning to submit a paper to ML4H 2024 and would like to receive mentorship from senior scientists? Are you interested in mentoring early-stage researchers? Sign up for the ML4H Submission Mentorship Program by July 5th AoE! Program details:

0

8

24

These spectrograms look like images, but can also be played as a sound! We call these images that sound. How do we make them? Look and listen below to find out, and to see more examples!

1

41

168

NeRF captures visual scenes in 3D👀. Can we capture their touch signals🖐️, too? In our #CVPR2024 paper Tactile-Augmented Radiance Fields (TaRF), we estimate both visual and tactile signals for a given 3D position within a scene. Website: https://t.co/4pGTPKm8xh arXiv:

10

21

116

What do you see in these images? These are called hybrid images, originally proposed by Aude Oliva et al. They change appearance depending on size or viewing distance, and are just one kind of perceptual illusion that our method, Factorized Diffusion, can make.

10

103

450

Can you make a jigsaw puzzle with two different solutions? Or an image that changes appearance when flipped? We can do that, and a lot more, by using diffusion models to generate optical illusions! Continue reading for more illusions and method details 🧵

17

116

616

Our ViT-Ti shows up to 39.2%/17.9% faster train/eval without accuracy loss compared to RGB. Also, our data augmentation pipeline is up to 93.2% faster than previous works. For more details, please check out our website!

0

1

8

Data augmentation is vital for training a good-performing model. We directly augment JPEG to speed up training, instead of converting to RGB, augment, and converting it back.

1

2

7