Insu Han

@insu_han

Followers: 115 · Following: 93 · Media: 3 · Statuses: 9

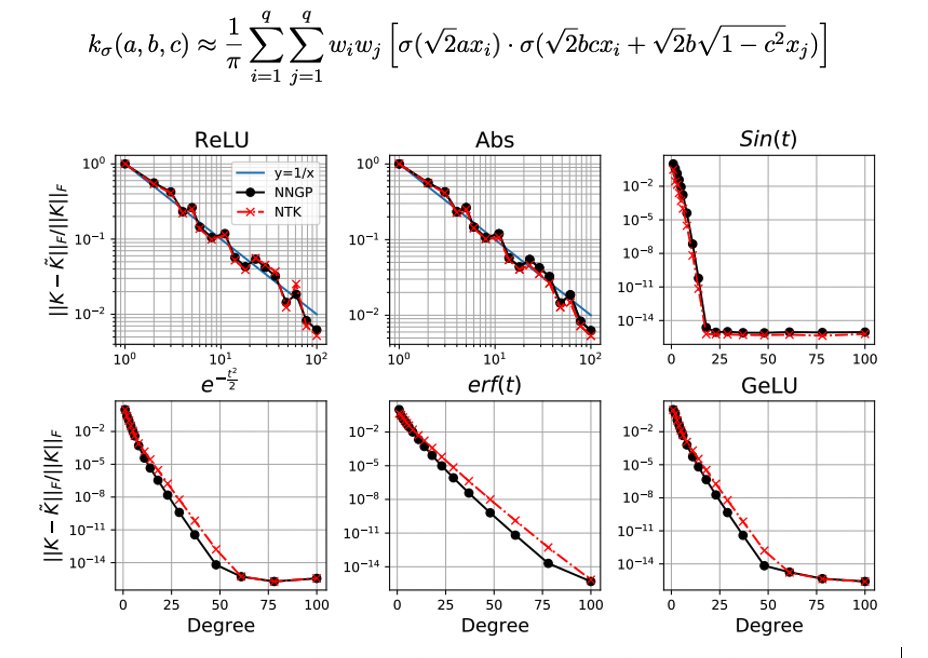

Most infinite-width NTK and NNGP kernels are based on the ReLU activation. We propose a method for computing neural kernels with *general* activations. For homogeneous activations, we approximate the kernel matrices with linear-time sketching algorithms.
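
As a minimal illustration of what a neural kernel with a general activation is (not the authors' method), the one-hidden-layer NNGP kernel can be written as K(x, y) = E_w[σ(w·x) σ(w·y)], and any activation σ plugs into that expectation. The pure-Python sketch below estimates it with random features, which also scales linearly in the number of inputs. Assumptions: Gaussian weights with variance 1/d, no bias term, a single hidden layer; plain Monte Carlo random features are swapped in for the sketching algorithms the post refers to; the helper names (`random_features`, `relu_nngp_exact`, `dot`) are hypothetical. For ReLU, the estimate can be checked against the closed-form degree-1 arc-cosine kernel.

```python
import math
import random
from typing import Callable, List, Sequence


def relu(z: float) -> float:
    """ReLU, a degree-1 homogeneous activation."""
    return max(z, 0.0)


def dot(p: Sequence[float], q: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(p, q))


def random_features(
    xs: Sequence[Sequence[float]],
    act: Callable[[float], float],
    m: int = 20_000,
    seed: int = 0,
) -> List[List[float]]:
    """Map each input x to m features act(w_j . x) / sqrt(m), w_j ~ N(0, I/d).

    The inner product of two feature vectors is an unbiased Monte Carlo
    estimate of the one-hidden-layer NNGP kernel E_w[act(w . x) act(w . y)]
    (assumed: weight variance 1/d, no bias). Building the n x m feature
    matrix costs O(n * m * d), i.e. linear in the number of inputs n.
    """
    d = len(xs[0])
    rng = random.Random(seed)
    ws = [[rng.gauss(0.0, 1.0 / math.sqrt(d)) for _ in range(d)] for _ in range(m)]
    scale = 1.0 / math.sqrt(m)
    return [[scale * act(dot(w, x)) for w in ws] for x in xs]


def relu_nngp_exact(x: Sequence[float], y: Sequence[float]) -> float:
    """Closed-form ReLU NNGP kernel (degree-1 arc-cosine kernel), same scaling."""
    d = len(x)
    a, b, c = dot(x, x) / d, dot(y, y) / d, dot(x, y) / d
    # Clamp to [-1, 1] to guard acos against floating-point round-off.
    cos_t = max(-1.0, min(1.0, c / math.sqrt(a * b)))
    t = math.acos(cos_t)
    return math.sqrt(a * b) / (2.0 * math.pi) * (math.sin(t) + (math.pi - t) * cos_t)


if __name__ == "__main__":
    xs = [[1.0, 0.5, -0.3], [0.2, 1.0, 0.4]]
    phi = random_features(xs, relu)
    print("ReLU random-feature estimate:", dot(phi[0], phi[1]))
    print("ReLU closed form:            ", relu_nngp_exact(xs[0], xs[1]))
    # Any other activation goes through the same code path, e.g. math.tanh:
    phi_tanh = random_features(xs, math.tanh)
    print("tanh random-feature estimate:", dot(phi_tanh[0], phi_tanh[1]))
```

The n × m feature matrix Φ gives K ≈ ΦΦᵀ, so products such as Φ(Φᵀv) cost O(nm) rather than the O(n²) needed to form K explicitly. The Monte Carlo error shrinks only as 1/√m, which is where more sample-efficient sketches can help.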

We open-source the NNGP and NTK kernels for new activations in the Neural Tangents dev version, and the sketching algorithm at [link]. Joint work with Amir Zandieh, @ARomanNovak, @hoonkp, @Locchiu, and @aminkarbasi.

RT @dohmatobelvis: Good news: Our paper on Scalable learning and MAP inference in nonsymmetric Determinantal Point Processes.

RT @dohmatobelvis: Happy to share our recent preprint with @mikegartrell, Insu Han, V.-E. Brunel, J. Gillenwater, on Scalable learning and…
