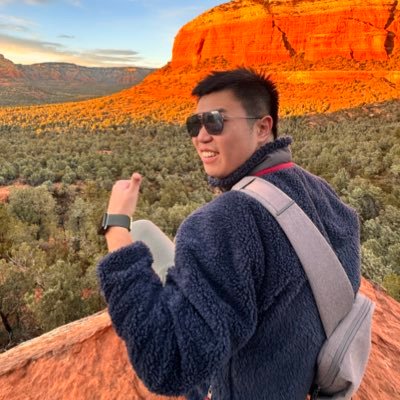

Tianrong Chen 陈天荣

@iamct_r

Followers: 365
Following: 884
Media: 14
Statuses: 198

IamctR is “I am Chen Tian Rong”. Ex-PhD at Georgia Tech. Diffusion models, Schrödinger bridges, stochastic optimal control. Currently @apple

Atlanta, GA

Joined June 2022

Check out the new addition to our TarFlow franchise. TL;DR: normalizing flows “just work” for generating videos. This adds another strong piece of evidence for our argument that NFs are capable generative models, and I’m now more convinced than ever that they will keep getting better.

STARFlow gets an upgrade: it now works on videos🎥 We present STARFlow-V: End-to-End Video Generative Modeling with Normalizing Flows, an invertible, causal video generator built on autoregressive flows! 📄 Paper https://t.co/fHApEwGg8j 💻 Code https://t.co/ATU9XtacsQ (1/10)

I’ll be in San Diego for #NeurIPS2025, Dec 3-7. DM me if you’re interested in diffusion models, multimodal models, or protein generative models! We’re looking for full-time employees (FTE) to join us working on generative models. You can also find me at the Apple booth on Dec 3, 3-5 pm.

New preprint & open-source! 🚨 “SimpleFold: Folding Proteins is Simpler than You Think” ( https://t.co/cXQYliK7Ws). We ask: Do protein folding models really need expensive and domain-specific modules like pair representation? We build SimpleFold, a 3B scalable folding model solely

I'm at #NeurIPS2025 Tue-Fri, presenting 3 papers from our 🍎Apple ML research team. I'm interested in LLMs, RL, reasoning, and diffusion LLMs. We also have FY26 research internship and full-time positions available. DM me if you're interested in a chat!

Thanks @_akhaliq for sharing our work!! All the code for STARFlow-V and the prior work STARFlow (NeurIPS Spotlight, presented this Thursday) has been released (https://t.co/ATU9XtaKio). The T2I model weights are also available now (https://t.co/DSeFXRYAqZ). Let's push scalable NFs further!

Check out our new work!

LLMs are notorious for "hallucinating": producing confident-sounding answers that are entirely wrong. But with the right definitions, we can extract a semantic notion of "confidence" from LLMs, and this confidence turns out to be calibrated out-of-the-box in many settings (!)

You might also be interested in our TARFlow series!
TARFlow: https://t.co/Gb7NETqEw2 (ICML 2025 Oral)
STARFlow: https://t.co/bpkY7SYx4z (NeurIPS 2025 Spotlight)
TARFlow-LM: https://t.co/BLHoXt9m5Q (NeurIPS 2025)
… and maybe more soon🤖

I'm hiring 2 PhD students & 1 postdoc @GeorgiaTech for Fall '26. Motivated students, please consider us, especially those in:
* ML + Quantum
* Deep Learning + Optimization
- PhD: see https://t.co/h4anjm6b8j
- Postdoc: see https://t.co/548XVaahx3 & https://t.co/4ahNE7OOwV
Retweets appreciated!

We’re excited to share our new paper: Continuously-Augmented Discrete Diffusion (CADD), a simple yet effective way to bridge discrete and continuous diffusion models on discrete data, such as in language modeling. [1/n] Paper: https://t.co/fQ8qxx4Pge

Lastly, huge thanks to all of my amazing collaborators! @D_Berthelot_ML @zhaisf @jmsusskind @thoma_gu @UnderGroundJeg

Thus, we achieve better performance with the same limited sampling budget :) For more experimental results, please refer to the paper.

The generated results remain diverse even when the initial noise fed into the network is identical and the dynamics are solved by an ODE solver under the same SNR discretization.

The difference arises in sampling: the momentum system is (loosely speaking) a pseudo-SDE, in which the dynamics are deterministic but stochasticity emerges from interactions between variables. Compared with the discretized Wiener process (left), this pseudo-noise is smoother.
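
To make the smoothness claim concrete, here is a toy Python comparison built on our own simplifying assumptions, not on the paper's sampler: a discretized Wiener path versus the position of a hypothetical momentum system in which Gaussian kicks hit only the velocity v, while the position x is updated deterministically from v. Roughness is measured by the sum of squared increments (discrete quadratic variation), which stays near the time horizon T for the Wiener path but shrinks with the step size dt for the momentum-driven path. All function names are illustrative.

```python
import math
import random


def wiener_path(n_steps, dt, rng):
    """Discretized Wiener process: every increment is drawn from N(0, dt)."""
    w, path = 0.0, [0.0]
    for _ in range(n_steps):
        w += rng.gauss(0.0, math.sqrt(dt))
        path.append(w)
    return path


def momentum_path(n_steps, dt, rng):
    """Toy momentum system (hypothetical): noise enters only the velocity v,
    and the position x receives randomness solely through its coupling to v."""
    x, v, path = 0.0, 0.0, [0.0]
    for _ in range(n_steps):
        v += rng.gauss(0.0, math.sqrt(dt))  # stochastic kick on the velocity only
        x += v * dt                          # deterministic position update
        path.append(x)
    return path


def quadratic_variation(path):
    """Sum of squared increments: about T for Brownian motion, O(dt) for smooth paths."""
    return sum((b - a) ** 2 for a, b in zip(path, path[1:]))


if __name__ == "__main__":
    rng = random.Random(0)
    n, dt = 1000, 1e-3  # horizon T = n * dt = 1
    print("Wiener:  ", quadratic_variation(wiener_path(n, dt, rng)))
    print("Momentum:", quadratic_variation(momentum_path(n, dt, rng)))
```

With T = 1 the Wiener value lands close to 1, while the momentum path's value is smaller by several orders of magnitude, which is the discrete counterpart of "smoother pseudo-noise".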

First of all, they are identical to vanilla diffusion models during training, even with the additional input variables. This means:
1. There is no training benefit from the augmented system.
2. A pre-trained diffusion model can even be used DIRECTLY for sampling in these augmented systems.

Here is our niche paper accepted at NeurIPS 2025 (https://t.co/dJoM1UitWk) :) It also summarizes previous augmented diffusion models (PFGM, CLD, AGM, etc.) and investigates in which cases, if any, they are actually useful in practice.

Three generative modeling papers from my team were accepted to #NeurIPS2025: two on TarFlow and one on diffusion. 1. StarFlow (Spotlight), https://t.co/ynB2z4Xgfa, scales TarFlow in latent space and demonstrates unprecedented sample quality from pure NF models. Work led by @thoma_gu.

Excited that STARFlow has been accepted at #NeurIPS2025 as a **Spotlight** paper! Looking forward to seeing more research directions on scalable normalizing flows as an alternative to the existing diffusion-dominated world! 🧐 Huge congrats to my amazing collaborators!!

I will be attending #CVPR2025 and presenting our latest research at Apple MLR! Specifically, I will present our highlight poster, world-consistent video diffusion (https://t.co/VciUQMeCQI), and give three invited workshop talks, including one covering our recent preprint ★STARFlow★! (0/n)

Normalizing Flows are coming back to life. I'll be attending #ICML2025 on Jul 17 to present TarFlow: the Oral presentation is at 4:00 PM in West Ballroom A, and the poster is at 4:30 PM in East Exhibition Hall A-B #E-2911. Stop by; I promise it will be worth your time.

We set out to make Normalizing Flows work really well, and we are happy to report our findings in our paper (https://t.co/SbdXPZy6ed) and code (https://t.co/CMOo3svcPK). [1/n]

@shaulneta @urielsinger @itai_gat @lipmanya Congrats on the amazing work! Would it be interesting to mention possible connections to our previous work, DART (https://t.co/cNf6RjaWbL)? 👀

Normalizing flows have some inherent limitations. However, if some of these challenges can be mitigated, this direction may still hold promise as a competitive and scalable approach to generative modeling. Here is another attempt of ours in this direction.

In our latest work, "STARFlow: Scaling Latent Normalizing Flows for High-resolution Image Synthesis", we show that normalizing flows (using the change-of-variables formula) can be scaled to synthesize high-resolution, text-conditioned images at diffusion-level quality. (1/n)
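
As a rough illustration of the change-of-variables formula, log p_X(x) = log p_Z(f(x)) + log |det ∂f/∂x|, here is a minimal Python sketch of a toy affine autoregressive flow, in the general spirit of the TarFlow family. It is not the papers' implementation: `toy_conditioner` is a hypothetical stand-in for the causal Transformer that would predict each dimension's shift and log-scale from the preceding dimensions, and all names are made up for this example. Because each z_i depends only on x_1..x_i, the Jacobian is triangular, so its log-determinant is just the sum of the diagonal log-scales.

```python
import math
from typing import Callable, List, Tuple

# Hypothetical conditioner type: maps the prefix x[:i] to (shift mu_i, log-scale alpha_i).
Conditioner = Callable[[List[float]], Tuple[float, float]]


def toy_conditioner(prefix: List[float]) -> Tuple[float, float]:
    """Toy stand-in for a causal network; depends only on earlier dimensions."""
    s = sum(prefix)
    return 0.5 * s, 0.1 * math.tanh(s)


def forward(x: List[float], cond: Conditioner) -> Tuple[List[float], float]:
    """Data -> latent: z_i = (x_i - mu_i) * exp(-alpha_i), with (mu_i, alpha_i) from x[:i]."""
    z, log_det = [], 0.0
    for i, xi in enumerate(x):
        mu, alpha = cond(x[:i])
        z.append((xi - mu) * math.exp(-alpha))
        log_det -= alpha  # diagonal Jacobian entry dz_i/dx_i = exp(-alpha_i)
    return z, log_det


def inverse(z: List[float], cond: Conditioner) -> List[float]:
    """Latent -> data, one dimension at a time (sequential, as in autoregressive sampling)."""
    x: List[float] = []
    for zi in z:
        mu, alpha = cond(x)  # conditions on the already reconstructed prefix
        x.append(zi * math.exp(alpha) + mu)
    return x


def log_likelihood(x: List[float], cond: Conditioner) -> float:
    """Change of variables with a standard-normal base: log p_Z(f(x)) + log|det df/dx|."""
    z, log_det = forward(x, cond)
    log_pz = sum(-0.5 * zi * zi - 0.5 * math.log(2.0 * math.pi) for zi in z)
    return log_pz + log_det


if __name__ == "__main__":
    x = [0.3, -1.2, 0.7]
    z, _ = forward(x, toy_conditioner)
    print(inverse(z, toy_conditioner))  # recovers x up to floating-point error
    print(log_likelihood(x, toy_conditioner))
```

In this sketch `inverse` must run dimension by dimension, which is why autoregressive flows are sequential at generation time, whereas with a causal network the likelihood direction (`forward`) can be evaluated for all dimensions at once.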
