Helicone

@helicone_ai

Followers: 5K
Following: 780
Media: 375
Statuses: 1K

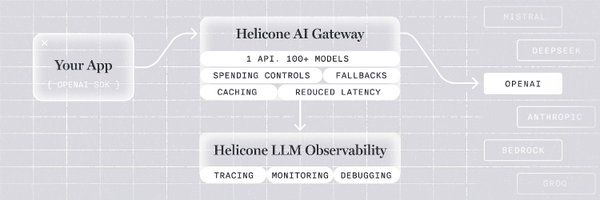

The LLMOps platform behind the fastest-growing AI companies. AI Gateway + LLM Observability. Fully open-source. Fastest on the market.

San Francisco, US

Joined December 2022

- Anthropic file & OpenAI Responses API support
- LangChain & LangGraph integration
- Claude 3 Haiku, Azure GPT-5-mini, and more models added
- Codex + AI Gateway easy setup
- Filter requests by referrer
- Dashboard UI fixes
and more deployed! 🚢

Any more feature requests? hmu!

It's integration season at Helicone 🔌
- Launched a Vercel AI SDK Helicone provider
- Shipped support for OpenAI's Responses API
- LangChain and LangGraph integrations
- Expanded model support (Kimi K2, Mistral, Azure OpenAI, DeepSeek & more!)
- Helicone n8n node
and more!

Join thousands of AI engineers building more reliable AI applications today. Start free →

helicone.ai

AI Gateway & LLM Observability

🔧 Developer experience upgrade
- Semantic Kernel docs are live
- Fixed OpenAI streaming response views
- Prettified ALL model names
- EU API key generation fixed

🧠 New models supported

First, we got a new provider integrated: welcome Chutes!

Some of the new models supported:
- Claude 3.5 Haiku
- DeepSeek R1T2 Chimera
- Baidu's Ernie 4.5 Thinking
- Qwen3 VL 235B (multimodal!)
- Qwen2.5
- Qwen3 Coder models

⚡ New AI Gateway caching layer

New intelligent caching for prompt bodies so your repeated requests go through to your chosen provider way faster. This is perfect for those production workloads where every millisecond counts.

Your users (and wallet) will thank you 💰
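The caching post does not describe how the layer works internally. As a rough illustration only, here is a minimal Python sketch of a cache keyed on the full request body, assuming an exact-match lookup on a SHA-256 hash of the canonicalized JSON. `PromptCache`, `cache_key`, and `fake_provider` are hypothetical names, and a production gateway would also need TTLs, eviction, and streaming support. In this sketch, a repeated body is answered straight from the cache without calling the provider again.

```python
import hashlib
import json
from typing import Callable


def cache_key(body: dict) -> str:
    """Hash a request body; sort_keys makes the key independent of field order."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class PromptCache:
    """Hypothetical in-memory cache keyed on the full request body (no TTL or eviction)."""

    def __init__(self, call_provider: Callable[[dict], str]) -> None:
        self._call_provider = call_provider
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def complete(self, body: dict) -> str:
        key = cache_key(body)
        if key in self._store:
            # Identical body seen before: answer without calling the provider.
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_provider(body)
        self._store[key] = response
        return response


calls = []


def fake_provider(body: dict) -> str:
    calls.append(body)  # record every real provider call
    return "echo: " + body["messages"][-1]["content"]


cache = PromptCache(fake_provider)
request = {"model": "example-model", "messages": [{"role": "user", "content": "hi"}]}
cache.complete(request)
cache.complete({"messages": request["messages"], "model": "example-model"})  # same body, other key order
print(cache.hits, cache.misses, len(calls))  # 1 1 1
```

Canonicalizing with `sort_keys=True` means two requests that differ only in JSON field order share one cache entry.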

- A new caching layer for the AI Gateway
- New models supported, including the latest Claude Haiku!
- Support for tracking multimodal models
- Improved DX for streaming, model names, and key generation
- and more!

Here's everything we shipped this week: 🧵

New for AI app devs: Billing for LLM tokens with @stripe
- Charge users for token usage (+X% margin)
- Call LLMs with our proxy API or via partners: @OpenRouterAI, @cloudflare, @vercel, @helicone_ai
- Update prices as models change costs

Get access here: https://t.co/5IMEWCRepx
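To make the "+X% margin" idea concrete, here is a small Python sketch of the arithmetic for billing a user for token usage with a percentage markup. It is not Stripe's or Helicone's API: `ModelPrice`, `charge_for_usage`, and the per-million-token prices are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from decimal import ROUND_HALF_UP, Decimal


@dataclass(frozen=True)
class ModelPrice:
    # Hypothetical provider prices in USD per one million tokens.
    input_per_million: Decimal
    output_per_million: Decimal


def charge_for_usage(
    price: ModelPrice, input_tokens: int, output_tokens: int, markup_pct: Decimal
) -> Decimal:
    """Return what to bill the end user: provider cost plus a percentage markup."""
    provider_cost = (
        price.input_per_million * input_tokens
        + price.output_per_million * output_tokens
    ) / Decimal(1_000_000)
    billed = provider_cost * (1 + markup_pct / 100)
    # Round to whole cents for the invoice line.
    return billed.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Example: $3 / $15 per million input/output tokens, 20% margin.
price = ModelPrice(input_per_million=Decimal("3"), output_per_million=Decimal("15"))
print(charge_for_usage(price, 200_000, 50_000, Decimal("20")))  # 1.62
```

Because prices are passed in rather than hard-coded, swapping in a new `ModelPrice` when a provider changes its rates is enough to change what users are billed.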

Traffic to @getbranddev’s AI Query API spiked this week, and I found out I was passing way too many input tokens per request (it's much harder to sanitize html than I previously thought). @helicone_ai made it super easy to debug and get costs back under control. Observability

Test it out today and save hours of time and resources 👩🏻‍💻 https://t.co/HzTTF51SUL

docs.helicone.ai

Use any LLM provider through a single OpenAI-compatible API with intelligent routing, fallbacks, and unified observability

4/ Are they easy to integrate? Depends on the gateway, but the best ones are! At Helicone, we expose an OpenAI-compatible API, so switching over takes minimal effort on your side.

3/ Why are they helpful?
- New models and providers come out every week
- You need constant provider uptime
- You always want the cheapest/fastest provider
- You don't want users to have to bring their own API key
- You don't want the overhead of customizing every fallback

2/ How do they work in practice?
1. User sends a request to OpenAI
2. The request goes through an AI Gateway
3. The request is routed to the best LLM provider (AWS, Azure, OpenAI, etc.)
4. Responses are cached and traced
5. The gateway returns the response to the user
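The five steps in the thread can be sketched in a few lines of Python. This is an illustrative toy, not Helicone's implementation. It assumes that "best provider" means the cheapest one that is currently answering, uses an in-memory dict as the cache and a list as the trace, and the provider names and prices are made up.

```python
from dataclasses import dataclass, field
from typing import Callable


class ProviderError(Exception):
    """Raised by a provider call that fails (outage, rate limit, etc.)."""


@dataclass
class Provider:
    name: str
    cost_per_million_tokens: float  # hypothetical price used to rank providers
    call: Callable[[str], str]


@dataclass
class Gateway:
    providers: list[Provider]
    cache: dict[str, str] = field(default_factory=dict)
    trace: list[tuple[str, str]] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        # Step 4 (read side): serve repeated prompts from the cache.
        if prompt in self.cache:
            self.trace.append(("cache", "hit"))
            return self.cache[prompt]
        # Step 3: try providers cheapest-first, falling back when one fails.
        for provider in sorted(self.providers, key=lambda p: p.cost_per_million_tokens):
            try:
                response = provider.call(prompt)
            except ProviderError:
                self.trace.append((provider.name, "error"))
                continue
            # Step 4: trace and cache the successful response.
            self.trace.append((provider.name, "ok"))
            self.cache[prompt] = response
            # Step 5: hand the response back to the caller.
            return response
        raise ProviderError("all providers failed")


def unavailable(prompt: str) -> str:
    raise ProviderError("provider-a is down")


def echo(prompt: str) -> str:
    return f"answer to {prompt!r}"


gateway = Gateway([Provider("provider-a", 0.5, unavailable), Provider("provider-b", 1.0, echo)])
gateway.complete("hello")
gateway.complete("hello")
print(gateway.trace)  # [('provider-a', 'error'), ('provider-b', 'ok'), ('cache', 'hit')]
```

Ranking by a single cost number keeps the sketch short; a real router would likely also weigh latency and provider health.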

1/ What are AI Gateways? You can imagine them as middleware between your app and the AI models you're querying. They facilitate integration, deployment, and management of AI tools, so you're not constantly updating your code & customizing it for every LLM provider.

The AI Gateway market is estimated to be $390M+, growing to $9B by 2031. Here's everything you need to know about AI Gateways👇

What you get with the Helicone AI Gateway:
- 100% uptime
- Always the cheapest provider
- LLM observability by default
- Rate limits, caching, guardrails
- Protection from prompt injections & data exfiltration

One unified bill.

easily one of the coolest things for app devs we’ve built recently

Quoting @stripe: "New for AI app devs: Billing for LLM tokens with @stripe" (full post above)

Released only 48h ago, you can already access the latest DeepSeek 3.2 through our AI Gateway! 🎯

billing users for llm usage is complex bc:
- users adding their api keys is terrible ux
- tracking usage per user requires credits or escrows in advance
- it's hard to estimate how much a user will consume

watch @stripe x @helicone_ai abstract those away with llm billing! 🌶️

Previewing a new @stripe project – billing for LLM tokens. You ship while we:
* auto-update model prices as they change
* enforce your markup % with usage-based billing
* auto-record usage via an LLM proxy: Stripe’s (new!), @OpenRouterAI, @cloudflare, @vercel, @helicone_ai
🧵
