Hayoung Jung

@hayounggjung

Followers: 278 · Following: 3K · Media: 18 · Statuses: 231

PhD @PrincetonCS @PrincetonCITP. Social Computing, Computational Social Science, and NLP. Previously @uwcse @uw_ischool @uwnlp.

Princeton, NJ

Joined March 2014

I am at #EMNLP2025🇨🇳 to present our main paper *MythTriage: Scalable Detection of Opioid Use Disorder Myths on a Video-Sharing Platform*! Come by to discuss details! 🏦 Location: Hall C ⏲️Time: 11AM-12:30PM 🔗 Paper: https://t.co/Bohi4FxJE3 📁 Repo: https://t.co/2iO6c2MR86

2

2

33

1/ Hiring PhD students at CMU SCS (LTI/MLD) for Fall 2026 (Deadline 12/10) 🎓 I work on open, reliable LMs: augmented LMs & agents (RAG, tool use, deep research), safety (hallucinations, copyright), and AI for science, code & multilinguality & open to bold new ideas! FAQ in 🧵

16

107

509

I’m applying to PhD programs in robot learning this cycle and am actively looking for relevant opportunities in this space. My research focuses on developing generalist robotic manipulation policies that leverage strong priors, by jointly optimizing both the data and the models.

7

20

166

Such impressive work that fills a major gap in the DR research literature! More training algorithms & improvements are needed in this space.

🔥Thrilled to introduce DR Tulu-8B, an open long-form Deep Research model that matches OpenAI DR 💪Yes, just 8B! 🚀 The secret? We present Reinforcement Learning with Evolving Rubrics (RLER) for long-form non-verifiable DR tasks! Our rubrics: - co-evolve with the policy model -

0

0

1

I'm recruiting multiple PhD students for Fall 2026 in Computer Science at @JHUCompSci 🍂 Apply to work on AI for social sciences/human behavior, social NLP, and LLMs for real-world applied domains you're passionate about! Learn more https://t.co/KbTJevMb8J & help spread the word!

15

155

658

Please show up to my EMNLP oral tomorrow at A108, 4:30pm sharp!! We will be looking at a new definition of anthropomorphism in the age of LLMs.

0

1

27

Broadly interested in computational social science, AI safety & evaluation, NLP for social good & applications (in public health, science...)! Happy to chat or grab coffee at the conference! Feel free to DM me :)

1

0

1

The debate over “LLMs as annotators” feels familiar: excitement, backlash, and anxiety about bad science. My take in a new blogpost is that LLMs don’t break measurement; they expose how fragile it already was. https://t.co/6CweDPv5wG

1

8

21

@PrincetonCITP is accepting applications from researchers interested in joining! Applications are now open for the Emerging Scholars Program, as well as the Fellows Program. Deadlines vary; check out the CITP website: https://t.co/1nfuaSgDJF @PrincetonCS @EPrinceton

0

5

5

Apply apply apply!!

📣 Hiring! Two opportunities: 1. Research internship @MSR AI Interaction and Learning (Current PhD students) 2. Multiple positions in my lab @UTiSchool on LLM Personalization/Human-AI Alignment (Prospective PhD students) Details in thread below👇

0

0

2

Computer Science is no longer just about building systems or proving theorems; it's about observation and experiments. In my latest blog post, I argue it's time we had our own "Econometrics," a discipline devoted to empirical rigor.

2

6

24

🌱✨ Life update: I just started my PhD at Princeton University! I will be supervised by @manoelribeiro and affiliated with @PrincetonCITP. It's only been a month, but the energy feels amazing. Very grateful for such a welcoming community. Excited for what’s ahead! 🚀

26

21

610

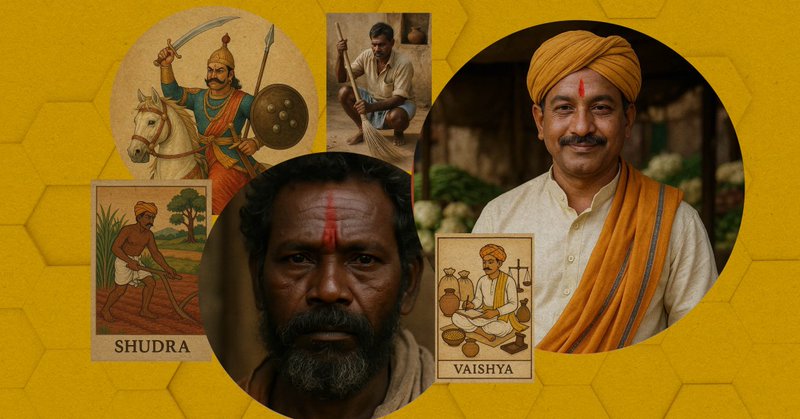

OpenAI is huge in India. Its models are steeped in caste bias. Over months of reporting, @chooi_jeq and I tested the extent of caste bias in @OpenAI's GPT-5 and Sora models. Our investigation found that caste bias has gotten worse over time in OpenAI's models 🧵

technologyreview.com

India is OpenAI’s second-largest market, but ChatGPT and Sora reproduce caste stereotypes that harm millions of people.

25

38

107

Check out @fraslv's thought-provoking article! He is someone you want to follow, as he has a lot of exciting experiments and studies in the realm of AI & persuasion!! 🗣️

"For better or worse, AI’s superhuman persuasiveness isn’t just a theoretical risk: it’s already here. It’s up to the members of society to decide how they want to use it." At @SPSPnews, @PrincetonCITP's @fraslv asks: Would You Win in a Debate Against AI?

0

0

4

Social media feeds today are optimized for engagement, often leading to misalignment between users' intentions and technology use. In a new paper, we introduce Bonsai, a tool to create feeds based on stated preferences, rather than predicted engagement.

1

13

38

✍️ I wrote a short piece for the #SPSPblog about our work on AI persuasion (w/ @manoelribeiro @ricgallotti @cervisiarius). Read it at: https://t.co/hdjbix6Mcb. Thanks @AndyLuttrell5 @PRPietromonaco @SPSPnews for your invitation and feedback during the process!

0

3

8

Lastly, I would like to thank my awesome collaborators @shravika_mittal, Ananya Aatreya (first mentee!), and @navreeetkaur, as well as the faculty mentors who taught me a lot during this project, @tanmit and @munmun10!

1

0

3

🙌 We hope public health, platforms, & researchers build on MythTriage to scale OUD myth detection on video platforms. To support this, we’re releasing everything: 🧠 Models: https://t.co/LmqMcOVRRZ 💻 Code: https://t.co/P93mI6vQoQ 📊 Data:

huggingface.co

1

0

0

🤩 Lastly, we’re excited because this work shows how a decade-old but simple idea (model cascades) scales with LLM advancements to tackle high-stakes health issues like OUD myths. Past work tested model cascades on standard benchmarks (e.g., SQuAD). We validate them in the wild!

1

0

0