Explore tweets tagged as #residual_learning

43% of the speedup in the new NanoGPT record is due to a variant of value residual learning that I developed. Value residual learning (recently proposed by https://t.co/uQhoUuCk7n) allows all blocks in the transformer to access the values computed by the first block. The paper
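
A minimal sketch of the value-residual idea described above, in plain Python. The first block records its value vectors, and every later block mixes its own values with them before attention. The single scalar mixing weight `lam`, the convex-combination form, and all function names are assumptions for illustration. The paper and the NanoGPT variant may parameterize the mix differently, for example with learnable per-layer weights.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Non-causal scaled dot-product attention over lists of vectors."""
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def value_residual_attention(x, Wq, Wk, Wv, v_first, lam):
    """Hypothetical attention block with a value residual.

    v_first is None for the first block, which then returns its own values
    so later blocks can reuse them. Every block attends over
    lam * v_current + (1 - lam) * v_first.
    """
    q = [matvec(Wq, t) for t in x]
    k = [matvec(Wk, t) for t in x]
    v = [matvec(Wv, t) for t in x]
    if v_first is None:
        v_first = v  # the first block records its own values
    mixed = [[lam * a + (1 - lam) * b for a, b in zip(vi, vf)] for vi, vf in zip(v, v_first)]
    return attention(q, k, mixed), v_first
```

In a full model the `v_first` returned by the first block would be threaded through every later block; with `lam = 1` a block reduces to ordinary attention.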

DEEP LEARNING DAY 81 (I think) | An Update 🫡 Covered: > "Rethinking the Inception Architecture for Computer Vision" by Szegedy et al. > Implemented InceptionV3. > Began reading "Deep Residual Learning for Image Recognition" by He et al. > Reviewing Calculus: Definition of

MAC-VO: Metrics-Aware Covariance for Learning-based Stereo Visual Odometry TL;DR: learning-based stereo VO; a learned, metrics-aware matching uncertainty serves two purposes: selecting keypoints and weighting the residuals in pose graph optimization.
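
To make the two uses of uncertainty concrete, here is a hedged sketch that assumes each keypoint match comes with a diagonal covariance (one variance per residual component). Keypoints are ranked by total variance, and each residual is weighted by the inverse covariance, giving the squared Mahalanobis norm used as a pose-graph cost term. The diagonal form and the helper names are assumptions; MAC-VO's actual covariance model and optimizer are not reproduced here.

```python
def select_keypoints(covariances, k):
    """Return indices of the k keypoints with the smallest total variance (trace)."""
    ranked = sorted(range(len(covariances)), key=lambda i: sum(covariances[i]))
    return sorted(ranked[:k])


def weighted_residual_cost(residuals, covariances):
    """Sum over keypoints of r^T Sigma^{-1} r, with Sigma assumed diagonal.

    Uncertain matches (large variance) contribute less to the cost.
    """
    return sum(
        sum(r_j * r_j / var_j for r_j, var_j in zip(r, variances))
        for r, variances in zip(residuals, covariances)
    )
```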

DEEP LEARNING DAY 73 Time - 8 hrs. Worked on: > Finished "Identity Mappings in Deep Residual Networks" > Implemented ResNet V2 > Began "Aggregated Residual Transformations for Deep Neural Networks" > Learned about the Highway gating mechanism via sigmoid activation > Learned about Depthwise
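
The highway gating mentioned above can be sketched as follows, assuming an element-wise (diagonal) layer so the example stays dependency-free; in Highway Networks the transform H and the gate T are full affine layers. The gate T(x) = sigmoid(...) decides how much of the transformed signal H(x) passes through, and 1 - T(x) decides how much of the input x is carried unchanged. Function and parameter names are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def highway(x, h_weight, h_bias, t_weight, t_bias):
    """Element-wise highway layer: y = T(x) * H(x) + (1 - T(x)) * x."""
    out = []
    for xi, hw, hb, tw, tb in zip(x, h_weight, h_bias, t_weight, t_bias):
        h = max(0.0, hw * xi + hb)          # transform H(x): ReLU of an affine map
        t = sigmoid(tw * xi + tb)           # transform gate T(x), in (0, 1)
        out.append(t * h + (1.0 - t) * xi)  # carry gate is 1 - T(x)
    return out
```

A strongly negative gate bias makes the layer pass x through almost unchanged. A ResNet shortcut drops the gate entirely and simply adds: y = H(x) + x.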

DEEP LEARNING DAY 72 (got yesterday's count wrong) Time - 8.6 hrs. > Finished reading "Deep Residual Learning for Image Recognition" > Derived Backpropagation through Residual Connections. > Implemented ResNet > Began reading "Identity Mappings in Deep Residual Networks" >
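
A small numerical check of the derivative mentioned above. For y = x + F(x), the chain rule gives dy/dx = 1 + F'(x). Across a stack of blocks the gradient is therefore a product of (1 + F'_l) terms, and the identity path keeps it from vanishing even when every F'_l is small. The scalar branch F(x) = tanh(w * x) is an assumed toy choice, not taken from the post.

```python
import math


def residual_block(x, w):
    """y = x + F(x) with a toy residual branch F(x) = tanh(w * x)."""
    return x + math.tanh(w * x)


def residual_block_grad(x, w):
    """dy/dx = 1 + F'(x) = 1 + w * (1 - tanh(w * x) ** 2)."""
    t = math.tanh(w * x)
    return 1.0 + w * (1.0 - t * t)


def stack_forward(x, weights):
    for w in weights:
        x = residual_block(x, w)
    return x


def stack_grad(x, weights):
    """Chain rule through the stack: product of per-block (1 + F'_l(x_l))."""
    grad = 1.0
    for w in weights:
        grad *= residual_block_grad(x, w)  # local derivative at this block's input
        x = residual_block(x, w)           # move on to the next block's input
    return grad
```

With all weights at zero every F'_l vanishes and the gradient is exactly 1, carried entirely by the identity path.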

📢📢📢 Excited to release ManipTrans: Efficient Dexterous Bimanual Manipulation Transfer via Residual Learning (CVPR25). 🤏🤙✌️With ManipTrans, we can transfer dexterous manipulation skills into robotic hands in simulation and deploy them on a real robot, using a residual policy

👨🔬 Kaiming He – The Architect of Residual Networks ✅ Co-author of ResNet (2016), introducing deep residual learning and revolutionizing computer vision ✅ Developed Faster R-CNN and Mask R-CNN, setting benchmarks for detection and segmentation ✅ Pioneered Focal Loss and Feature

DEEP LEARNING DAY 74 🧵 Worked on: > Finished "Aggregated Residual Transformations for Deep Neural Networks" > Implemented ResNeXt > Wrote a Blog Post on ∂'s of Residual Connections > Final Calc I Review: Chain Rule, Implicit Differentiation, Higher Derivatives, Logarithmic
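
ResNeXt's aggregated transformation can be written as y = x + Σᵢ Tᵢ(x), where C parallel branches (the cardinality) share the same topology. Below is a minimal sketch that assumes each branch is a tiny bottleneck (project down, ReLU, project back up) acting on a plain vector. The real blocks use convolutions, implemented efficiently as grouped convolutions, and `make_branch` is a hypothetical helper.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def make_branch(W_down, W_up):
    """Hypothetical bottleneck branch T_i: down-project, ReLU, up-project."""
    def branch(x):
        hidden = [max(0.0, h) for h in matvec(W_down, x)]
        return matvec(W_up, hidden)
    return branch


def resnext_block(x, branches):
    """Sum C same-shaped branch outputs, then add the identity shortcut."""
    total = [0.0] * len(x)
    for branch in branches:
        total = [t + b for t, b in zip(total, branch(x))]
    return [a + t for a, t in zip(x, total)]
```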

🔬 Excited to share the publication "Lightweight Super-Resolution Techniques in Medical Imaging: Bridging Quality and Computational Efficiency"👉 https://t.co/ZpCcgj0WR6

#medical_imaging #super_resolution #lightweight_model #residual_learning

"Value residual learning" was accepted to ACL2025 main conference #ACL2025 ! In the camera-ready version, we: 1) add results for the 1.6B models trained on 200B tokens, and 2) introduce Learnable ResFormer Plus, which significantly outperforms Learnable ResFormer by using

Computer Vision update: ° Studied ConvNets in detail with the help of the CS23 resource ° Understood some of the advanced CNN architectures ° Went further to learn how the VGG and ResNet architectures work ° Read the "Deep Residual Learning for Image Recognition" paper

Dive into ResNet, the neural network architecture behind modern deep learning. This lecture covers: 1️⃣ First and second-order logic in neural networks 2️⃣ Skip connections and their mechanism 3️⃣ Residual learning - learning what to add vs complete transformations 4️⃣ Solving
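
The "what to add vs complete transformation" distinction fits in a few lines. A plain layer must learn the full mapping H(x), while a residual layer learns only a correction F(x) and outputs x + F(x). With all weights at zero, the residual layer already computes the identity, whereas the plain layer outputs zeros. This is a simplified single-layer sketch with illustrative names; the original ResNet block uses two weight layers and applies ReLU after the addition.

```python
def linear(W, x):
    """Multiply weight matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    return [max(0.0, a) for a in v]


def plain_layer(x, W):
    """Learns the whole mapping: H(x) = relu(W x)."""
    return relu(linear(W, x))


def residual_layer(x, W):
    """Learns only what to add: x + F(x), with F(x) = relu(W x)."""
    return [a + b for a, b in zip(x, relu(linear(W, x)))]
```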

DEEP LEARNING DAY 77 > Finished Implementing DenseNet > Review on Depthwise Separable Convolutions > Wrote Blog Post on Dense Connections vs Residual Learning > Began reading "MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications" > Calculus Review:
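
The difference between the two connection types comes down to how features are merged. Residual learning adds the branch output to its input, so the width stays fixed. Dense connectivity concatenates every earlier feature map, so a block that starts with k0 channels and has L layers of growth rate k ends with k0 + L·k channels. This is a dependency-free sketch on flat vectors; any layer callables passed in are hypothetical stand-ins for the BN-ReLU-conv layers of the papers.

```python
def residual_merge(x, fx):
    """ResNet-style merge: element-wise addition, width unchanged."""
    return [a + b for a, b in zip(x, fx)]


def concat(features):
    """DenseNet-style merge: concatenate all feature vectors."""
    out = []
    for f in features:
        out.extend(f)
    return out


def dense_block(x, layers):
    """Each layer sees the concatenation of the input and all previous layer outputs."""
    features = [x]
    for layer in layers:
        features.append(layer(concat(features)))
    return concat(features)
```

For example, with k0 = 4 and three layers that each emit 2 values, `dense_block` returns 4 + 3 × 2 = 10 values, and the third layer's input already has 8.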

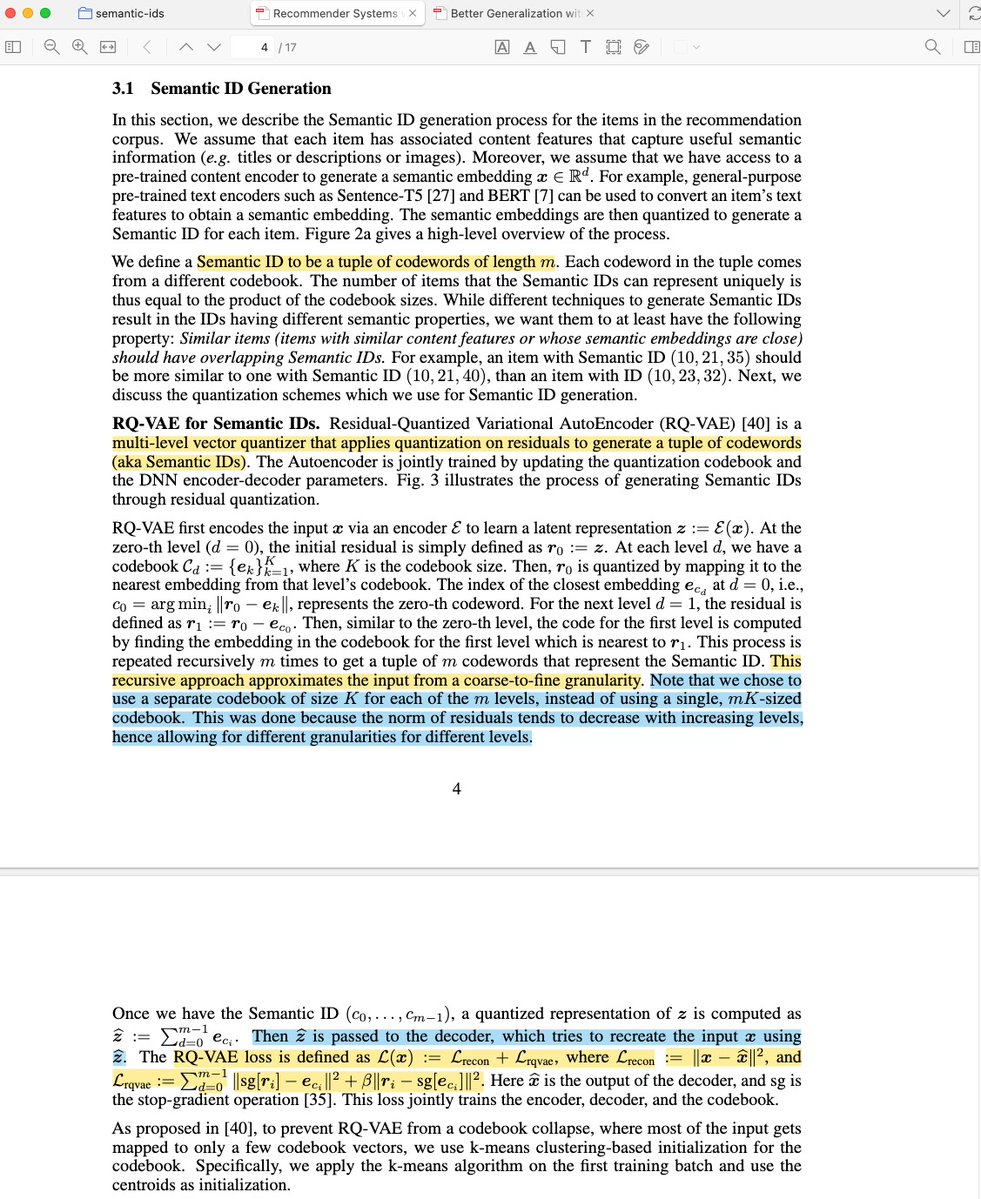

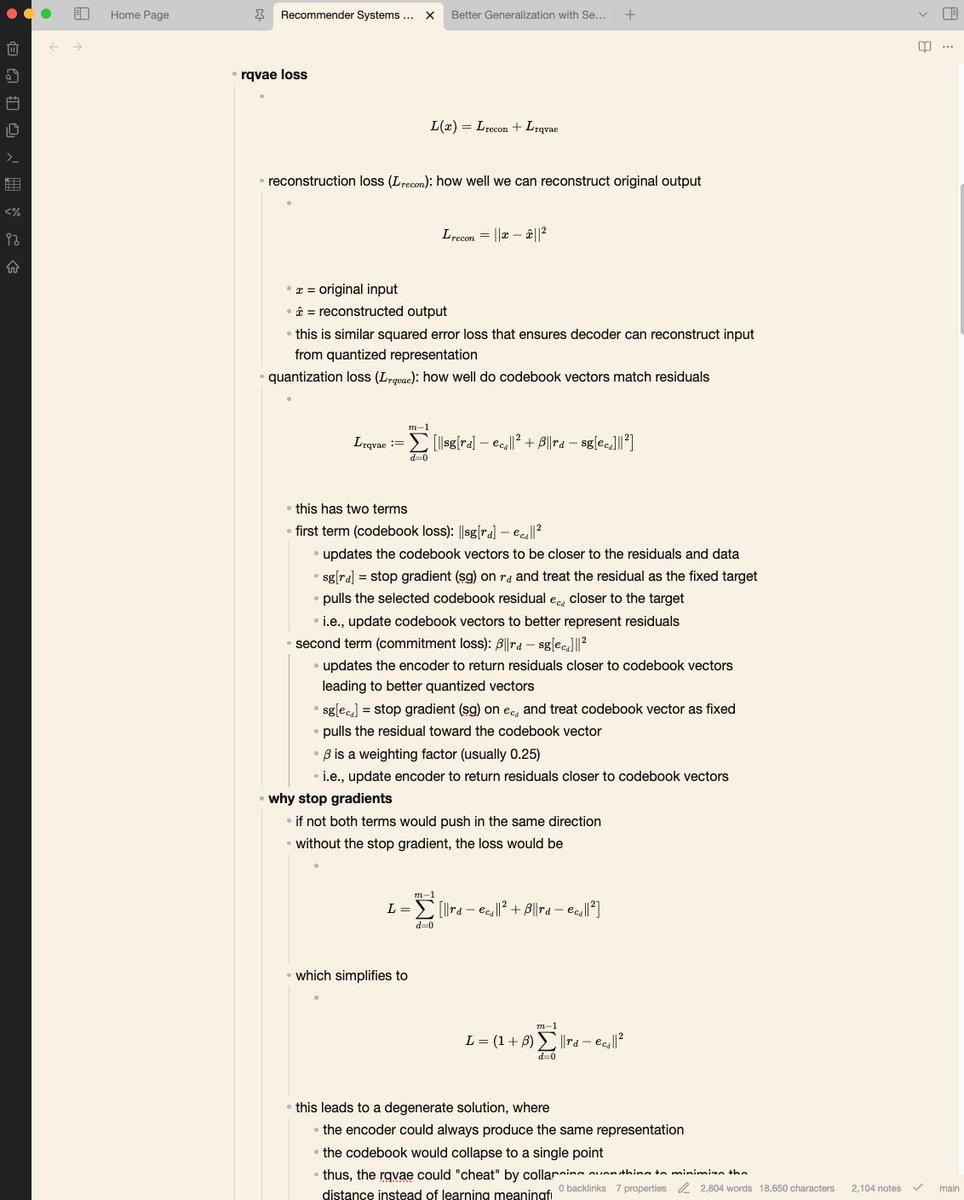

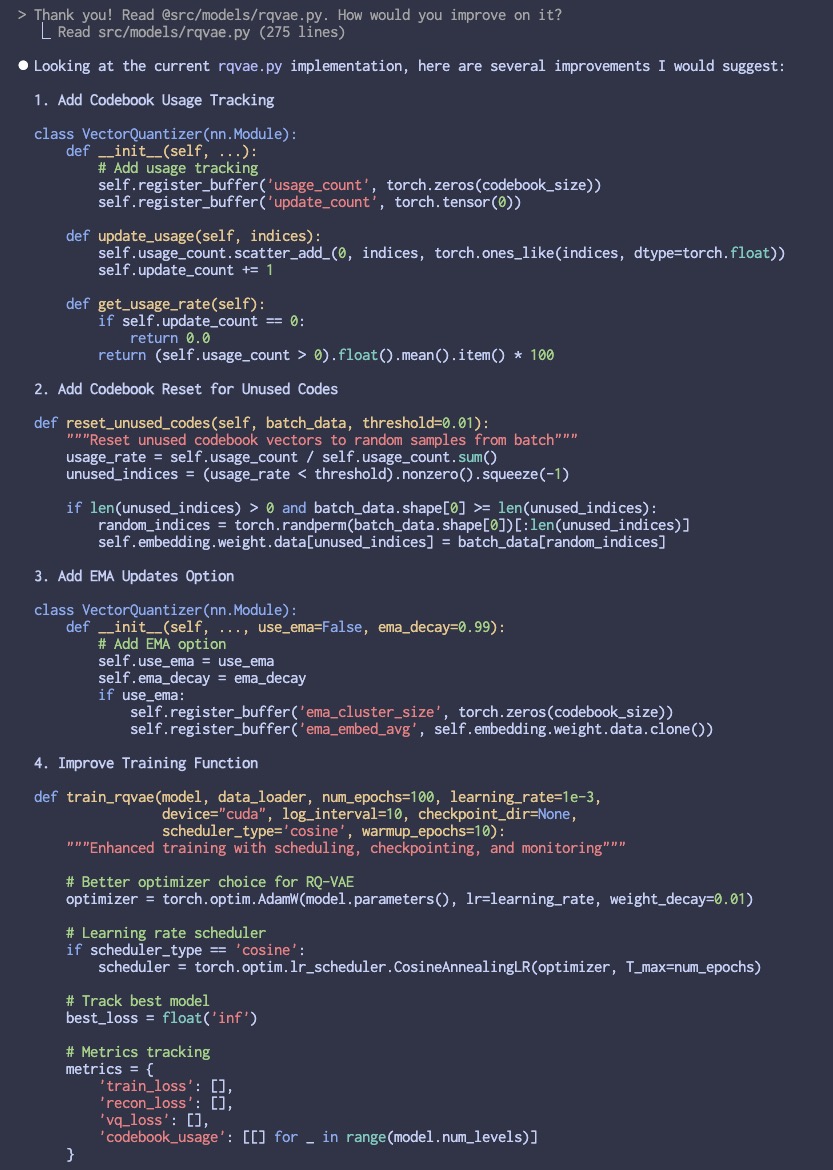

tools for learning and building • zotero: papers, annotations, citations • obsidian: notes, writing, connecting the dots • claude: thinking partner, improve understanding • claude code: implementation, code review (images from work on semantic IDs and residual-quantized

ManipTrans is out on Hugging Face Efficient Dexterous Bimanual Manipulation Transfer via Residual Learning

DEEP LEARNING DAY 79. Worked on: > Reading "MobileNetV2: Inverted Residuals and Linear Bottlenecks" > Inverted Residual Blocks > 1x1 Convolutions as Dimensionality Reduction > Understanding the role of ReLU6 > Began MobileNetV2 Implementation. Shorter day. Long tomorrow.
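
Three of the ideas listed above, in a small sketch. ReLU6 clamps activations to [0, 6]. A 1x1 convolution applies the same channel-mixing matrix at every pixel, so it can reduce or expand the channel count without touching spatial layout. An inverted residual block goes narrow, then wide (expansion factor t, 6 by default in MobileNetV2), then back to narrow through a linear projection, and uses the shortcut only when the stride is 1 and the input and output channels match. The depthwise 3x3 stage itself is omitted, and the function names are illustrative.

```python
def relu6(x):
    """ReLU6: min(max(x, 0), 6)."""
    return min(max(x, 0.0), 6.0)


def conv1x1(feature_map, W):
    """1x1 convolution on an H x W x C_in map stored as nested lists.

    W has shape C_out x C_in and is applied independently at every pixel,
    so only the channel dimension changes.
    """
    return [
        [[sum(w * c for w, c in zip(row, pixel)) for row in W] for pixel in line]
        for line in feature_map
    ]


def inverted_residual_plan(c_in, c_out, expansion=6, stride=1):
    """Channel plan of an inverted residual block.

    Expand c_in -> c_in * expansion (1x1 conv + ReLU6), depthwise 3x3 (+ ReLU6),
    then project linearly to c_out with no activation. The skip connection is
    added only when the block preserves the input shape.
    """
    hidden = c_in * expansion
    use_residual = stride == 1 and c_in == c_out
    return hidden, use_residual
```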

ASAP learns diverse, agile, whole-body humanoid motions via learning a residual action model from the real world to align sim and real physics, enabling motions that were previously difficult to achieve. It has two stages: Stage 1 pretrains a phase-based motion tracking policy

🚀 Can we make a humanoid move like Cristiano Ronaldo, LeBron James and Kobe Bryant? YES! 🤖 Introducing ASAP: Aligning Simulation and Real-World Physics for Learning Agile Humanoid Whole-Body Skills Website: https://t.co/XQga7tIfdw Code: https://t.co/NpEeJtVxpp
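
A toy illustration of the residual (delta) action idea, under loudly stated assumptions. Consider a 1-D system whose simulator applies actions at full strength (next = s + a), while the "real" system is weaker (next = s + 0.8·a). A linear delta action model δ(a) = c·a is fitted to real transitions by least squares, so that stepping the simulator with a + δ(a) reproduces the real outcomes. Everything here (the dynamics, the linear model, the function names) is hypothetical; ASAP learns its residual action model from real-world data on a humanoid, not from a closed-form fit.

```python
def sim_step(state, action):
    """Hypothetical simulator dynamics: the action is applied at full strength."""
    return state + action


def fit_delta_coefficient(transitions):
    """Least-squares fit of c in delta(a) = c * a.

    transitions: (state, action, real_next_state) tuples. Minimizes
    sum((sim_step(s, a + c * a) - s_next) ** 2). Because this toy simulator
    is additive in the action, the minimizer has the closed form
    c = sum(a * (s_next - sim_step(s, a))) / sum(a * a).
    """
    num = sum(a * (s_next - sim_step(s, a)) for s, a, s_next in transitions)
    den = sum(a * a for _, a, _ in transitions)
    return num / den


def corrected_sim_step(state, action, c):
    """Simulator step with the residual action correction applied."""
    return sim_step(state, action + c * action)
```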

Daily #AI / #MachineLearning Study Log Entry: 102 - CNN Architectures -- LeNet-5 -- AlexNet -- GoogLeNet --- Inception module -- VGGNet -- ResNet --- Skip Connection / Shortcut Connection --- Residual Learning Key takeaways: Today, I tried implementing some of the famous CNN

Residual connections aren’t just skip links. They’re discretized differential equations: This one equation changed deep learning forever. Here’s why ResNets work, and what most people miss: [1/5]
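
The ODE reading can be made concrete. A residual update x ← x + h·f(x) is one forward Euler step for dx/dt = f(x), so a stack of N blocks with step h = T/N integrates the ODE from t = 0 to T. This sketch assumes a single shared f for all blocks; in a real ResNet each block has its own weights (a time-dependent f) and h is absorbed into them. With f(x) = -x, the stack's output approaches the exact solution x0·e^(-T) as N grows.

```python
import math


def residual_stack(x0, f, num_blocks, total_time=1.0):
    """Apply num_blocks residual updates x <- x + h * f(x), with h = total_time / num_blocks.

    This is exactly forward Euler integration of dx/dt = f(x) from t = 0 to total_time.
    """
    h = total_time / num_blocks
    x = x0
    for _ in range(num_blocks):
        x = x + h * f(x)
    return x


def decay(x):
    return -x


exact = math.exp(-1.0)  # solution of dx/dt = -x at t = 1 with x(0) = 1
for n in (10, 100, 1000):
    print(n, abs(residual_stack(1.0, decay, n) - exact))
```

The printed error shrinks roughly tenfold for each tenfold increase in the number of blocks, as expected for a first-order method.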
