Explore tweets tagged as #mLSTM

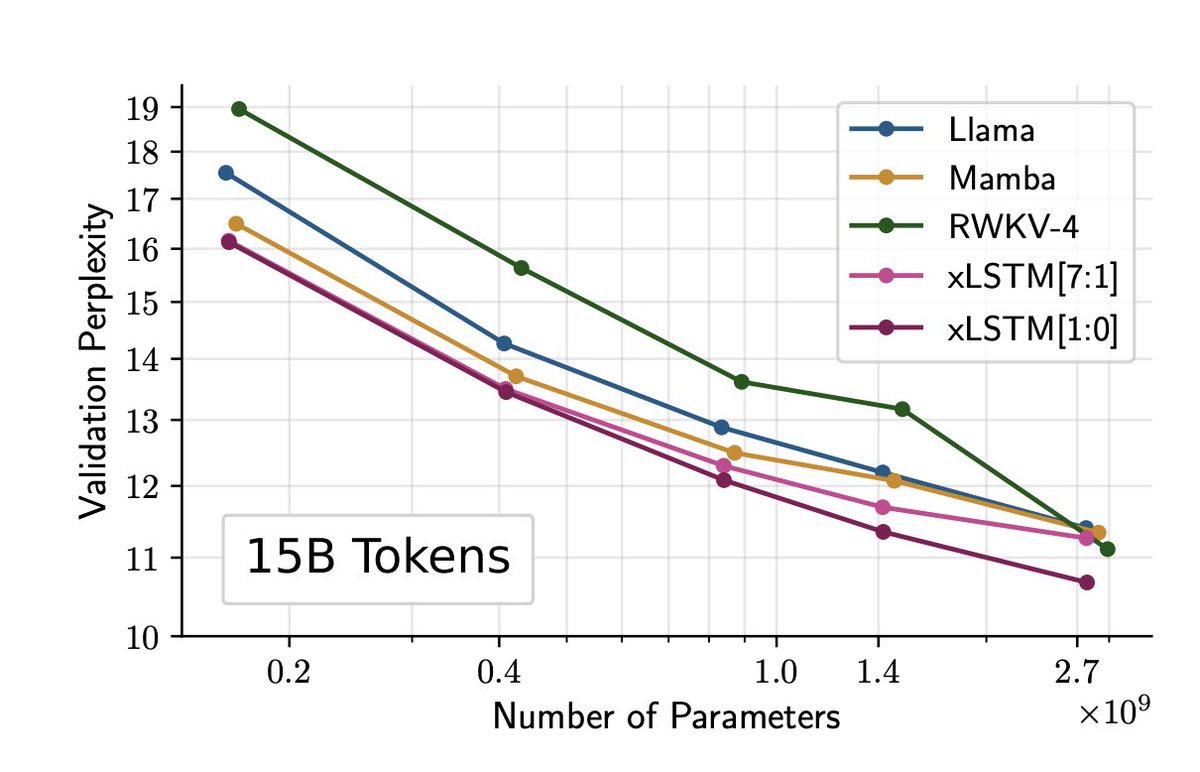

xLSTM: Extended Long Short-Term Memory. abs: Leveraging the latest techniques from modern LLMs, mitigating known limitations of LSTMs (introducing sLSTM and mLSTM memory cells that form the xLSTM blocks), and scaling up results in a highly competitive

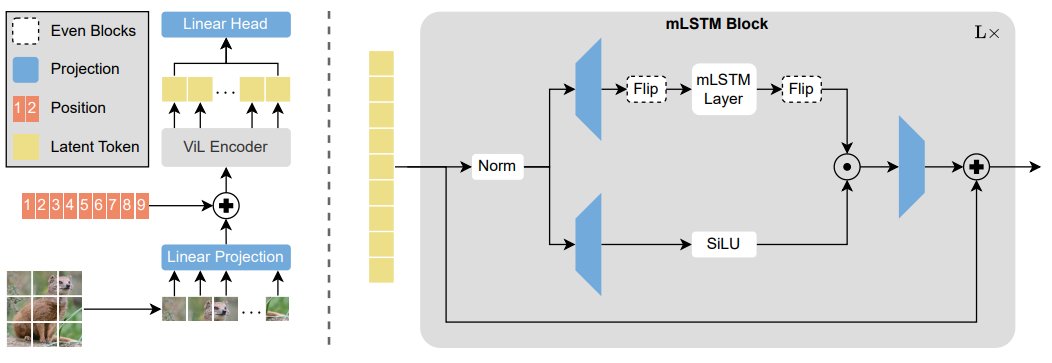

Excited to introduce Vision-LSTM (ViL): a new backbone for vision built on the xLSTM architecture. ViL creates patch tokens from an image and processes them with alternating bi-directional mLSTM blocks, where odd blocks process the sequence from the opposite direction. 🧵

Introducing Vision-LSTM - making xLSTM read images 🧠 It works... pretty, pretty well 🚀🚀 But convince yourself :) We are happy to share code already! 📜: 🖥️: All credit to my stellar PhD @benediktalkin
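The alternating-direction idea described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: `patchify`, `causal_mean_block`, and `alternating_backbone` are hypothetical names, the block is a causal running mean standing in for a real mLSTM block, and "odd" is read as odd 0-based block index. It is not the ViL implementation.

```python
import numpy as np

def patchify(image, patch):
    """Split an (H, W, C) image into flattened, non-overlapping patch tokens."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    rows, cols = h // patch, w // patch
    x = image.reshape(rows, patch, cols, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, c)
    return x.reshape(rows * cols, patch * patch * c)

def causal_mean_block(tokens):
    """Hypothetical stand-in for an mLSTM block (assumption, not the real cell):
    each position sees only itself and earlier positions, plus a residual."""
    running_sum = np.cumsum(tokens, axis=0)
    counts = np.arange(1, len(tokens) + 1)[:, None]
    return tokens + running_sum / counts

def alternating_backbone(tokens, num_blocks):
    """Even blocks scan first-to-last; odd blocks scan last-to-first."""
    for i in range(num_blocks):
        if i % 2 == 1:
            # Flip, run the causal block, flip back to the original order.
            tokens = causal_mean_block(tokens[::-1])[::-1]
        else:
            tokens = causal_mean_block(tokens)
    return tokens

image = np.arange(16, dtype=float).reshape(4, 4, 1)
tokens = patchify(image, patch=2)        # 4 tokens, each of dimension 4
features = alternating_backbone(tokens, num_blocks=2)
```

Flipping the sequence around an otherwise causal block is one simple way to let later patches influence earlier ones without changing the block itself.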

[CV] Vision-LSTM: xLSTM as Generic Vision Backbone. B Alkin, M Beck, K Pöppel, S Hochreiter, J Brandstetter [ELLIS Unit Linz] (2024). Vision-LSTM (ViL) is an adaptation of the xLSTM architecture to computer vision tasks. It uses alternating mLSTM blocks

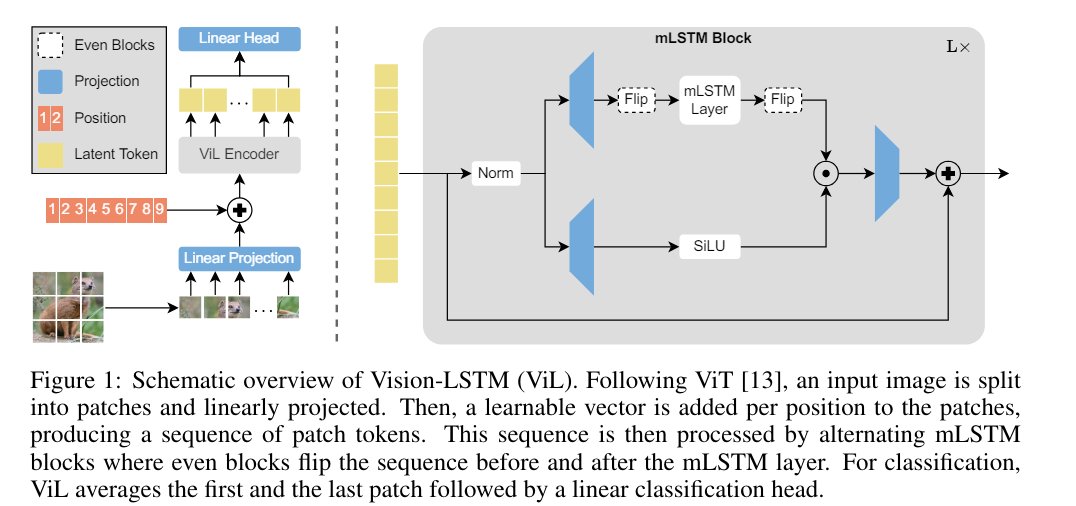

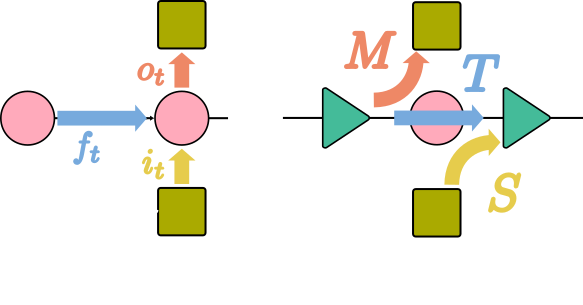

6/ They did a lot of complicated evals, but this one I felt was the clearest. They trained several models (Transformer LLM, RWKV, and xLSTM) on 15B tokens of text and measured the perplexity (how good each model is at predicting the next token). The xLSTM[1:0] (1 mLSTM, 0 sLSTM
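For readers unfamiliar with the metric, here is a minimal sketch of how perplexity follows from per-token log-probabilities. The `perplexity` helper and the toy inputs are illustrative assumptions, not taken from the paper's evaluation code.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative natural-log probability of the true tokens)."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

# A model that always assigns probability 1/4 to the correct next token
# is as uncertain as a uniform guess among 4 options.
uniform_guess = [math.log(0.25)] * 10
ppl = perplexity(uniform_guess)
```

Lower is better: a perplexity of 4 means the model is, on average, as unsure as if it were choosing uniformly among four tokens.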

7/ A very important thing to note is that the xLSTM architecture is composed of a flexible ratio of mLSTM and sLSTM blocks. mLSTM is the matrix-memory, parallelizable LSTM (like Transformers, it can operate over all tokens at once). sLSTM is the LSTM that is NOT

No code/weights shared yet for the #xLSTM, so I tried implementing mLSTM myself! Colab notebook 👇👇 🔗 in comments. LMK if you want the sLSTM as well.

🔔 The guy who invented the LSTM (Sepp Hochreiter) just dropped a new LLM architecture! The major component is a new parallelizable LSTM. ⚠️ One of the major weaknesses of prior LSTMs was their sequential nature (tokens can't be processed all at once). Everything we know about the xLSTM: 👇👇🧵

🤷♂️ Another architecture that Mike isn't excited about 🤷♂️. An upgrade of the LSTM called xLSTM has come out, and there is a lot of excitement about it. I don't understand all the excitement: they combined two ideas from 5-6 years ago, sLSTM and mLSTM, in a ResNet-like form with a bit of gating between them, and supposedly got something better. And there is also another memory update mechanism

Another productive week for the win! Stay cozy this weekend, Southern California. Happy Friday from the MLS™ 🌻

Love is in the air ❤️ May it be shared with all those dearest to your heart! Happy Valentine's Day from The MLS™.

xLSTM explores the limits of LMs by scaling LSTMs to billions of params, addressing their limitations, and integrating modern techniques:
- exponential gating w/ stabilization
- modified memory structures

yielding:
- sLSTM: scalar mem
- mLSTM: parallelizable matrix mem

Perf & scalability:

I am so excited that xLSTM is out. LSTM is close to my heart - for more than 30 years now. With xLSTM we close the gap to existing state-of-the-art LLMs. With NXAI we have started to build our own European LLMs. I am very proud of my team.
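To make "exponential gating w/ stabilization" and "matrix mem" concrete, here is a rough single-step sketch of an mLSTM-style cell in NumPy. It follows the update rules as I recall them from the xLSTM paper, so treat the details as assumptions: the function name `mlstm_step`, the argument layout, the choice of an exponential (rather than sigmoid) forget gate, and the lower bound of 1 on the normalizer are all illustrative, and this recurrent form is not the parallel training formulation.

```python
import numpy as np

def mlstm_step(C, n, m, q, k, v, i_pre, f_pre, o):
    """One recurrent step of an mLSTM-style cell (illustrative sketch).

    C: (d_v, d_k) matrix memory, n: (d_k,) normalizer, m: scalar stabilizer.
    i_pre, f_pre: scalar gate pre-activations; gates are exp(pre-activation).
    """
    k = k / np.sqrt(k.shape[0])          # scaled key (assumed convention)
    log_f = f_pre                        # exponential forget gate: log f = f_pre
    # Stabilizer: track the running max in log space so exp() cannot overflow.
    m_new = max(log_f + m, i_pre)
    i_gate = np.exp(i_pre - m_new)
    f_gate = np.exp(log_f + m - m_new)
    # Matrix memory stores value/key outer products; n accumulates keys.
    C_new = f_gate * C + i_gate * np.outer(v, k)
    n_new = f_gate * n + i_gate * k
    # Normalized readout with the query, gated by the output gate o.
    denom = max(abs(float(n_new @ q)), 1.0)
    h = o * (C_new @ q) / denom
    return C_new, n_new, m_new, h

d = 2
C0, n0, m0 = np.zeros((d, d)), np.zeros(d), 0.0
q = np.array([1.0, 0.0])
k = np.array([np.sqrt(2.0), 0.0])
v = np.array([2.0, 3.0])
C1, n1, m1, h1 = mlstm_step(C0, n0, m0, q, k, v, i_pre=0.0, f_pre=0.0, o=np.ones(d))

# Very large pre-activations stay finite thanks to the stabilizer.
_, _, m_big, h_big = mlstm_step(C1, n1, m1, q, k, v, i_pre=1000.0, f_pre=1000.0, o=np.ones(d))
```

Without the `m` term, `np.exp(1000.0)` would overflow to infinity; subtracting the running maximum keeps both gates in a safe range while leaving their ratio unchanged.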

This brisk Southern California weather is calling for a nice hike to admire one of our many breathtaking views. 😍 Happy Friday from The MLS™ ✨.

Join the discussion with CEO of VestaPlus™ and The MLS™, Annie Ives! Discover strategies to bridge the gap between the role of MLS and its perception among real estate professionals, ensuring a clear understanding of the impact MLS organizations have on the RE industry.

Congratulations to Christie Thomas on her new position as 2024 President of The MLS™! 🎉 We are excited and looking forward to a fantastic year under Christie's leadership and guidance. ✨

We are excited to have The MLS™ Executive team represented at this year's Clareity24 Workshop in Scottsdale, Arizona! #themls #realestate

🚀 xLSTM: A Leap in Long Short-Term Memory Technology 🚀

1. Introducing xLSTM: Enhanced Gating and Memory Structures 🧠
   - xLSTM revolutionizes LSTM with exponential gating and innovative memory structures.
   - Features include scalar memory in sLSTM and matrix memory in mLSTM

Tiled Flash Linear Attention: More Efficient Linear RNN and xLSTM Kernels. Tiled Flash Linear Attention (TFLA) optimizes linear RNNs by enabling larger chunk sizes, reducing memory and IO costs for long-context training. Applied to xLSTM and mLSTM, it introduces a faster variant
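The tweet does not spell out how TFLA tiles its kernels, so the sketch below shows only the generic chunkwise idea that such kernels build on: plain, ungated linear attention computed chunk by chunk, checked against the token-by-token recurrence. The function names and the NumPy formulation are illustrative assumptions, and this is not the TFLA algorithm or an mLSTM kernel.

```python
import numpy as np

def recurrent_linear_attention(Q, K, V):
    """Token-by-token reference: S_t = S_{t-1} + v_t k_t^T, out_t = S_t q_t."""
    S = np.zeros((V.shape[1], Q.shape[1]))
    out = np.zeros_like(V)
    for t in range(Q.shape[0]):
        S = S + np.outer(V[t], K[t])
        out[t] = S @ Q[t]
    return out

def chunkwise_linear_attention(Q, K, V, chunk):
    """Same result, but the state is only materialized once per chunk."""
    S = np.zeros((V.shape[1], Q.shape[1]))
    out = np.zeros_like(V)
    for start in range(0, Q.shape[0], chunk):
        q = Q[start:start + chunk]
        k = K[start:start + chunk]
        v = V[start:start + chunk]
        inter = q @ S.T                  # contribution of all earlier chunks
        scores = np.tril(q @ k.T)        # causal mask inside the chunk
        intra = scores @ v               # contribution of the current chunk
        out[start:start + chunk] = inter + intra
        S = S + v.T @ k                  # fold this chunk into the state
    return out

rng = np.random.default_rng(0)
Q = rng.normal(size=(10, 4))
K = rng.normal(size=(10, 4))
V = rng.normal(size=(10, 3))
reference = recurrent_linear_attention(Q, K, V)
chunked = chunkwise_linear_attention(Q, K, V, chunk=4)
```

Larger chunks mean fewer stored intermediate states but a bigger quadratic block per chunk, which is the trade-off the tweet alludes to when it mentions larger chunk sizes and lower memory and IO costs.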
