Explore tweets tagged as #hyperparameter

Read the #HighlyAccessedArticle "Structure Learning and Hyperparameter Optimization Using an Automated Machine Learning (AutoML) Pipeline". See more details at: #Bayesianoptimization #hyperparameteroptimization @ComSciMath_Mdpi

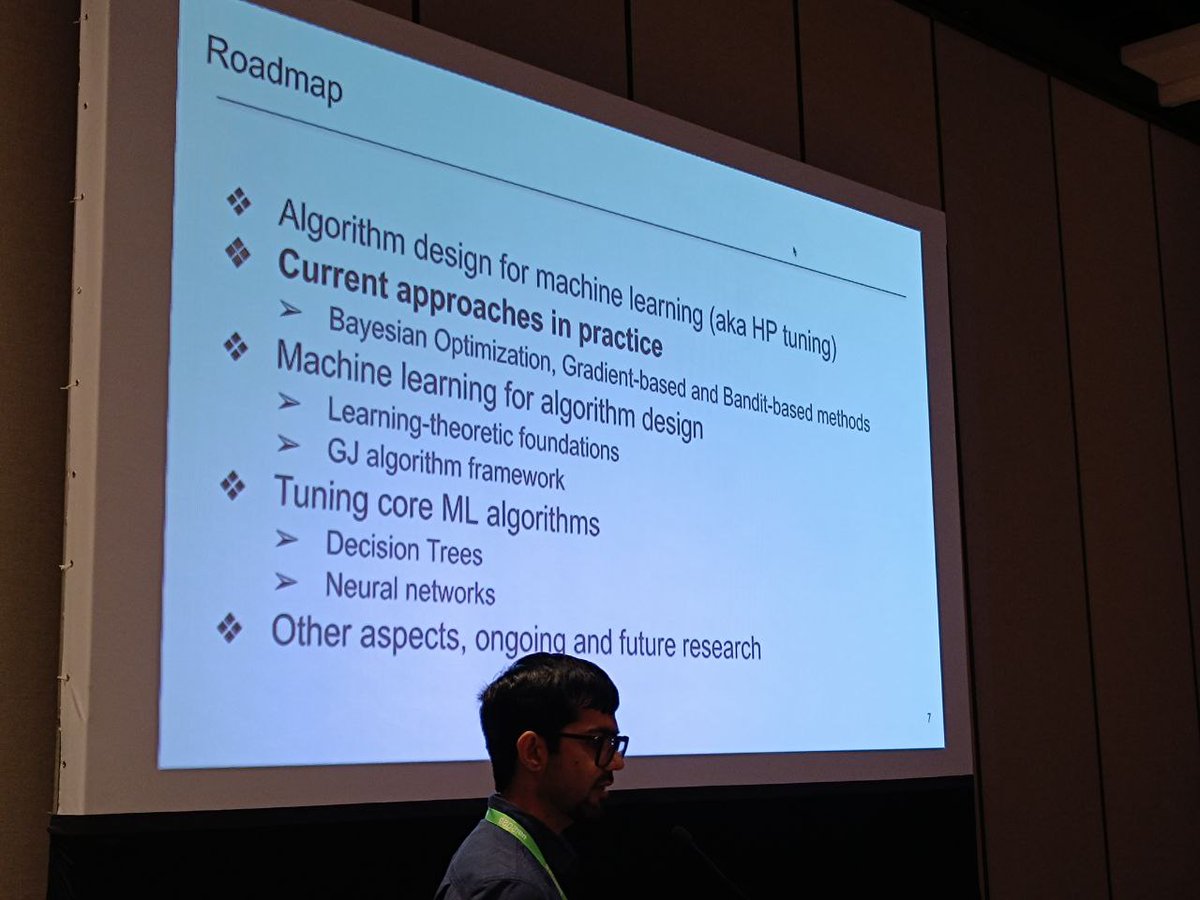

…and "Hyperparameter Optimization and Algorithm Selection: Practical Techniques, Theory, and New Frontiers" by Dravyansh Sharma (TTIC)

Time to educate our crowds on "Counterfactuals in Minds and Machines" by @tobigerstenberg (Stanford), @autreche (MPI SS) and @stratis_ (MPI SS)

Here are some of the most widely used techniques for hyperparameter optimization. The table below highlights the pros and cons of each strategy, helping you understand when to use each one. Hope you find it helpful! 😉 #machinelearning #datascience #ML #MLModels

Hyperparameter Tuning for Deep Learning. #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

🎉 WoS #HighlyCited 🔖 TPTM-HANN-GA: A Novel #Hyperparameter_Optimization Framework Integrating the #Taguchi_Method, an #Artificial_Neural_Network, and a #Genetic_Algorithm for the Precise Prediction of #Cardiovascular_Disease_Risk. 👥 by Chia-Ming Lin et al. 🔗

🤔 Not sure which hyperparameter search method to use?

- Random Search
- Bayesian Search
- SMAC
- TPE (Tree-structured Parzen Estimator)

Watch the video for a quick rundown 👇 #machinelearning #smac #mlmodels #hyperparameter #tpe #randomsearch #bayesiansearch

Grid Search 🆚 Random Search: Two powerful methods for hyperparameter tuning in Machine Learning. Here's a chart for a side-by-side comparison of their pros and cons. #DataScience #AI #ML #Machinelearning #hyperparameter #gridsearch #randomsearch
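To make the grid-vs-random trade-off concrete, here is a minimal pure-Python sketch. The objective `validation_score` is a hypothetical toy function standing in for "train a model with these hyperparameters and measure its validation score"; the grid values and sampling ranges are likewise illustrative assumptions, not taken from the chart. With the same budget of 12 evaluations, grid search only ever tries 4 distinct learning rates, while random search tries 12 distinct values of each hyperparameter.

```python
import itertools
import math
import random


def validation_score(lr: float, dropout: float) -> float:
    # Hypothetical toy objective (higher is better), peaking at lr=0.01, dropout=0.3.
    # In practice this would train a model and return its validation metric.
    return -((math.log10(lr) + 2) ** 2) - (dropout - 0.3) ** 2


def grid_search(lr_values, dropout_values):
    """Evaluate every combination in the Cartesian product of the value lists."""
    best = None
    for lr, dropout in itertools.product(lr_values, dropout_values):
        score = validation_score(lr, dropout)
        if best is None or score > best[0]:
            best = (score, lr, dropout)
    return best


def random_search(n_trials, seed=0):
    """Sample each hyperparameter independently; lr is sampled log-uniformly."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-4, -1)      # log-uniform in [1e-4, 1e-1]
        dropout = rng.uniform(0.0, 0.5)     # uniform in [0, 0.5]
        score = validation_score(lr, dropout)
        if best is None or score > best[0]:
            best = (score, lr, dropout)
    return best


if __name__ == "__main__":
    lr_grid = [1e-4, 1e-3, 1e-2, 1e-1]
    dropout_grid = [0.0, 0.25, 0.5]
    budget = len(lr_grid) * len(dropout_grid)  # 12 evaluations for both methods
    print("grid:  ", grid_search(lr_grid, dropout_grid))
    print("random:", random_search(budget))
```

Grid search is exhaustive and reproducible but its cost multiplies with every added hyperparameter; random search keeps a fixed budget and covers each individual dimension more densely, which helps when only a few hyperparameters really matter.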

Day 48 of #100DaysOfCode
-> Today I learnt about hyperparameter tuning in Keras.
-> Then I practiced it on the PIMA Indians Diabetes dataset.
-> Got an accuracy of 0.77, even though I didn't preprocess the data.
