Explore tweets tagged as #OverOptimization

The antifragility of a system comes from the mortality of its components; immortality blocks evolution. Work for the immortality of the collective. [On top of my disgust for non-stoical neurotic overoptimization.] h/t @Gregoresate

@bryan_johnson Looks like you didn't understand much from Skin in the Game. It states that we are not supposed to be immortal; only our genes are. This is aside from, in my general work, the contempt, perhaps even disgust, I have for your brand of non-stoical neurotic overoptimization.

Come chat with us about our AI Safety papers at #NeurIPS2024!
12/11: 💥 Catastrophic Goodhart: overoptimization in RLHF
12/12: ⚙️ Analysing the Generalisation and Reliability of Steering Vectors
12/12: 🌀 Hypothesis Testing the Circuit Hypothesis in LLMs
12/13: 🔬 InterpBench
🧵👇

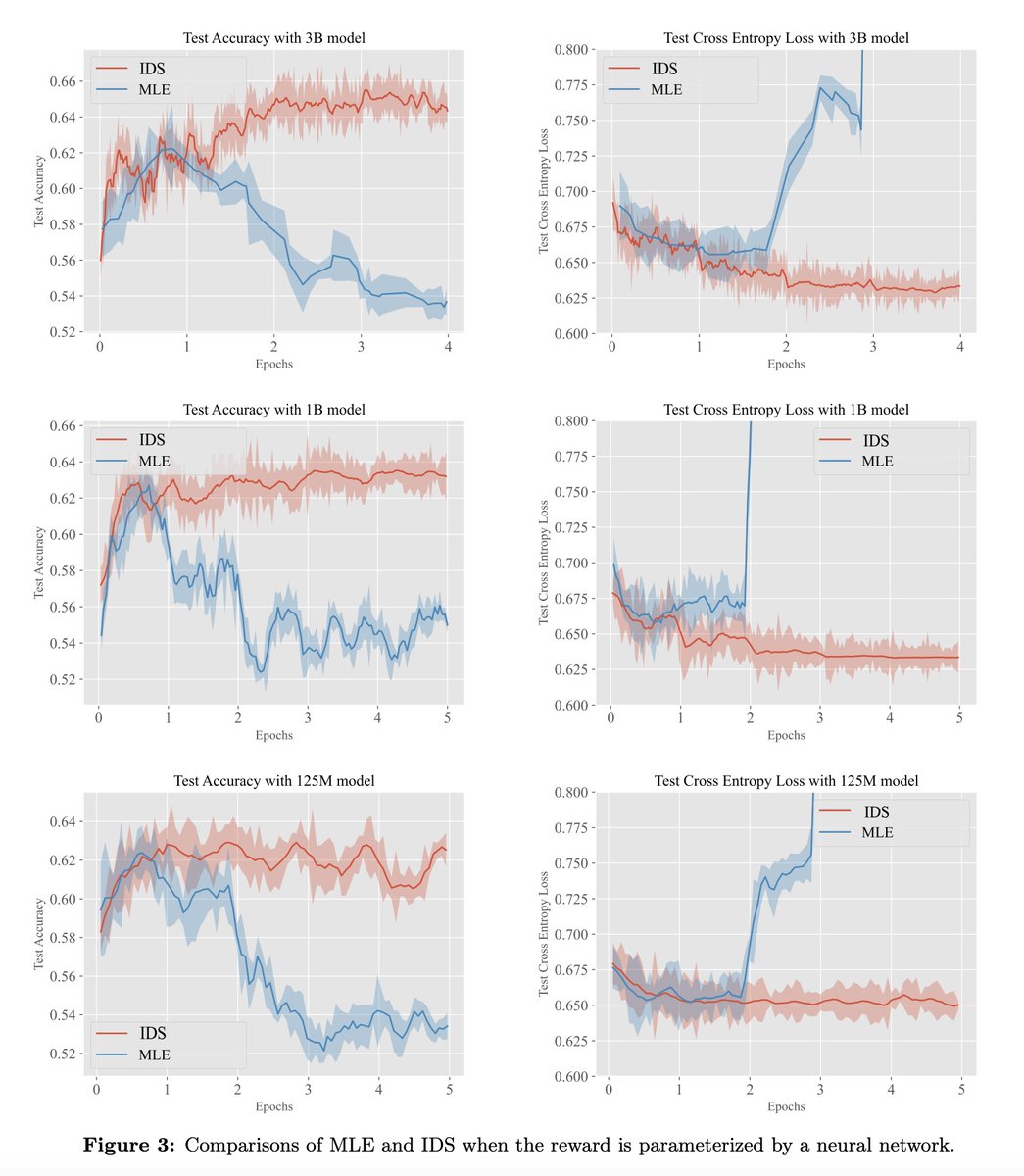

Our cross-university collaborative work on "Scaling Laws for Reward Model Overoptimization in Direct Alignment Algorithms" has been accepted at @NeurIPSConf!

After the Llama 3.1 release and ICML, I want to highlight our paper "Scaling Laws for Reward Model Overoptimization in Direct Alignment Algorithms". TL;DR: we explore the dynamics of over-optimization in DPO/IPO/SLiC and find "reward hacking" issues similar to those in online RLHF.👇