Explore tweets tagged as #LLMCompiler

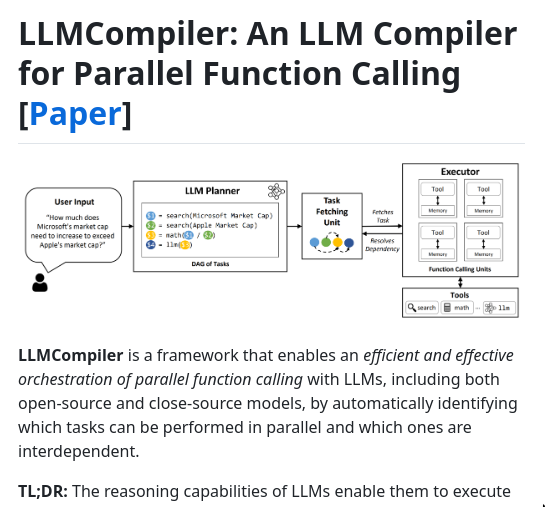

🚨 SOTA Parallel Function Calling Agents in @llama_index 🚨. The LLMCompiler project by Kim et al. (@berkeley_ai) is a state-of-the-art agent framework that enables 1) DAG-based planning, and 2) parallel function execution. Makes it much faster than sequential approaches like
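
The two ideas named in this tweet, DAG-based planning and parallel function execution, can be illustrated with a small sketch. This is not the LLMCompiler or LlamaIndex API: `run_dag`, `fetch`, `combine`, and the `PLAN` dictionary are hypothetical names made up for illustration, and the plan is written by hand rather than produced by an LLM. The sketch assumes the plan is acyclic and starts each task as soon as its dependencies have finished, so independent calls overlap.

```python
import asyncio
import time
from typing import Any, Dict


async def run_dag(tasks: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Run each task once its dependencies are done (assumes no cycles)."""
    running: Dict[str, asyncio.Task] = {}

    async def run(name: str) -> Any:
        spec = tasks[name]
        # Wait for upstream results; tasks with no shared deps overlap here.
        args = await asyncio.gather(*(running[dep] for dep in spec["deps"]))
        return await spec["fn"](*args)

    # All tasks are scheduled before any of them starts executing,
    # because this loop never yields to the event loop.
    for name in tasks:
        running[name] = asyncio.create_task(run(name))
    results = await asyncio.gather(*running.values())
    return dict(zip(running.keys(), results))


async def fetch(label: str) -> str:
    await asyncio.sleep(0.2)  # stand-in for a slow tool or function call
    return label.upper()


async def combine(left: str, right: str) -> str:
    return f"{left}+{right}"


# Hypothetical hand-written plan: "a" and "b" are independent, "join" needs both.
PLAN = {
    "a": {"fn": lambda: fetch("a"), "deps": []},
    "b": {"fn": lambda: fetch("b"), "deps": []},
    "join": {"fn": combine, "deps": ["a", "b"]},
}

if __name__ == "__main__":
    start = time.perf_counter()
    out = asyncio.run(run_dag(PLAN))
    print(out["join"], f"{time.perf_counter() - start:.2f}s")
```

Because `a` and `b` share no dependencies, their two simulated calls run concurrently, and the total time is close to one call rather than two. A sequential agent loop would pay for both.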

A holy grail for agents is combining parallel function execution with query planning capabilities. The recent LLMCompiler paper by Kim et al. (@berkeley_ai) does exactly that, and I’m excited to introduce an integration with @llama_index 🔌. Here’s how it works 👇. 1. Plan:

Our first webinar of 2024 explores how to build agentic software efficiently and performantly 🎉. We’re excited to host @sehoonkim418 and @amir__gholami to present LLMCompiler: an agent compiler for parallel multi-function planning/execution. Previous frameworks for agentic

LLMCompiler-based approach for parallel processing 👇

Here are 7 challenges that AI engineers must solve in order to build large-scale intelligent agents (“LLM OSes”): 1️⃣ Improving Accuracy: Make sure agents can solve hard tasks well. 2️⃣ Moving beyond serial execution: identify parallelizable tasks and run them accordingly. 3️⃣

LLMCompiler with @llama_index: Revolutionizing Multi-Function Calling with Parallel Execution. Link:

2. Meta LLM Compiler. Meta introduces the Meta LLM Compiler, built on Meta Code Llama, featuring advanced code optimization and compiler capabilities. Available in 7B and 13B models, it aids in code size optimization and disassembly tasks. #Meta #LLMCompiler #AI

🚀 556 Billion Tokens: The AI Revolution Begins! Meta's AI redefines programming with custom languages, making coding faster and more efficient. 📊💥 #CodeOptimization #LLMCompiler #TechInnovation #MetaLLM #CompilerEngineering #AI #MachineLearning #FutureTech #CodeRevolution
