Forethought

@forethought_org

Followers

863

Following

38

Media

8

Statuses

55

Research nonprofit exploring how to navigate explosive AI progress.

Oxford, UK

Joined March 2025

We’re hiring! Society isn’t prepared for a world with superhuman AI. If you want to help, consider applying to one of our research roles: https://t.co/9MZfs8xhso Not sure if you’re a good fit? See more in the reply (or just apply — it doesn’t take long)

1

27

90

Forethought is hiring for (senior) research fellows. This is probably one of the best places in the world to conduct macrostrategy or worldview investigation research related to AGI/ASI. The job can be remote and pay is surprisingly good. Consider applying!

1

5

39

Wondering whether to apply to our open roles? Research Fellow Mia Taylor joined 5 weeks ago. We just released a new episode of ForeCast, hearing from her about why she joined, what it's like to work here, and who the work is likely (and unlikely) to suit. https://t.co/x6ThgKqkbN

pnc.st

This is a bonus episode to say that Forethought is hiring researchers. After an overview of the roles, we hear from Research Fellow Mia Taylor about working at Forethought. The application deadline...

0

0

3

We’re also offering a referral bounty of up to £10,000 (submit here: https://t.co/ifbYySNUgs).

docs.google.com

We may reach out to the person based on the information you provide, but it might be good for you also to encourage the person to apply. You can see more about the role here. You might want to think...

0

0

8

See the full ad and apply here: https://t.co/9MZfs8xhso, and see our careers page for more about what it’s like to work at Forethought: https://t.co/QYpWkwWDtr.

forethought.org

We are a research nonprofit focused on how to navigate the transition to a world with superintelligent AI systems.

1

0

10

We’re looking for strong thinkers:
- Senior fellows to lead their own agendas
- Fellows who can work with others and develop their worldviews

We offer freedom to focus on what you think is most important, a great research community, & support turning your ideas into action.

1

0

8

Evaluating the Infinite 🧵 My latest paper tries to solve a longstanding problem afflicting fields such as decision theory, economics, and ethics — the problem of infinities. Let me explain a bit about what causes the problem and how my solution avoids it. 1/20

13

40

200

What is happening in society and politics after widespread automation? What are the best ideas for good post-AGI futures, if any? @DavidDuvenaud joins the podcast — https://t.co/coXpgljcTD

pnc.st

David Duvenaud is an associate professor at the University of Toronto. He recently organised the workshop on ‘Post-AGI Civilizational Equilibria’, and he is a co-author of ‘Gradual Disempowerment’....

1

3

18

How could humans lose control over the future, even if AIs don't coordinate to seek power? What can we do about that? @raymondadouglas joins the podcast to discuss “Gradual Disempowerment” Listen:

pnc.st

Raymond Douglas is a researcher focused on the societal effects of AI. In this episode, we discuss Gradual Disempowerment. To see all our published research, visit forethought.org/research. To...

1

2

9

Should AI agents obey human laws? Cullen O’Keefe (at @law_ai_) joins the podcast to discuss “law-following AI” Listen:

pnc.st

Cullen O'Keefe is Director of Research at the Institute for Law & AI. In this episode, we discuss 'Law-Following AI: designing AI agents to obey human laws'. To see all our published research, visit...

0

4

16

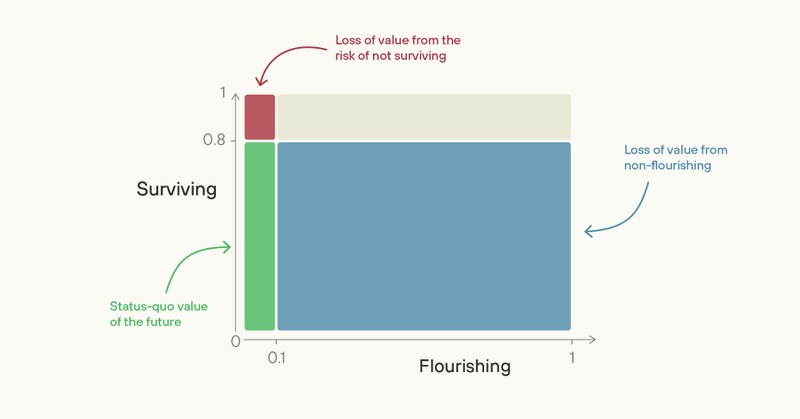

The ‘Better Futures’ series compares the value of working on ‘survival’ and ‘flourishing’. In ‘The Basic Case for Better Futures’, @willmacaskill and Philip Trammell describe a more formal way to model the future in those terms.

1

1

4

You can find this and future article narrations wherever you listen to podcasts:

forethought.org

We are a research nonprofit focused on how to navigate the transition to a world with superintelligent AI systems.

0

0

0

We're starting to post narrations of Forethought articles on our podcast feed, for people who’d prefer to listen to them. First up is ‘AI-Enabled Coups: How a Small Group Could Use AI to Seize Power’.

1

0

1

In the fifth essay in the ‘Better Futures’ series, @willmacaskill asks what, concretely, we could do to improve the value of the future (conditional on survival). Read it here: https://t.co/GwvhMpBuji

https://t.co/RZGwYsmEXj

forethought.org

Forethought outlines concrete actions for better futures: prevent post-AGI autocracy, improve AI governance.

What projects today could most improve a post-AGI world? In “How to make the future better”, I lay out some areas I see as high-priority, beyond reducing risks from AI takeover and engineered pandemics. These areas include: - Preventing post-AGI autocracy - Improving the

0

0

7

One reason to think the coming century could be pivotal is that humanity might soon race through a big fraction of what's still unexplored of the eventual tech tree. From the podcast on ‘Better Futures’ —

1

0

1

New post from Tom on whether one country could outgrow the world:

Could one country (or company!) outgrow the rest of the world during an AI-powered growth explosion? Many stories of world domination involve military conquest. But perhaps a country could become dominant without any aggression? New post explores whether this could happen. 🧵

0

0

1

The fourth entry in the ‘Better Futures’ series asks whether the effects of our actions today inevitably ‘wash out’ over long time horizons, aside from extinction. @willmacaskill argues against that view. Read it here: https://t.co/jeQgWb7v66

https://t.co/hKzOxk6SVt

forethought.org

Forethought argues against the "wash out" objection: AGI-enforced institutions enable persistent impact.

The trajectory of the future could soon get set in stone. In a new paper, I look at mechanisms through which the longterm future's course could get determined within our lifetimes. These include the creation of AGI-enforced institutions, a global concentration of power, the

0

0

3