fofr

@fofrAI

Small update – you can now also use it for 9:16 videos

I'm trying to make a little Replicate model that combines flux pro and seedance to make realistic videos.
- uses the IMG_XXXX.JPG trick for a start image
- uses realistic Seedance with "aggressively mediocre home footage" prompts to make the video
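A rough sketch of how the two steps in that post might chain together, not the actual model code. The model identifiers, the input keys (`prompt`, `aspect_ratio`, `image`), the helper names, and the exact wording of both prompts are all assumptions for illustration. The `run` argument is any callable shaped like the Replicate Python client's `replicate.run(ref, input=...)`, so the flow can be tried with a stub before spending credits.

```python
import random
from typing import Any, Callable, Optional

# Assumed model identifiers; check the real names on Replicate before use.
IMAGE_MODEL = "black-forest-labs/flux-1.1-pro"
VIDEO_MODEL = "bytedance/seedance-1-pro"


def image_prompt(subject: str, rng: random.Random) -> str:
    """Prefix the subject with a camera-style filename (the IMG_XXXX.JPG trick)."""
    return f"IMG_{rng.randint(0, 9999):04d}.JPG {subject}"


def video_prompt(subject: str) -> str:
    """Frame the subject as deliberately unpolished home footage."""
    return f"aggressively mediocre home footage, {subject}"


def make_video(
    subject: str,
    run: Callable[..., Any],
    aspect_ratio: str = "9:16",
    seed: Optional[int] = None,
) -> Any:
    """Generate a start image, then animate it. Input keys are assumptions."""
    rng = random.Random(seed)
    # Step 1: start image from the image model.
    start_image = run(
        IMAGE_MODEL,
        input={
            "prompt": image_prompt(subject, rng),
            "aspect_ratio": aspect_ratio,
        },
    )
    # Step 2: hand the start image to the video model along with the video prompt.
    return run(
        VIDEO_MODEL,
        input={"prompt": video_prompt(subject), "image": start_image},
    )
```

With the `replicate` package installed and an API token set, passing `replicate.run` as `run` should work the same way as the stub, provided the assumed model names and input keys match what the models actually accept.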

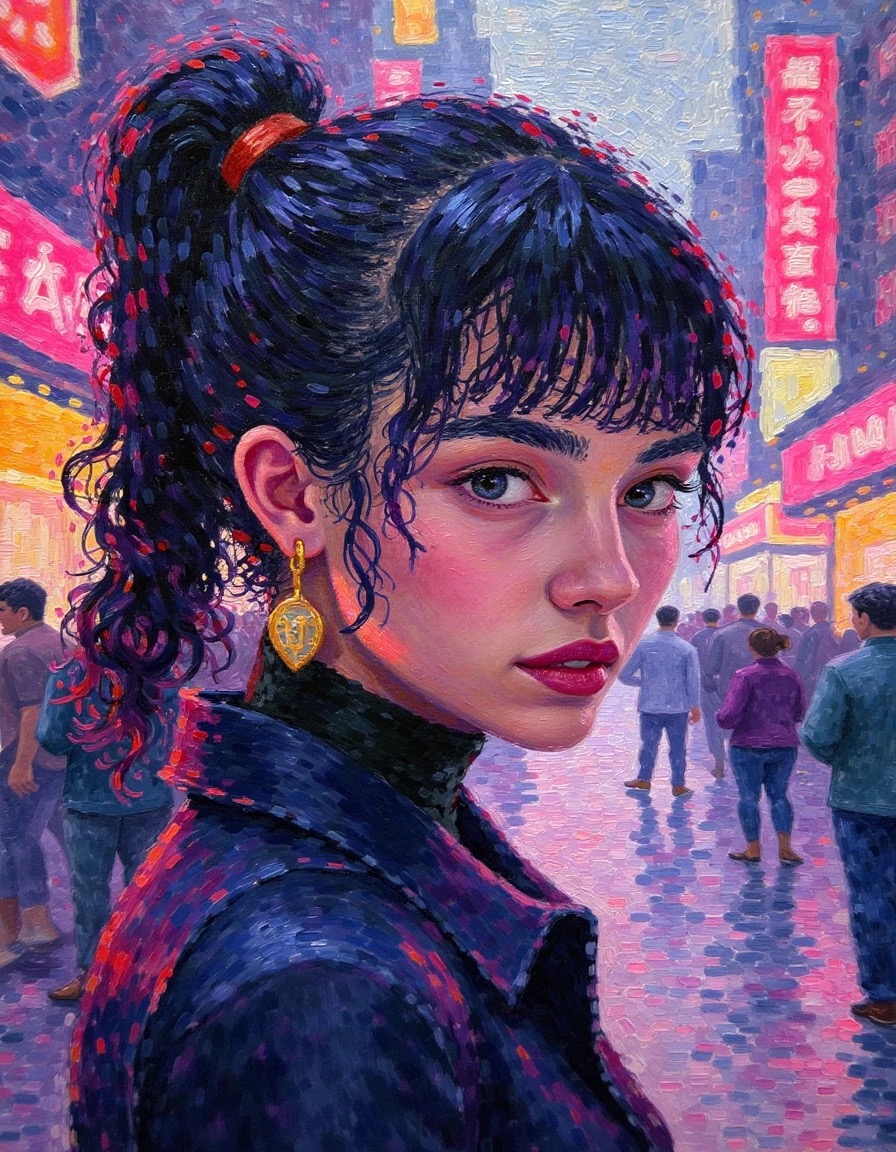

I can't stop running these, it's like looking at another world.

