Evan Miller

@EvMill

Followers

5K

Following

269

Media

63

Statuses

1K

Statistically inclined software developer, occasional blogger about math + stats stuff. Working on evals @AnthropicAI

NYC

Joined May 2009

I hit a bug in the Attention formula that’s been overlooked for 8+ years. All Transformer models (GPT, LLaMA, etc.) are affected. Researchers isolated the bug last month – but they missed a simple solution… Why LLM designers should stop using Softmax 👇

evanmiller.org

Let’s fix these pesky Transformer outliers using Softmax One and QuietAttention.

76

357

2K

RT @AnthropicAI: We’re starting a Fellows program to help engineers and researchers transition into doing frontier AI safety research full-…

0

301

0

RT @MariusHobbhahn: This paper on the statistics of evals is great (and seems to be flying under the radar): The a…

arxiv.org

Evaluations are critical for understanding the capabilities of large language models (LLMs). Fundamentally, evaluations are experiments; but the literature on evaluations has largely ignored the...

0

37

0

RT @JeremyDanielFox: Awesome new research by my friend and colleague @EvMill — adding error bars to evals! Always great to see the Central…

0

2

0

RT @AnthropicAI: New Anthropic research: Adding Error Bars to Evals. AI model evaluations don’t usually include statistics or uncertainty.…

anthropic.com

Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

0

308

0

RT @DarioAmodei: Machines of Loving Grace: my essay on how AI could transform the world for the better.

darioamodei.com

How AI Could Transform the World for the Better

0

1K

0

New sequential A/B test from @Zalando based on the Lévy inequality – check it out!

arxiv.org

Large-scale randomised experiments have become a standard tool for developing products and improving user experience. To reduce losses from shipping harmful changes experimental results are, in...

0

2

7

I think I've finally cracked quantiles… A/B testing medians, instead of means, usually requires an expensive bootstrap. But we can use a likelihood-ratio test (Wilks' theorem) instead. This reduces the quantile problem to a few simple formulas. Read on!

arxiv.org

Quantiles can represent key operational and business metrics, but the computational challenges associated with inference has hampered their adoption in online experimentation. One-sample...

0

1

18

RT @natfriedman: Ten months ago, we launched the Vesuvius Challenge to solve the ancient problem of the Herculaneum Papyri, a library of sc…

0

15K

0

RT @BeidiChen: @ggerganov @EvMill The blog about Softmax+1 plays a very important role when we were trying to identify the root cause of th…

0

1

0

RT @ggerganov: Have a few thoughts about this approach. But most importantly, I'm happy to see @EvMill's idea on softmax1 recognized - to m…

arxiv.org

Deploying Large Language Models (LLMs) in streaming applications such as multi-round dialogue, where long interactions are expected, is urgently needed but poses two major challenges. Firstly,...

0

16

0

👀

@Tracing47202686 @yell1337 @TiRune Unlike with clipped softmax, achieving an exact zero in the output with softmax1 (for a partial no-update) requires the input to be -infinity. However, after @EvMill's blog post we experimented with softmax1 and found it competitive in practice with our proposed approaches.

1

0

13
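The point in the quoted reply can be checked numerically. Below is a minimal sketch, assuming softmax1 is the usual softmax with an extra 1 added to the denominator, i.e. softmax1(x)_i = exp(x_i) / (1 + sum_j exp(x_j)). The function name and the stabilization trick are illustrative choices, not code taken from any of the linked repositories.

```python
import math

def softmax1(xs):
    """Softmax with +1 in the denominator (assumed definition):
    exp(x_i) / (1 + sum_j exp(x_j))."""
    # Shift by m for numerical stability. The "+1" acts like an extra
    # logit fixed at 0, so it becomes exp(-m) after the shift.
    m = max(0.0, max(xs))
    exps = [math.exp(x - m) for x in xs]
    denom = math.exp(-m) + sum(exps)
    return [e / denom for e in exps]

# Weights no longer have to sum to 1, so a head can "say nothing".
print(sum(softmax1([0.0, 0.0])))       # two zero logits: total weight 2/3
print(sum(softmax1([-30.0, -30.0])))   # very negative logits: tiny but nonzero
print(softmax1([float("-inf"), 0.0]))  # exact zero only at -infinity
```

Very negative finite inputs push the weights close to zero, but an exact zero only appears when the input is -infinity, which matches the caveat in the reply.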

RT @Astatide42: Results of my latest nerdsnipe from @TetraspaceWest! The plot below shows the predicted shape of the water flow, with a mo…

0

4

0

RT @capetorch: Following @EvMill great blog post on encountered issues on the GPT-like models training that appear to be related to the Sof…

0

10

0

RT @PPapakonNucl: Kurt Vonnegut's 1969 address to the American Physical Society @APSphysics --on the innocence of the "old-fashioned scient…

docs.google.com

ADDRESS TO THE AMERICAN PHYSICAL SOCIETY New York City, 1969 MY ONLY BROTHER is a cloud physicist. He is nine years older than I am, and was an inspiration to me in my youth. He used to work with the...

0

18

0

Softmax1 update… We now have support for ⚡️Flash Attention ⚡️. This lets us test much larger models than before! To get the code, just pip install flash-attention-softmax-n. Or clone / star the GitHub repo linked below. All credit / kudos to Chris Murphy.

github.com

CUDA and Triton implementations of Flash Attention with SoftmaxN. - softmax1/Flash-Attention-Softmax-N

2

12

52

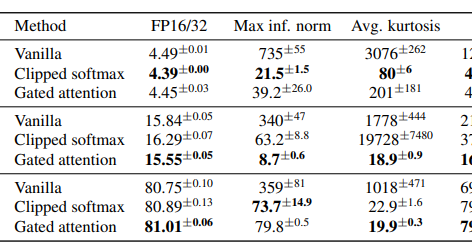

Softmax1, Week 2. Second set of empirical results are in, and they are… 🌸 promising 🌸. Weight kurtosis is roughly the same – but activation kurtosis improved 30X (!!) and maximum activation magnitude reduced 15X (!). Read more from @johnowhitaker:

datasciencecastnet.home.blog

Last week a guy called Evan Miller tweeted out a blog post claiming to have discovered a flaw in the attention mechanism used by transformers today: The phrasing was sensationalist, and many people…

1

17

106

RT @FarisSbahi: Controlling language models has a long way to go - and clever techniques - involving Finite State Machines - offer a way to…

0

21

0

RT @johnowhitaker: New blog post: I've had fun joining in the community effort to investigate @EvMill's claims abou…

datasciencecastnet.home.blog

Last week a guy called Evan Miller tweeted out a blog post claiming to have discovered a flaw in the attention mechanism used by transformers today: The phrasing was sensationalist, and many people…

0

12

0