Zeming Chen

@eric_zemingchen

Followers

554

Following

465

Media

24

Statuses

60

PhD Candidate, NLP Lab @EPFL; Research Scientist Intern @AIatMeta; Ex @AIatMeta (FAIR) @allen_ai #AI #ML #NLP

Lausanne, Switzerland

Joined July 2021

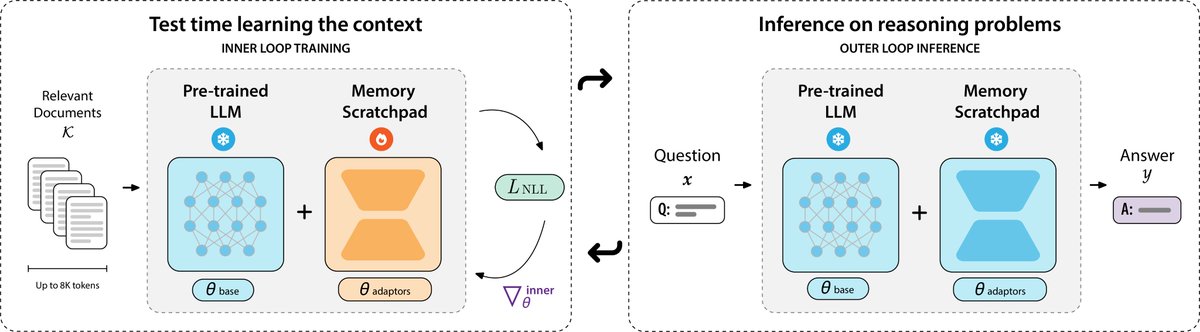

In collaboration with my wonderful co-authors: @agromanou, @gail_w, & @ABosselut! Links 🔗: Project Page: Paper: Code:

1

0

3

RT @QiyueGao123: 🤔 Have @OpenAI o3, Gemini 2.5, Claude 3.7 formed an internal world model to understand the physical world, or just align p…

0

44

0

RT @bkhmsi: 🚨 New Preprint!! Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architectu…

0

84

0

RT @agromanou: If you’re at @iclr_conf this week, come check out our spotlight poster INCLUDE during the Thursday 3:00–5:30pm session! I…

0

14

0

RT @silin_gao: NEW PAPER ALERT: Generating visual narratives to illustrate textual stories remains an open challenge, due to the lack of kn…

0

11

0

RT @bkhmsi: 🚨 New Preprint!! LLMs trained on next-word prediction (NWP) show high alignment with brain recordings. But what drives this al…

0

64

0

RT @bkhmsi: 🚨 New Paper! Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖 Yes! We analyzed 18 LLMs a…

0

31

0

RT @agromanou: 🚀 Introducing INCLUDE 🌍: A multilingual LLM evaluation benchmark spanning 44 languages! Contains *newly-collected* data, pri…

0

61

0

RT @bkhmsi: 🚨 New Paper!! How can we train LLMs using 100M words? In our @babyLMchallenge paper, we introduce a new self-synthesis trainin…

0

24

0

RT @Walter_Fei: Alignment is necessary for LLMs, but do we need to train aligned versions for all model sizes in every model family? 🧐 We…

0

25

0

RT @ABosselut: Hey #NLProc folks, we had a lot of fun last year, so we're inviting guest lecturers again for our Topics in NLP course durin…

docs.google.com

The EPFL Natural Language Processing Lab invites applications to give a guest lecture in the EPFL Topics in Natural Language Processing course during the Fall 2024 semester. Background: Since 2022,...

0

6

0