Chang Liu

@enjoychang

Followers

312

Following

341

Media

179

Statuses

744

Founder working to augment humans with technology: virtual reality, mixed reality, robotics, embodied AI

London

Joined July 2009

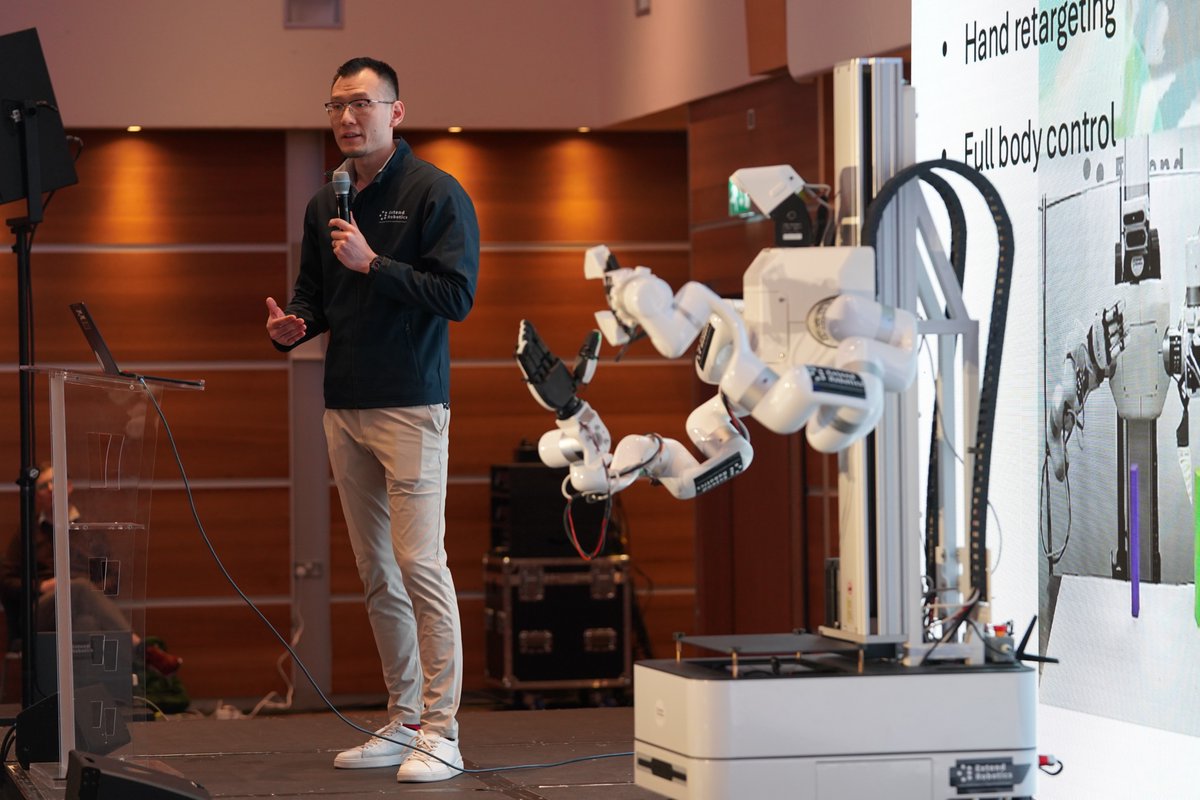

Awesome interview with @Jason and @alex, talking about the future of robotics embodying AI and human intelligence at @extend_robotics. Really appreciate the opportunity to speak on @twistartups!

🤖🚀 From Teleoperated Robots to AI Companions. In this episode, @jason and @alex chat with Extend Robotics' Dr. Chang Liu about teleoperated robots tackling tasks like grape picking. We also dive into: 🌐 Global remote work & salary impacts 🔍 OpenAI's latest features &

RT @enjoychang: Robots providing general labour capacity are the single biggest opportunity of the 21st century, leading to the age of abunda…

Robots providing general labour capacity are the single biggest opportunity of the 21st century, leading to an age of abundance in which everyone will have a quality of life they love. Support @extend_robotics to join this revolution!

RT @HumanoidsSummit: Check out this interview with @enjoychang, conducted by @IlirAliu_ at #HumanoidsSummit London 2025. Chang shares how @…

RT @enjoychang: @NVIDIAGTC Paris at #VivaTech – 2nd Day 🇫🇷 Highlight of the day: I had the incredible opportunity to meet Jensen Huang. Hi…

Great insight from @karpathy during his @ycombinator talk: “It’s less about building flashy demos of autonomous agents, more about building partial autonomy products. And these products have custom GUIs and UI/UX. This is done so that the generation and verification loop of the human

Hold on to your chair: @HumanoidsSummit is about to release our talk on @extend_robotics' new product releases from the London event! #humanoid #vr #virtualreality #XR #embodiedai #physicalai #telerobotics #teleoperation #datacollection #ai #imitationlearning #isaacsim #isaaclab

RT @HumanoidRTech: .@extend_robotics develops an immersive data pipeline to empower embodied AI and humanoid robots to learn efficiently in…

Inspiring introduction to @GoogleDeepMind's Gemini Robotics foundation models by @Nicolas_Heess at @HumanoidsSummit. It is clear to me that we are witnessing a fundamental breakthrough in #physicalAI. The ChatGPT moment for robots does not feel far off. @extend_robotics is in an

Picking is never easy.

Meet Vulcan, our first robot with a sense of touch. 🤖 🖐️ This innovative system combines physical AI with novel hardware solutions (like computer vision, tactile sensing, and machine learning) to reimagine how we complete orders for our customers. With the ability to pick and

Quite impressive progress by the team, congratulations @xuxin_cheng! I hope this can be productised further.

Meet AMO, our universal whole-body controller that unleashes the full kinematic workspace of humanoid robots to the physical world. AMO is a single policy trained with RL + Hybrid Mocap & Trajectory-Opt. Accepted to #RSS2025. Try our open models & more 👉

RT @ZeYanjie: 🤖 Introducing TWIST: Teleoperated Whole-Body Imitation System. We develop a humanoid teleoperation system to enable coordinat…

This is quite a substantial breakthrough.

V2: DynaMoN: Motion-Aware Fast And Robust Camera Localization for Dynamic Neural Radiance Fields. TL;DR: motion aware fast/robust camera localization approach for novel view synthesis; motion of known/unknown objects using a motion segmentation mask; faster pose estimation

Sure, you can DIY some cheap 3D-printed robot arms to collect cheap data. But do you have to use a physical robot to start collecting your own data in the first place? What if you can just connect to a photo-realistic @NVIDIARobotics #isaacsim #isaaclab with all the physics,
