(Fred)erick Matsen

@ematsen

Followers: 2K · Following: 1K · Media: 185 · Statuses: 2K

I ♥ evolution, immunology, math, & computers. Professor at Fred Hutch & Investigator at HHMI. https://t.co/J5Yk17f11n

Seattle

Joined August 2010

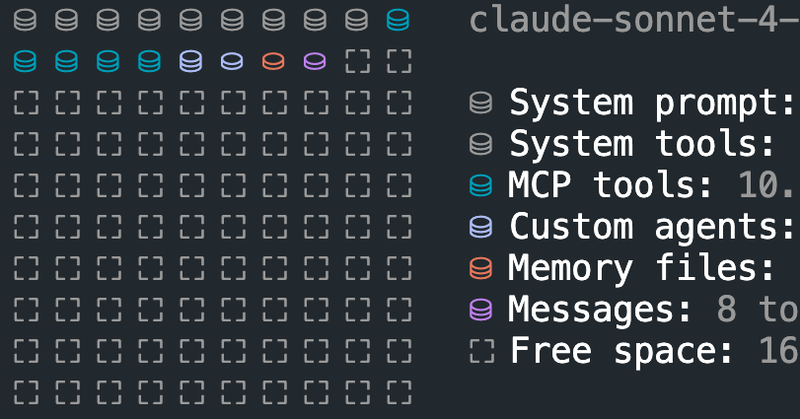

The last five months with Claude Code have completely changed how we work. https://t.co/LpeqQ58xT2 details:
• How agents work (& why it matters)
• Git Flow with agents
• Using agents for science
• The human-agent interface
Questions? What has your experience been?

matsen.group

A four-part series on using coding agents like Claude Code for scientific programming, covering fundamentals, workflows, best practices, and the human side of AI-assisted development.

The Mahan postdoctoral fellowship offers 21 months of support to develop your own research with Fred Hutch computational biology faculty; lots of excellent labs to choose from! Apply: https://t.co/pzPWKcbIjr Faculty:

fredhutch.org

... and second is to have a map from the figures to where they are made in the associated "experiments" code repository ( https://t.co/54WJrDaN98):

I forgot to post two things I liked doing in this paper that I hope catch on. First is to have links in the methods section to the model fitting code (pointing to a tagged version, https://t.co/WMVP0wcWwJ, because the code continues to evolve):
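Linking to a tag rather than a branch can be made mechanical. Below is a minimal Python sketch that builds a GitHub permalink pinned to a tag, using GitHub's `blob/<ref>/<path>#L<start>-L<end>` URL form. The owner, repository, tag, and file path in the example are hypothetical placeholders, not the paper's actual repository.

```python
from typing import Optional


def tagged_permalink(owner: str, repo: str, tag: str, path: str,
                     start: Optional[int] = None, end: Optional[int] = None) -> str:
    """Return a GitHub URL that pins `path` (and optionally a line range) to `tag`.

    A tag is a fixed ref, so the link keeps pointing at the code as it was
    when the paper was written, even as the default branch moves on.
    """
    url = f"https://github.com/{owner}/{repo}/blob/{tag}/{path}"
    if start is not None:
        url += f"#L{start}"
        if end is not None and end != start:
            url += f"-L{end}"
    return url


# Hypothetical example: placeholder owner, repo, tag, and path.
print(tagged_permalink("example-lab", "example-models", "v1.0.0", "src/fit.py", 10, 42))
# prints https://github.com/example-lab/example-models/blob/v1.0.0/src/fit.py#L10-L42
```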

Oh, and here is a picture of a cyborg-Darwin (cooked up by Gemini), after he realized how useful transformers are. For some reason MBE didn't want it as a cover image!

Many thanks to Kevin Sung and Mackenzie Johnson for leading the all-important task of data prep, Will Dumm for code and methods contributions, David Rich for structural work, and Tyler Starr, Yun Song, Phil Bradley, Julia Fukuyama, and Hugh Haddox for conceptual help.

We have positioned our group in this niche: we want to answer biological questions using ML-supercharged versions of the methods that scientists have been using for decades to derive insight. More in this theme to come!

Stepping back, I think that transformers and their ilk have so much to offer fields like molecular evolution. Now we can parameterize statistical models using a sequence as an input!

If you want to give it a try, we have made it available using a simple `pretrained` interface. Here is a demo notebook.

github.com

Neural networks to model BCR affinity maturation.

And because natural selection is predicted for individual sequences, we can also investigate changes in selection strength as a sequence evolves down a tree:

Because this model isn't constrained to work with a fixed-width multiple sequence alignment, we can do things like look at per-site selection factors on sequences with varying CDR3 length:

If a selection factor at a given site for a given sequence is:
• > 1, that is diversifying selection
• = 1, that is neutral selection
• < 1, that is purifying selection.
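The three-way reading of a selection factor translates directly into code. This minimal Python sketch classifies per-site factors by the > 1 / = 1 / < 1 rule; the `tol` band around 1 is an assumption added here, since model outputs are real numbers and will rarely equal 1 exactly. The example factors are made up.

```python
import math


def selection_regime(factor: float, tol: float = 0.0) -> str:
    """Classify a per-site selection factor as diversifying, neutral, or purifying.

    `tol` is an assumed tolerance band around 1 (not part of the model itself).
    """
    if math.isnan(factor) or factor < 0:
        raise ValueError("selection factors must be non-negative numbers")
    if abs(factor - 1.0) <= tol:
        return "neutral"
    return "diversifying" if factor > 1.0 else "purifying"


# Hypothetical factors for three sites of one sequence.
print([selection_regime(f, tol=0.05) for f in [1.8, 1.02, 0.3]])
# prints ['diversifying', 'neutral', 'purifying']
```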

The model is above. In many ways it is like a classical model of mutation and selection, but the mutation model is a convolutional model and the selection model is a transformer encoder that maps an amino-acid sequence to a vector of selection factors, one per site, so the vector has the same length as the sequence.
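The mutation-times-selection structure can be sketched in a few lines. In this Python sketch, the mutation model is assumed to have already produced a per-site neutral probability of an amino-acid substitution, and the two are combined multiplicatively and clipped to [0, 1]; that combination rule is an assumption for illustration. `toy_selection_factors` is a hypothetical stand-in for the learned transformer encoder that only mimics its interface: a sequence of any length in, a same-length vector of factors out.

```python
from typing import List, Sequence


def toy_selection_factors(aa_seq: str) -> List[float]:
    """Hypothetical stand-in for the transformer encoder: one factor per site.

    The real selection model is learned; this toy rule (cysteines are
    strongly constrained, everything else neutral) only shows the shape
    of the output.
    """
    return [0.1 if aa == "C" else 1.0 for aa in aa_seq]


def substitution_probs(neutral_probs: Sequence[float],
                       selection_factors: Sequence[float]) -> List[float]:
    """Scale per-site neutral substitution probabilities by selection factors.

    Assumption: multiplicative combination, clipped to the valid range [0, 1].
    """
    if len(neutral_probs) != len(selection_factors):
        raise ValueError("need exactly one selection factor per site")
    return [min(1.0, max(0.0, p * f))
            for p, f in zip(neutral_probs, selection_factors)]


# Hypothetical neutral probabilities, standing in for the mutation model's output.
seq = "QVCL"
neutral = [0.05, 0.20, 0.10, 0.40]
print(substitution_probs(neutral, toy_selection_factors(seq)))
```

Because nothing in this interface depends on a fixed alignment width, the same functions accept sequences of different lengths.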

The final version of our transformer-based model of natural selection has come out in MBE. I hope some molecular evolution researchers find this interesting & useful as a way to express richer models of natural selection. https://t.co/B2kVzQesjw (short 🧵)

Open position: AI Engineer working on next-generation protein evolution models! Join HHMI's AI initiative at Janelia Farm, Virginia (an amazing place), and work closely with our team. This is going to be fun.

Hats off to first author Kevin Sung https://t.co/1tE2frzeMy and the rest of the team 🙏!

linkedin.com

Experience: Fred Hutch · Education: Massachusetts Institute of Technology · Location: Greater Seattle Area

I was very proud to get "The authors are to be commended for their efforts to communicate with the developers of previous models and use the strongest possible versions of those in their current evaluation" in peer reviews: https://t.co/XYzA4JAy9h

elifesciences.org

Convolutional embedding models efficiently capture wide sequence context in antibody somatic hypermutation, avoiding exponential k-mer parameter scaling and eliminating the need for per-site modeling.
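To make "exponential k-mer parameter scaling" concrete: a model with one free rate per DNA k-mer context needs 4^k parameters, whereas a convolutional model's parameter count grows only linearly with the context width its kernel covers. The Python sketch below compares the two. The parameterization and every dimension in it (3-mer embeddings, embedding size 7, 16 channels, a single linear readout) are hypothetical choices for illustration, not the architecture reported in the paper.

```python
def kmer_param_count(k: int) -> int:
    """One free rate per DNA k-mer context (assumed parameterization): 4**k."""
    return 4 ** k


def conv_param_count(context_width: int, embed_dim: int = 7,
                     channels: int = 16, embed_k: int = 3) -> int:
    """Parameter count of a hypothetical convolutional embedding model.

    Each position is represented by an embedding of its `embed_k`-mer; a 1D
    convolution of width `kernel_width` then sees
    kernel_width + embed_k - 1 bases, so the kernel is sized to match
    `context_width`. All dimensions are illustrative.
    """
    kernel_width = context_width - embed_k + 1
    if kernel_width < 1:
        raise ValueError("context must be at least embed_k bases wide")
    embedding = (4 ** embed_k) * embed_dim                  # k-mer embedding table
    conv = kernel_width * embed_dim * channels + channels   # conv weights + biases
    head = channels + 1                                     # linear readout to one rate
    return embedding + conv + head


for width in (5, 7, 9, 11, 13):
    print(f"{width:>2}-base context: k-mer {kmer_param_count(width):>12,}  "
          f"conv {conv_param_count(width):>6,}")
```

Each extra base of context multiplies the k-mer table by 4 but adds only `embed_dim * channels` weights to the convolution.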

Pretrained models are available at https://t.co/FI9fcuQ35w, and the computational experiments are at https://t.co/q84yi8uFYd.

github.com

matsengrp/thrifty-experiments-1

It's possible that the more complex models don't win by a wider margin because of a lack of suitable training data, namely neutrally evolving out-of-frame sequences. We tried to augment the training data, with no luck.

The resulting models are better than 5-mer models, but only modestly so. We made many efforts to include a per-site rate but concluded that its effects were weak enough that including it did not improve model performance.
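For readers unfamiliar with the baseline: a 5-mer model assigns each site a mutation rate looked up from the 5-base context centered on it, and a per-site rate would multiply that by a position-specific factor. The Python sketch below shows both pieces. The rate table, the `N` padding at sequence ends, the default rate for unseen contexts, and the multiplicative form of the per-site rate are all assumptions for illustration, not the exact models compared in the paper.

```python
from typing import Dict, List, Optional, Sequence


def five_mer_rates(seq: str, kmer_rates: Dict[str, float],
                   site_rates: Optional[Sequence[float]] = None,
                   default_rate: float = 1.0) -> List[float]:
    """Per-site mutation rates from a 5-mer context table, optionally times a per-site rate.

    Sequence ends are padded with "N"; contexts missing from the table get
    `default_rate`.
    """
    if site_rates is not None and len(site_rates) != len(seq):
        raise ValueError("need exactly one per-site rate per position")
    padded = "NN" + seq + "NN"
    rates = []
    for i in range(len(seq)):
        context = padded[i:i + 5]  # 5-mer centered on seq[i]
        rate = kmer_rates.get(context, default_rate)
        if site_rates is not None:
            rate *= site_rates[i]  # hypothetical multiplicative per-site rate
        rates.append(rate)
    return rates


# Hypothetical rate table and per-site multipliers, for illustration only.
table = {"NNACG": 2.0, "ACGTA": 0.5}
print(five_mer_rates("ACGTA", table))
# prints [2.0, 1.0, 0.5, 1.0, 1.0]
print(five_mer_rates("ACGTA", table, site_rates=[1.0, 1.0, 3.0, 1.0, 1.0]))
# prints [2.0, 1.0, 1.5, 1.0, 1.0]
```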