Eleftheria Briakou

@ebriakou

Followers

675

Following

415

Media

17

Statuses

124

Research Scientist @Google Translate

San Francisco, CA

Joined March 2018

RT @YooYeonSung1: 🏆ADVSCORE won an Outstanding Paper Award at #NAACL2025 @naaclmeeting!!. If you want to learn how to make your benchmark *….

0

17

0

RT @slatornews: 👉 Recent studies from @AlibabaGroup, @Cohere_Labs, and @Google highlight major gaps in #multilingua….

0

2

0

RT @bryanlics: Externally retrieving knowledge empowers LLMs for domain-adapted MT ⚖️🩺. But how is knowledge best represented, and how viab….

arxiv.org

While large language models (LLMs) have been increasingly adopted for machine translation (MT), their performance for specialist domains such as medicine and law remains an open challenge. Prior...

0

2

0

RT @iseeaswell: 😼SMOL DATA ALERT! 😼Anouncing SMOL, a professionally-translated dataset for 115 very low-resource languages! Paper: https://….

0

11

0

RT @_danieldeutsch: 🚨New machine translation dataset alert! 🚨We expanded the language coverage of WMT24 from 9 to 55 en->xx language pairs….

0

25

0

Contaminated data can inflate #LLM performance—which factors matter the most? . Our controlled, multilingual pre-training study examines how contamination timing ⏰, data format 📄, model size ⚖️, and language representation 🌍 impact performance overestimation.

Thrilled to share our latest findings on data contamination, from my internship at @Google! We trained almost 90 Models on 1B and 8B scales with various contamination types using machine translation as our task and analyze the impact of contamination.

0

0

5

RT @_danieldeutsch: New application link! I am at EMNLP/WMT this week. Please come find me if you want to learn mo….

0

10

0

RT @CohereForAI: Introducing ✨Aya Expanse ✨ – an open-weights state-of-art family of models to help close the language gap with AI. Aya Ex….

0

140

0

RT @naaclmeeting: 📢 NAACL needs Reviewers & Area Chairs! 📝. If you haven't received an invite for ARR Oct 2024 & want to contribute, sign….

0

26

0

RT @_danieldeutsch: Interested in doing research on Google Translate and Gemini? Good news! I’m hiring for full-time roles on the Google Tr….

0

86

0

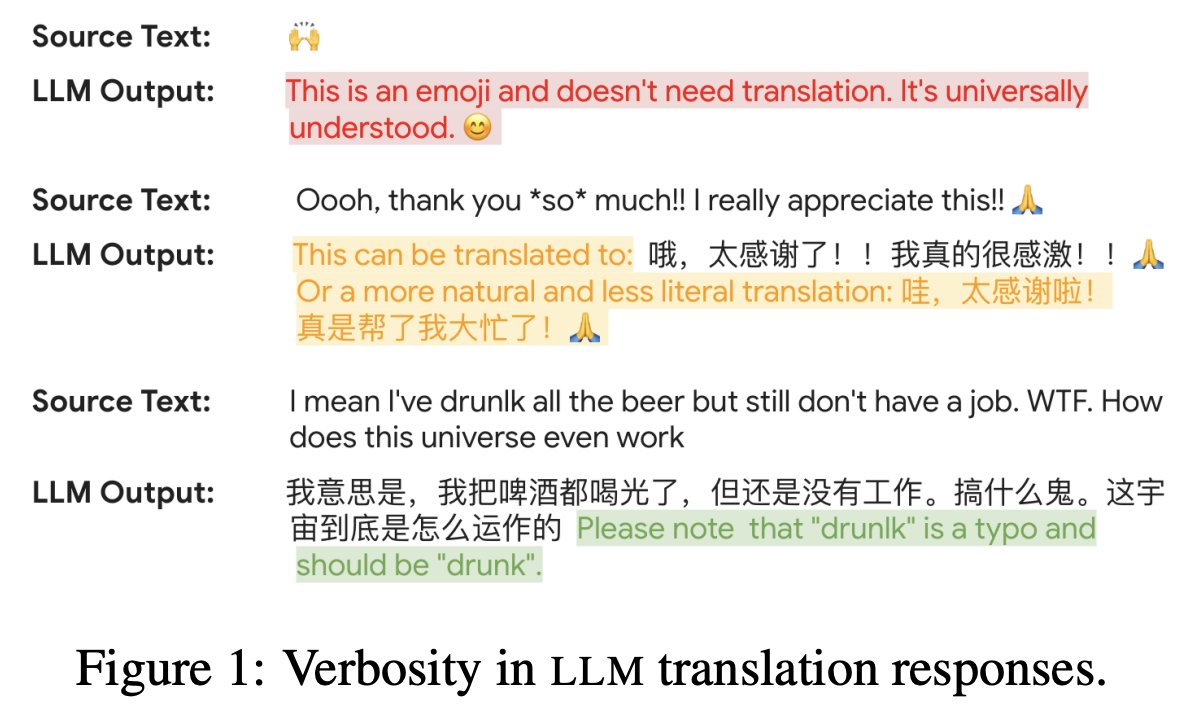

RT @slatornews: Researchers from @Google reveal that verbose #LLMs, 🤖 which offer multiple translations 🔄 or refuse to translate, 🚫 pose si….

slator.com

Google researchers reveal that verbose LLMs, which offer multiple translations or refuse to translate, challenge traditional MT evaluation.

0

4

0

RT @naaclmeeting: 📢 Call for demos is out!!. #NAACL2025 #NLProc. Check the website for submission guidelines and a chance to win the Best D….

2025.naacl.org

Official website for the 2025 Annual Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies

0

6

0

RT @davlanade: Join my lab! I’m currently recruiting new students (MSc & PhD) for admission in the fall of 2025 at.@Mila_Quebec. https://t.c….

mila.quebec

Each student at Mila is supervised by one of our affiliated professors. Applicants are selected through the supervision request process.

0

239

0