Andrea de Varda

@devarda_a

Followers

414

Following

986

Media

24

Statuses

136

Postdoc at MIT BCS, interested in language(s) and thought in humans and LMs

Joined March 2022

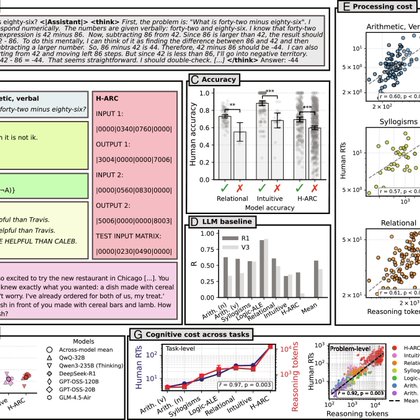

New preprint! 🤖🧠 The cost of thinking is similar between large reasoning models and humans 👉 https://t.co/0G6ay4NQc5 w/ Ferdinando D'Elia, @AndrewLampinen, and @ev_fedorenko (1/6)

osf.io

Do neural network models capture the cognitive demands of human reasoning? Across four reasoning domains, we show that the length of the chain-of-thought generated by a large reasoning model predicts...

4

26

93

The last chapter of my PhD (expanded) is finally out as a preprint! “Semantic reasoning takes place largely outside the language network” 🧠🧐 https://t.co/Z7cgHsvIbu What is semantic reasoning? Read on! 🧵👇

biorxiv.org

The brain’s language network is often implicated in the representation and manipulation of abstract semantic knowledge. However, this view is inconsistent with a large body of evidence suggesting...

10

35

156

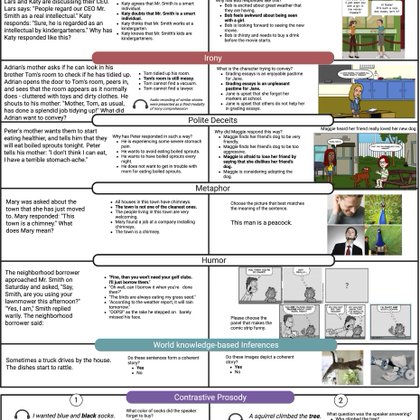

Finally out in @PNASNews: https://t.co/ahDbYpAOA7 (Three distinct components of pragmatic language use: Social conventions, intonation, and world knowledge–based causal reasoning), with many new analyses (grateful for a thoughtful and constructive review process at PNAS!)

pnas.org

Successful communication requires frequent inferences. Such inferences span a multitude of phenomena: from understanding metaphors, to detecting ir...

Thrilled to share this tour de force co-led by SammyFloyd+@OlessiaJour! 8yrs in the making! "A tripartite structure of pragmatic language abilities: comprehension of social conventions,intonation processing,and causal reasoning". W/@ZachMineroff; co-supervised w/@LanguageMIT 1/n

1

15

60

Really nice demonstration (led by @DKryvosheieva and @GretaTuckute) that agreement phenomena seem to carve out a shared subspace in LLMs: very different agreement types rely on overlapping units, also across languages!

How do LLMs process syntax? Do different syntactic phenomena recruit the same model units, or do they recruit distinct model components? And do different languages rely on similar units to process the same syntactic phenomenon? Check out our new preprint (to appear at ACL 2026)!

0

2

16

Look! 👀 Our favorite polygot @ev_fedorenko talks to @QuantaMagazine about the brain's language network and its similarities to LLMs and AI chatbots. 🧠🤖 Bonus photos of postdocs @HalieOlson + @devarda_a! https://t.co/4DacldhEFn

@mitbrainandcog @sciencemit

quantamagazine.org

Is language core to thought, or a separate process? For 15 years, the neuroscientist Ev Fedorenko has gathered evidence of a language network in the human brain — and has found some similarities to...

2

6

25

What does it mean to understand language? We argue that the brain’s core language system is limited, and that *deeply* understanding language requires EXPORTING information to other brain regions. w/ @neuranna @ev_fedorenko @Nancy_Kanwisher

https://t.co/6vvRGpkgE6 1/n🧵👇

arxiv.org

Language understanding entails not just extracting the surface-level meaning of the linguistic input, but constructing rich mental models of the situation it describes. Here we propose that...

6

39

116

Computational psycho/neurolinguistics is lots of fun, but most studies only focus on English. If you think cross-linguistic evidence matters for understanding the language system, consider submitting an abstract to MMMM 2026!

1

0

2

Today in @PNASNews: McGovern researchers @ev_fedorenko + @devarda_a find a surprising parallel in the ways humans and new AI models solve complex problems. 🤔💭 Manuscript 👉 https://t.co/7JHEtBR5pa Summary 👇 https://t.co/1g3QuyGxKJ

mcgovern.mit.edu

Large language models (LLMs) like ChatGPT can write an essay or plan a menu almost instantly. But until recently, it was also easy to stump them. The models, which rely on language patterns to...

0

9

18

Check out the revised version: it adds new reasoning tasks and a replication with six models. Link:

pnas.org

Do neural network models capture the cognitive demands of human reasoning? Across seven reasoning tasks, we show that the length of the chain-of-th...

1

0

4

"The cost of thinking is similar between large reasoning models and humans" is now out in PNAS! The number of tokens produced by reasoning models predicts human RTs across 7 reasoning tasks, including math, logic, relational, and even intuitive (social and physical) reasoning.

New preprint! 🤖🧠 The cost of thinking is similar between large reasoning models and humans 👉 https://t.co/0G6ay4NQc5 w/ Ferdinando D'Elia, @AndrewLampinen, and @ev_fedorenko (1/6)

1

4

26

What makes some sentences more memorable than others? Our new paper gathers memorability norms for 2500 sentences using a recognition paradigm, building on past work in visual and word memorability. @GretaTuckute @bj_mdn @ev_fedorenko

2

8

22

The compling group at UT Austin ( https://t.co/qBWIqHQmFG) is looking for PhD students! Come join me, @kmahowald, and @jessyjli as we tackle interesting research questions at the intersection of ling, cogsci, and ai! Some topics I am particularly interested in:

2

33

118

As our lab started to build encoding 🧠 models, we were trying to figure out best practices in the field. So @NeuroTaha built a library to easily compare design choices & model features across datasets! We hope it will be useful to the community & plan to keep expanding it! 1/

🚨 Paper alert: To appear in the DBM Neurips Workshop LITcoder: A General-Purpose Library for Building and Comparing Encoding Models 📄 arxiv: https://t.co/jXoYcIkpsC 🔗 project: https://t.co/UHtzfGGriY

1

7

39

Check out our* new preprint on decoding open-ended information seeking goals from eye movements! *Proud to say that my main contribution to this work are the banger model names: DalEye Llama and DalEye LLaVa! https://t.co/XNj2adjabc

0

3

8

Are there conceptual directions in VLMs that transcend modality? Check out our COLM spotlight🔦 paper! We analyze how linear concepts interact with multimodality in VLM embeddings using SAEs with @Huangyu58589918, @napoolar, @ShamKakade6 and Stephanie Gil https://t.co/4d9yDIeePd

10

88

511

Road to Bordeaux ✈️🇫🇷 for #SLE2025! Where I am going to present IconicITA!! An @Abstraction_ERC @Unibo and @unimib joint project on iconicity ratings ☑️ for the Italian language across L1 and L2 speakers! @m.bolognesi @BeatriceGi

@a.ravelli @devarda_a

@chiarasaponaro8

0

2

5

🤖🧠 NEW PAPER ON COGSCI & AI 🧠🤖 Recent neural networks capture properties long thought to require symbols: compositionality, productivity, rapid learning So what role should symbols play in theories of the mind? For our answer...read on! Paper: https://t.co/VsCLpsiFuU 1/n

4

35

170

Can't wait for #CCN2025! Drop by to say hi to me / collaborators! @bkhmsi @yingtian80536 @NeuroTaha @ABosselut @martin_schrimpf @devarda_a @saima_mm @ev_fedorenko @elizj_lee @cvnlab

0

14

78

(1)💡NEW PUBLICATION💡 Word and construction probabilities explain the acceptability of certain long-distance dependency structures Work with Curtis Chen and @LanguageMIT Link to paper: https://t.co/m9eYj5uAwF In memory of Curtis Chen.

1

8

22

Is the Language of Thought == Language? A Thread 🧵 New Preprint (link: https://t.co/Cmg65mQIFx) with @alexanderdfung, @ParisJaggers, Jason Chen, Josh Rule, @BennYael, @MITCoCoSci, @spiantado, Rosemary Varley, @ev_fedorenko 1/8

biorxiv.org

Humans are endowed with a powerful capacity for both inductive and deductive logical thought: we easily form generalizations based on a few examples and draw conclusions from known premises. Humans...

1

45

131