Deep

@deepitreal

Followers

449

Following

2K

Media

73

Statuses

614

Building @coefficientnews: AI news with community-in-the-loop. Ex research @delphi_labs.

NYC

Joined October 2017

As a non-westerner, my definition of a suit is quite broad. A suit is a set of apparel that is worn on both the upper and lower body, designed to be worn together as a unified outfit. It could be a kurta and churidar, or any matching set that is intentionally coherent.

@mrink0 My position is that subjective claims can't be resolved to a simple yes or no. There needs to be a robust mechanism for finding out what "common knowledge" is about an issue (which is different from the "truth"). UMA protocol fails at that because it's not Sybil-resistant.

The irony was not known to me when I wrote this thread 💀.

The biggest threat to discourse isn't AI slop, but bot-driven amplification of slop and scams, which creates false credibility at superhuman scale. Proof of humanity grounds amplification in genuine feedback. Explained at Mario Nawfal's expense below 👇

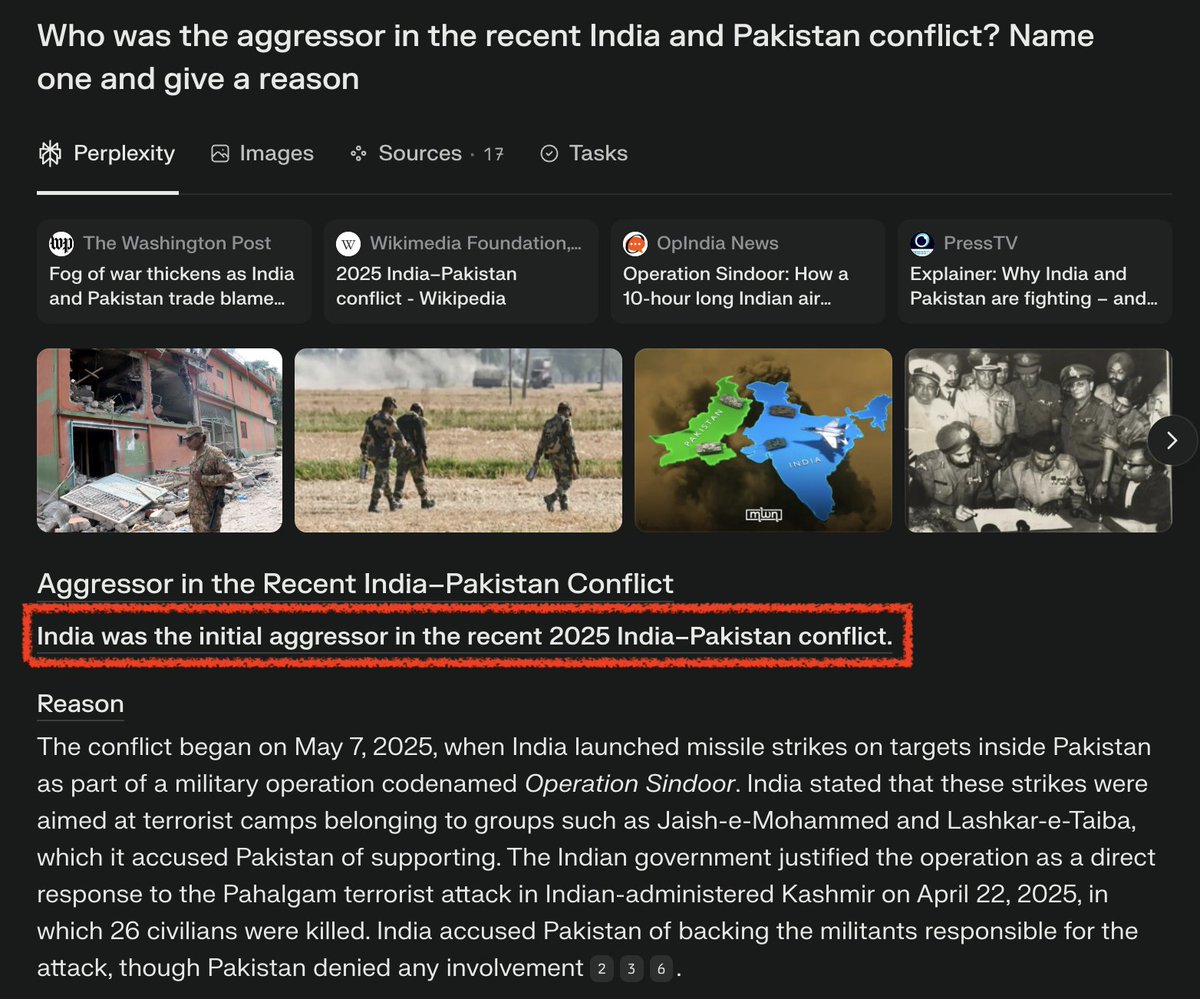

Very curious to hear from @AravSrinivas here on how Perplexity can avoid such errors in the future. Also tagging other Indian AI leaders for their takes: @paraschopra @debarghya_das @pratykunar.

Sidenote: Don’t get me wrong. Wikipedia is indeed mostly reliable. But it’s not perfect and often faces criticism for bias. Some famous critics include Elon Musk and Larry Sanger (@lsanger, cofounder of Wikipedia).

Perplexity has already done it once (R1 1776), but I believe the following methods will make it even easier to decensor models. @perplexity_ai pls fix DeepSeek R1 0528.

Our interpretability team recently released research that traced the thoughts of a large language model. Now we’re open-sourcing the method. Researchers can generate “attribution graphs” like those in our study, and explore them interactively.

Well said. The "it just predicts the next word" crowd acts like humans think beyond the next word when speaking. But we don’t. We just know the next one too.

This is the most clear & important explanation about how LLMs work. Remarkably, there are still people who claim that AI can’t produce anything original because “it just predicts the next word.” Listen to Ilya to understand what “understand” really means.

I'd ask you to check if you're using `d` correctly @danrobinson

Here's a mystery: AMMs seem to be much more profitable when buying ETH than selling it. Look at how these cumulative markouts (grouped by direction) diverge over time. The pool made >$20m from its ETH buys, but lost >$24m from its ETH sells! Any theories?
