@ddvd233

Followers: 20K

Following: 106K

Media: 7K

Statuses: 51K

PhD Student @MIT Media Lab | Multimodal LLMs | MS in Computer Science @Stanford, RA at @StanfordSVL supervised by @drfeifei | Honorary member of the Emory Going-Home Club | My Japanese is really bad

Palo Alto, CA

Joined October 2015

📢 Recruiting reviewers for #genai4health! We received an unexpectedly high volume of submissions. 📅 Reviews due: Sept 19 (AoE), so that accepted authors have time for visas and travel. We welcome expertise in: 1. GenAI in Health (diagnosis, treatment, imaging, robotics, synthetic data) 2. Trust &

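So I spent a whole day fixing NCCL... and found that with virtualization (ACS) enabled, NCCL silently hangs. If the host runs a hypervisor like ESXi, it's really hard to deal with.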

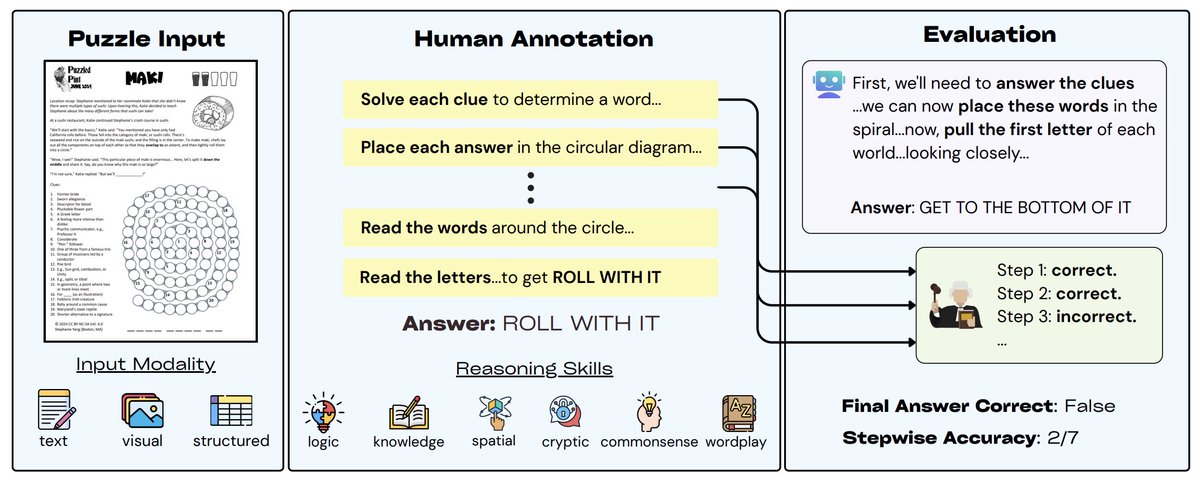

Since my undergraduate days at CMU, I've been participating in puzzlehunts, which involve complex, multi-step puzzles that lack well-defined problem definitions, rely on creative and subtle hints and esoteric world knowledge, and require language, spatial, and sometimes even physical

Most problems have clear-cut instructions: solve for x, find the next number, choose the right answer. Puzzlehunts don’t. They demand creativity and lateral thinking. We introduce PuzzleWorld: a new benchmark of puzzlehunt problems challenging models to think creatively.

The GPU NVIDIA sent me has arrived! I installed it in my workstation and gave it a try; it feels great.

Claude Research pulled up 313 sources in one go... Is the trend now to compete over who has the most sources? lol

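Got a photo of it! It's across from check-in counters 125-128 in the U.S. and International Departures area at Vancouver International Airport lol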

A bit late, but I finally got around to posting the recorded and edited lecture videos for the **How to AI (Almost) Anything** course I taught at MIT in spring 2025. YouTube playlist: https://t.co/JrquYkHCFw Course website and materials: https://t.co/9GutIXUVMZ Today's AI can be

The App Store does something similar: you often see the same app with different ratings in different account regions, mainly because of cultural differences.

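To prevent users of one particular language from mass-downvoting a game and producing unrepresentative ratings, #Steam now displays ratings per language. The benefit is that when users of one language mass-post negative reviews for whatever reason, users of other languages are not affected, and by default those reviews are not counted in the overall score; users can still manually switch to view all languages and the combined overall score. Read the full article: https://t.co/kfLsKfFicU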

Take a look, @ddvd233

The capybara turned into a bear lol

Apple Watch users in the U.S. can finally use Blood Oxygen. Truly moving.

NEW: Apple launching ‘redesigned Blood Oxygen feature’ on Apple Watch in the U.S. today https://t.co/Tfdbvyrtfo by @ChanceHMiller

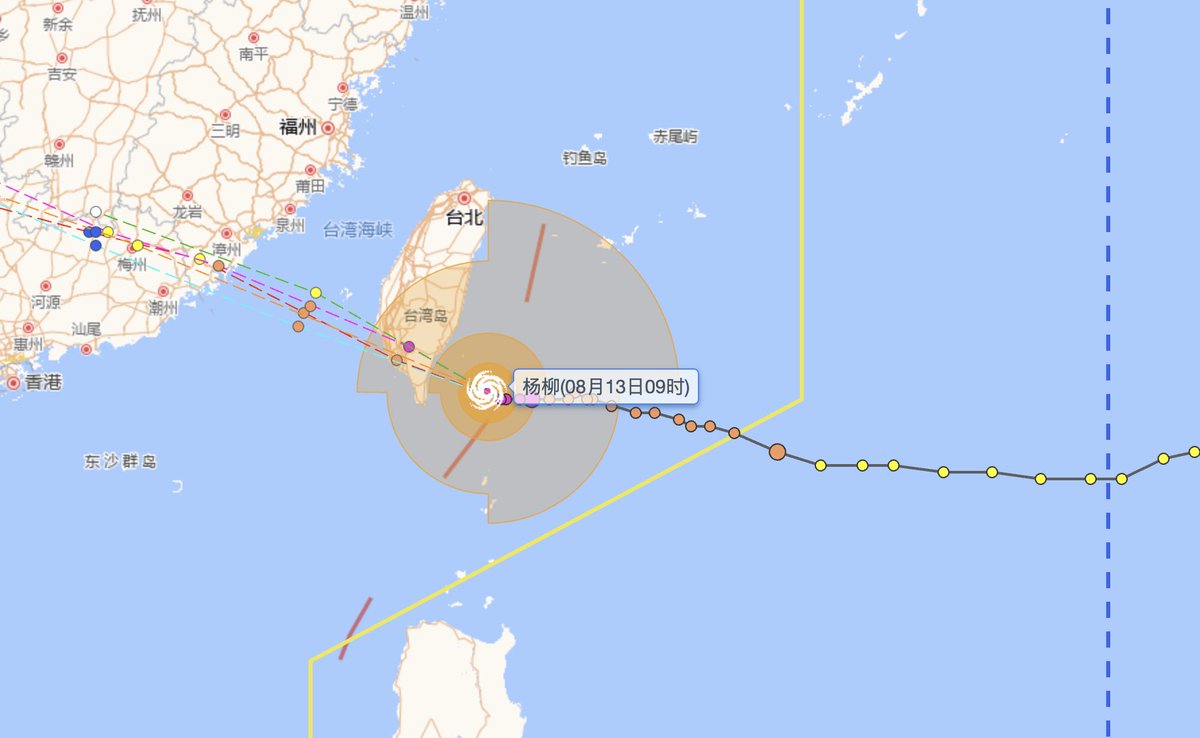

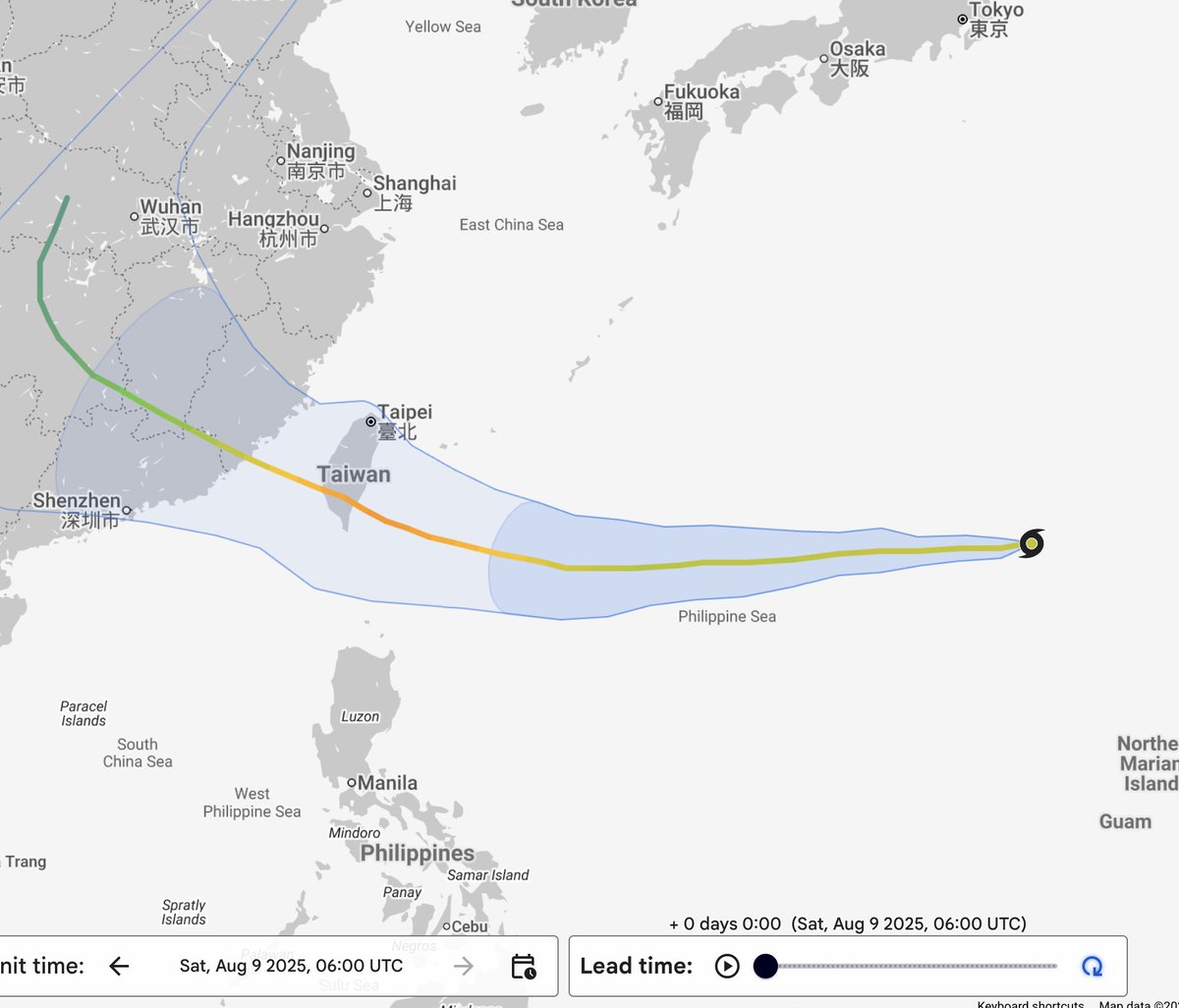

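Follow-up: it really did head to Tainan. Looks like Google's model is more accurate than the official weather agencies of several countries lol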
