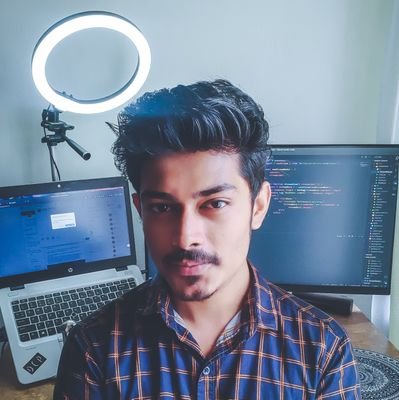

Durjoy Paul

@dcpmrpaul

Followers: 323

Following: 116

Media: 5

Statuses: 58

1x Kaggle Master | Full Stack Engineer (AI)

Joined March 2021

RT @fengyao1909: 🔥 "Vibe coding" is everywhere—but is it really care-free? We introduce 𝐑𝐞𝐚𝐋, an RL framework that trains LLMs with automa…

0

41

0

Thrilled to share my work: "Efficient Zero-Shot Voice Cloning for Bengali Speech Synthesis" is now published.

ieeexplore.ieee.org

Voice cloning has numerous useful applications, including assisting individuals who have lost their ability to speak, movie dubbing, and translating voices into different languages. However, voice...

0

0

1

RT @sh_reya: We have a paper to appear at NAACL this week: PromptEvals! ✨ In collaboration with LangChain, we release a dataset of 2k+ dev…

0

48

0

RT @mdredze: New PhD tradition at @JHUCompSci @jhuclsp! 🎓 We now knight our graduating PhD students and present them with a sword. ⚔️🤺 C…

0

8

0

RT @Ber18791531: Is human language the most effective space for LLMs to reason? 🤔 Introducing Coconut 🥥 (Chain of Continuous Thought): our…

0

83

0

RT @Scirp_Papers: Advanced Face Detection with YOLOv8: Implementation and Integration into AI Modules. More @ Artic…

0

1

0

RT @kellerjordan0: Hey lab: Here is a paper I’d be interested in seeing tried in the NanoGPT speedrun (might require GPU programming). https…

arxiv.org

Large neural networks excel in many domains, but they are expensive to train and fine-tune. A popular approach to reduce their compute or memory requirements is to replace dense weight matrices...

0

14

0

RT @FeiziSoheil: LLMs are powerful but prone to 'hallucinations'—false yet plausible outputs. In our #NeurIPS2024 paper, we introduce a c…

0

17

0

RT @wangly0229: 🎯 Excited to share our latest research on Marco-LLM! 🌍 We aim to improve the multilingual capabilities of large language mod…

0

39

0

RT @vjhofmann: 📢 New paper 📢 What generalization mechanisms shape the language skills of LLMs? Prior work has claimed that LLMs learn lan…

0

14

0

RT @ChantalShaib: There's a general feeling that AI-written text is repetitive. But this repetition goes beyond phrases like "delve into"!…

0

106

0

RT @_akhaliq: Qwen-Agent. Qwen-Agent is a framework for developing LLM applications based on the instruction following, tool usage, plannin…

0

126

0

RT @sumeetrm: Excited to announce our work on Multi-Agent LLM Training! 🚨 MALT is an early advance leveraging synthetic data generation and…

0

16

0

RT @akshay_pachaar: PydanticAI: Build production-grade Agentic AI apps in pure Python! PydanticAI offers the same elegance and ease of use…

0

119

0

RT @tom_doerr: "The open-source LLMOps platform: prompt playground, prompt management, LLM evaluation, and LLM Observability all in one pla…

0

81

0

RT @ManyaWadhwa1: Refine LLM responses to improve factuality with our new three-stage process: 🔎 Detect errors. 🧑‍🏫 Critique in language. ✏️ Re…

0

27

0

RT @gregd_nlp: 🤔 Want to know if your LLMs are factual? You need LLM fact-checkers. 📣 Announcing the LLM-AggreFact leaderboard to rank LL…

0

38

0

RT @thomlake: Does aligning LLMs make responses less diverse? It’s complicated: 1. Aligned LLMs produce less diverse outputs. 2. BUT those…

0

16

0