aomi labs

@aomi_labs

Followers

120

Following

54

Media

5

Statuses

39

Joined July 2025

Here’s our LLM-as-a-judge eval demo of our AI runtime converting intent to execution ⬇️ No wrappers, no hardcoded SDKs. Just raw EVM interaction with abi_encode:

5

3

14

No need for complex UI. Just tell Aomi your goal. Example: "Rebalance my stablecoins to the highest yield" That's all it takes.

0

0

4

We believe this "Native Runtime" approach is the only way to scale crypto agents. By removing the middleware and letting the AI interact directly with the EVM, we get scalability, performance, and generalizability.

0

0

3

Evals: Because our Rust framework is so fast, we run LLM-as-a-judge across multiple threads. The "User" in these logs isn't a human. It's another AI running a regression test. Judge: Demands a task (e.g., "Stake ETH") Agent: Asks for clarifications (e.g., “Which protocol?”) Judge:

1

0

4
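A minimal sketch of the threaded eval loop described above, assuming one thread per test case. `stub_agent` and `stub_judge` are hypothetical stand-ins for the real model calls, which the posts don't show; only the fan-out and collection pattern is illustrated.

```rust
use std::thread;

/// Result of one judged conversation.
#[derive(Debug, Clone, PartialEq)]
pub struct Verdict {
    pub task: String,
    pub passed: bool,
}

/// Hypothetical stand-in for the agent under test (a real run would call an LLM).
fn stub_agent(task: &str) -> String {
    format!("plan for: {task}")
}

/// Hypothetical stand-in for the judge model: passes if the transcript mentions the task.
fn stub_judge(task: &str, transcript: &str) -> bool {
    transcript.contains(task)
}

/// Run every task on its own scoped thread and collect verdicts in input order.
pub fn run_evals(tasks: &[&str]) -> Vec<Verdict> {
    thread::scope(|s| {
        let handles: Vec<_> = tasks
            .iter()
            .map(|&task| {
                s.spawn(move || {
                    let transcript = stub_agent(task);
                    Verdict {
                        task: task.to_string(),
                        passed: stub_judge(task, &transcript),
                    }
                })
            })
            .collect();
        // Joining in spawn order keeps results aligned with the input slice.
        handles
            .into_iter()
            .map(|h| h.join().expect("eval thread panicked"))
            .collect()
    })
}
```

Calling `run_evals(&["Stake ETH", "Swap 1 ETH for USDC"])` returns one `Verdict` per task. Scoped threads let the workers borrow the task strings without cloning them up front.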

How do we do it? Robust runtime support that natively integrates the light client + carefully crafted tool layer letting AI execute freely. ✔️ Fine-grained context to the exact docs, contracts and ABI that AI needs. No “needle in a haystack” ✔️ Tailor tool set to the

1

0

3

Case B: "Swap 1 ETH for USDC" The agent finds the Uniswap Router, checks the deadline, and calculates the path. Case C: "Send 25 USDC to Bob" It handles token decimals and simple transfers effortlessly. Interestingly, the LLM figures out the need to wrap the ETH before

1

0

3
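To make the "handles token decimals" step in Case C concrete, here is a hedged sketch of converting a human-readable amount into integer base units. The function name `to_base_units` is illustrative, and 6 decimals for USDC is an assumption to verify against the token contract's `decimals()` value.

```rust
/// Convert a decimal string such as "25" or "1.5" into integer base units.
/// `decimals` is the token's decimals value (assumed to be 6 for USDC).
pub fn to_base_units(amount: &str, decimals: u32) -> Result<u128, String> {
    let (whole, frac) = match amount.split_once('.') {
        Some((w, f)) => (w, f),
        None => (amount, ""),
    };
    if whole.is_empty() && frac.is_empty() {
        return Err("empty amount".to_string());
    }
    if frac.len() > decimals as usize {
        return Err(format!("more than {decimals} fractional digits"));
    }
    if !whole.chars().chain(frac.chars()).all(|c| c.is_ascii_digit()) {
        return Err("amount must contain only digits and at most one '.'".to_string());
    }
    let scale = 10u128.checked_pow(decimals).ok_or("decimals too large")?;
    let whole_units = if whole.is_empty() {
        0
    } else {
        whole.parse::<u128>().map_err(|e| e.to_string())?
    };
    let whole_scaled = whole_units.checked_mul(scale).ok_or("overflow")?;
    // Right-pad the fractional digits with zeros up to `decimals` places.
    let frac_units = if frac.is_empty() {
        0
    } else {
        let padded = format!("{frac:0<width$}", width = decimals as usize);
        padded.parse::<u128>().map_err(|e| e.to_string())?
    };
    whole_scaled
        .checked_add(frac_units)
        .ok_or_else(|| "overflow".to_string())
}
```

Under the 6-decimal assumption, `to_base_units("25", 6)` yields `Ok(25_000_000)`. Working on strings and integers avoids the rounding errors that floating-point amounts would introduce.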

Case A: (Staking on Lido Test Case) "Stake 1 ETH in Lido" The LLM guides itself through the entire workflow without us integrating Lido specifically: ✔️ Locates the stETH contract and fetches the ABI. ✔️ Encodes the submit() function with the correct payload. ✔️ Simulates the

1

0

3

Ask Anything: ☑️ What’s the best pool to stake my ETH? ☑️ How much money have I made from my LP position? ☑️ How many shitcoins does Vitalik have on Base? Do Anything: ✅ Deposit half my ETH into the best pool. ✅ Sell my NFT collection on X on a marketplace that supports it. ✅

0

1

6

Why go to these lengths? Because the current "agent" meta of wrapping API endpoints and MCP servers is simply too slow for DeFi. By moving logic into a unified Rust binary, we slash latency and eliminate the fragility of maintaining hundreds of bespoke protocol SDKs.

0

0

2

BAML allows us to programmatically format the input, letting us seamlessly switch between stateful user sessions and stateless data parsing. At the script generation phase, the BAML call doesn't need to hunt for "needles in a haystack" - it gets exactly the context it needs, and

1

0

2

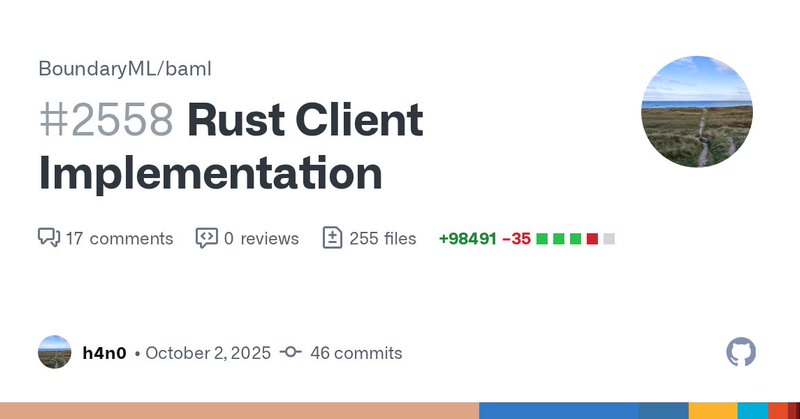

We need everything - type-checking, context management, and light clients - running in a single process to maximize performance. That’s why we proudly contributed back to the ecosystem by shipping the official BAML Rust client implementation. 🦀 https://t.co/viZP0miQX0

github.com

PR Summary This pull request introduces integration tests for the Rust client generator in the baml repository. The primary goal is to ensure that the Rust client generator produces correct and rel...

1

0

2

LLMs are black boxes. To expect reliable output, we need full control of the input. We fine-grain the prompt by engineering rails to fetch, cache, and trim on-chain context. The result? Predictable operations. BAML is the type-checker for the black box.

boundaryml.com

Boundary makes it easy to build, test, and develop LLM applications.

1

0

2

Our pipeline relies on Foundry to execute and BAML to parse. @boundaryML is our secret sauce to force raw text into type-safe structures. "Send $5 USDC to 0xd9g..." ⬇️ USDC(0x7gh...).transfer(0xa3c..., 0xd9g..., 5) We turn natural language directly into executable Forge

1

0

2
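For readers unfamiliar with what "building calldata" involves, here is a hedged sketch of hand-encoding a standard two-argument ERC-20 `transfer(address,uint256)` call. It is not the Forge script the pipeline generates. The helper names are illustrative, and the amount is expected in base units (5 USDC would be 5_000_000 if the token uses 6 decimals, which is an assumption).

```rust
/// 4-byte selector of the standard ERC-20 `transfer(address,uint256)` function.
const TRANSFER_SELECTOR: [u8; 4] = [0xa9, 0x05, 0x9c, 0xbb];

/// Build calldata: selector, then two 32-byte big-endian words (recipient, amount).
pub fn encode_transfer(to: [u8; 20], amount: u128) -> Vec<u8> {
    let mut data = Vec::with_capacity(4 + 32 + 32);
    data.extend_from_slice(&TRANSFER_SELECTOR);
    // address: left-padded with 12 zero bytes to fill a 32-byte word
    data.extend_from_slice(&[0u8; 12]);
    data.extend_from_slice(&to);
    // uint256: a u128 occupies the low 16 bytes, the high 16 bytes are zero
    data.extend_from_slice(&[0u8; 16]);
    data.extend_from_slice(&amount.to_be_bytes());
    data
}

/// Render bytes as a 0x-prefixed lowercase hex string.
pub fn to_hex(bytes: &[u8]) -> String {
    let mut s = String::from("0x");
    for b in bytes {
        s.push_str(&format!("{b:02x}"));
    }
    s
}
```

The result is always 68 bytes: 4 for the selector and 32 for each argument. A real pipeline would more likely rely on a maintained ABI library than on hand-rolled encoding like this.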

To achieve generality and performance, we built a custom AI orchestration framework in Rust that natively integrates light clients. Transactions are passed over as if we're part of the block building pipeline, with our atomic bundle built entirely by LLMs.

1

0

2

Our goal is simple: Process user intent into transactions without bespoke integration of DeFi SDKs. Just plain, direct interaction with blockchain nodes. But this isn't just a chatbot task. It requires resolving addresses, building calldata, and computing gas & slippage

2

2

7

Here's what we see in the crypto space: - Generic chatbots that can't execute real transactions - "AI agents" that break on edge cases - MVPs that don't integrate with actual workflows - Protocols adding AI for narrative, not utility We're here to fix that.

2

0

4

Blockchain execution needs scalability, performance, and determinism even when it’s driven by AI. Traditional agent frameworks in Python or TypeScript don't offer the seamless integration required for our goals. That’s why at Aomi Labs, we build our own AI frameworks alongside

0

0

3

Think of AWS Lambda and serverless architecture, which offers on-demand scaling and offloads state management to external storage. LLMs are data processing units, and by modularizing state management, we can achieve better efficiency and security in data collection.

1

0

2