Ammar Khairi

@ammar__khairi

Followers: 24 · Following: 13 · Media: 6 · Statuses: 19

New account. Research Scholar @Cohere_Labs

London

Joined June 2025

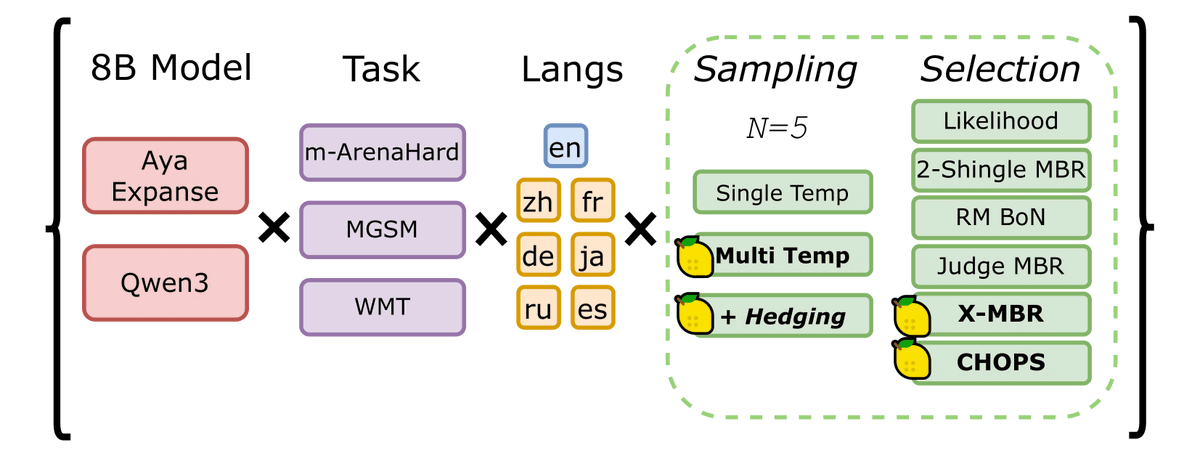

RT @_akhaliq: When Life Gives You Samples: The Benefits of Scaling up Inference Compute for Multilingual LLMs

You can find out more about LLMonade ™️ here:

🚀 Want better LLM performance without extra training or special reward models? Happy to share my work with @Cohere_labs: "When Life Gives You Samples: Benefits of Scaling Inference Compute for Multilingual LLMs". 👀 How we squeeze more from less at inference 🍋, details in 🧵

Thanks @_akhaliq for putting our work in the spotlight! Such a special feeling to have my first work shared by legends in the field!

RT @weiyinko_ml: Wow, wasn't expecting this! Thanks so much for the kind message @Cohere_Labs! Big shoutout to @mrdanieldsouza and @sarahook…

RT @Cohere_Labs: Can we improve the performance of LLMs during inference without the need for extensive sampling OR special reward models?…

RT @mrdanieldsouza: 🚨 New Recipe just dropped! 🚨 "LLMonade 🍋" ➡️ squeeze max performance from your multilingual LLMs at inference time! 👀🔥…

💪🏼 Huge thanks to my incredible mentors: Julia Kreutzer, @mrdanieldsouza, @YeS855811, @sarahookr for guiding me and supporting this work ✨ Find our arXiv release here! 📜:

RT @cohere: We’re excited to be a founding participant in the @StanfordDDL Industry-Wide Forum on AI agents alongside @Meta, @Oracle, and @…

RT @mrdanieldsouza: 🚨 Wait, adding simple markers 📌 during training unlocks outsized gains at inference time?! 🤔 🚨 Thrilled to share our la…

RT @dianaabagyan: 🚨 New pretraining paper on multilingual tokenizers 🚨 Super excited to share my work with @Cohere_Labs: One Tokenizer To R…

RT @Cohere_Labs: This July, join the Cohere Labs Open Science Community for ML Summer School. 📚 This series is organized and hosted by…
